“Reagan doesn’t have that presidential look” — United Artists Executive Rejecting Reagan for Lead Role in The Best Man (1964)

The liberal wave started during the Progressive Era, gained momentum with FDR’s New Deal in the 1930s, crested with LBJ’s Great Society in the 1960s, and ran out of steam by the mid-1970s. Conservative Ronald Reagan won the 1980 presidential election by arguing that “In this present crisis, government is not the solution to our problem; government is the problem.” The Departments of Energy (1977-) and Education (1979-) expanded federal bureaucracy some in the ’70s, but the public mood was shifting back toward smaller government at all levels. Ralph Nader’s idea of creating a bureaucracy for consumer protection went nowhere. The Civil Aeronautics Board went away in 1985 and the Interstate Commerce Commission (railroads and trucking) in 1995, their safety enforcements transferred to other agencies. America, of course, never swings all the way in one direction or the other — right or left — but the momentum and rhetoric shifted right during the conservative resurgence of the 1970s and after. To repurpose for liberalism what Winston Churchill said about the meaning of World War II’s Battle of El-Alamein for Nazi Germany: “this was not the end. It was not even the beginning of the end. But it was, perhaps, the end of the beginning.”

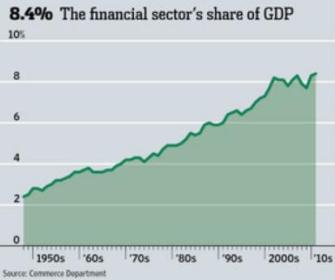

Does America really have a “big government”? Relative to the size of its economy, America’s government as of 2015 was fairly small by international standards, spending around 14.5% of GDP compared to Australia (18%), Germany (19%), Russia (19%), the United Kingdom (19%), and Canada (21%). These World Bank figures are lower than the Office of Management & Budget figures (right) that usually hover ~20%. The U.S. leads the world in total expenditures, though, spending ~$3.8 trillion in 2016 while collecting $3.3 trillion in revenue. America spends more on its military than its next eight competitors and twice that of China and Russia combined (SIPRI), but doesn’t provide health insurance for those under 65, except for Medicaid at the state level. Of course, spending and military power aren’t the only measures of a government’s size or reach; there’s also its legal/regulatory system and the power of law enforcement.

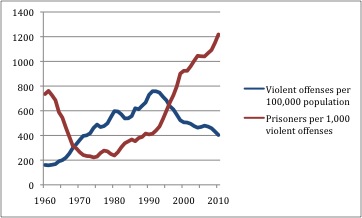

Domestically, there’s a lot of noise about “tyranny” but, collectively, U.S. laws don’t stand out as being overly oppressive in comparison to other nations. Look at the chart on the right and think of how steadily the drumbeat has grown over the last decades about the government getting bigger and bigger. Some of that noise originates among those profiting from beating the drum, selling airtime on radio and cable or ads on blogs. Some of the noise originates from quarters unfamiliar with real tyranny or suspicious of a deep state within intelligence agencies operating outside the public or even regular government’s purview. Conspiracy theories are more entertaining and profitable than real knowledge or perspective. Still, many laws originate in agencies run by non-elected bureaucrats and housed under the executive branch such as the IRS, FDA, OSHA, FCC, EPA, etc., and it’s not surprising that citizens resist or resent these laws when they’re onerous or administered with an inflexible or heavy hand. Governments grow because voting citizens across the political spectrum demand things, because agencies grow to police other agencies, because big companies lobby (i.e., bribe) politicians to pass regulations that small companies can’t afford to comply with, and because bureaucracies have a natural tendency to grow like fungi regardless of the first three reasons.

By the 1970s and ’80s, the Great Society era launched by Lyndon Johnson was waning, and the public was ready to send the pendulum back in the other direction, despite still wanting the services and protection government provided. You could trace one turning point in liberalism’s demise to New York City’s near-bankruptcy in 1975, when the city ran out of money to pay public employees and had to look overseas to sell municipal bonds. While New York was the first city to tighten its budgetary belt, California was the first state, though in its case it cut taxes more than it cut spending. California passed Proposition 13 in 1978, a law banning increases in property taxes (beyond inflation) unless authorized by a two-thirds majority. The brewing conservative resurgence wasn’t just about cutting taxes, but also reducing government intervention in the economy and reinjecting religion into politics. With the election of Ronald Reagan in 1980, the conservative revolution launched under Barry Goldwater in 1964 led to a fundamental changing of the guard in Washington and in many states. Conservatives didn’t take over, but they stole the momentum and have more or less maintained it ever since.

Stagflation & Energy Crisis

Yet, the first president we’ll discuss as part of this conservative resurgence was a Democrat. By the 1976 election, the public wanted the most anti-Nixon, anti-Vietnam, anti-Watergate type candidate they could find. They found it in Jimmy Carter (D), a born-again, peanut-farming governor from Georgia untarnished by Washington politics. Watergate began a trend toward outsider candidates, resulting in state governors Carter, Reagan, Clinton and Bush the Younger all winning the presidency. After defeating Gerald Ford in a tight 1976 election, Carter came to Washington with what some congressmen perceived to be a holier-than-thou attitude and didn’t work well with what he perceived to be corrupt Washington insiders. Like Richard Nixon, he had a fortress mentality in the White House, not initiating relations with congressmen on Capitol Hill. He alienated conservatives by creating the Department of Energy to try to wean the country off Arab oil (the GOP didn’t want more bureaucracy, and oil companies feared breakthroughs on alternative energy), and he alienated Great Society Democrats by overseeing economic deregulation (more later) and insisting on a balanced budget. In that way, Carter was more of an independent and fiscal (budgetary) conservative than a party-line Democrat. As a fiscal conservative, Carter alienated Democrats by refusing to go further into debt and Republicans by refusing to cut taxes. But his main, seemingly intractable problem was stagflation.

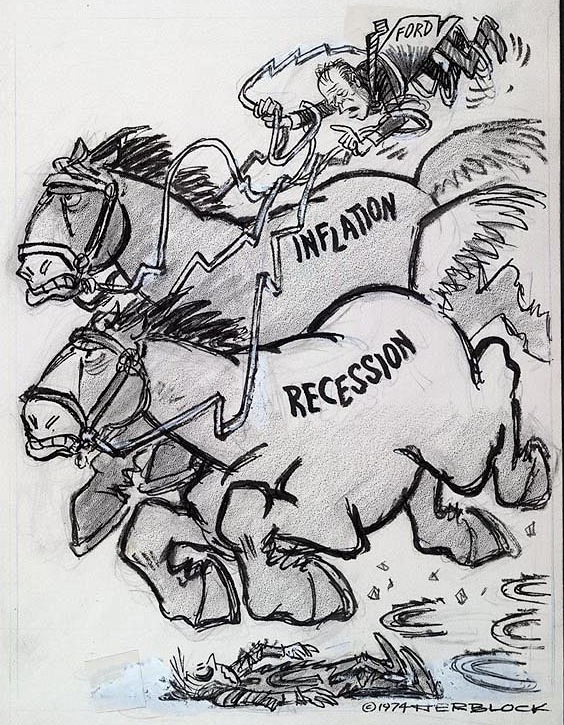

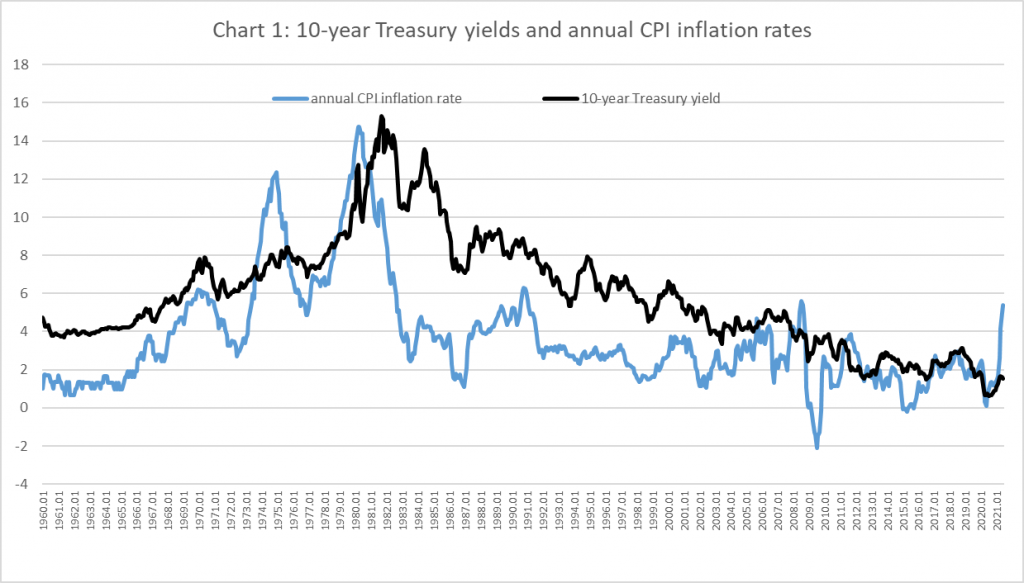

Normally prices don’t rise during a recession but, by the mid-1970s, the U.S. was mired in stagflation: the unlikely combination of inflation and high unemployment. LBJ’s Great Society and the lengthy Vietnam War raised the federal deficit, contributing to inflation. So, too, did President Nixon decoupling American currency from the Gold Standard in 1971, due to too many trade surplus nations swapping greenbacks for a dwindling gold supply (American dollars were convertible to gold dating back to the Bretton Woods Conference in 1944). Going off the Gold Standard created confidence, or fiat, currency instead. Greenbacks from then on were worth whatever people thought they were worth, based on their confidence in America’s solvency and survival. As for coins, they cost more to mint than the amount of copper or nickel on the coin is worth. Caught in between its two mandates — curbing inflation and supporting job growth — the Federal Reserve initially didn’t raise interest rates to stem inflation because they feared that would further weaken the job market. Inflation has a mixed impact on the government itself: it helps because, in real dollars, it reduces the money the government owes; however, higher interest rates will raise the cost of issuing bonds to fund future debt.

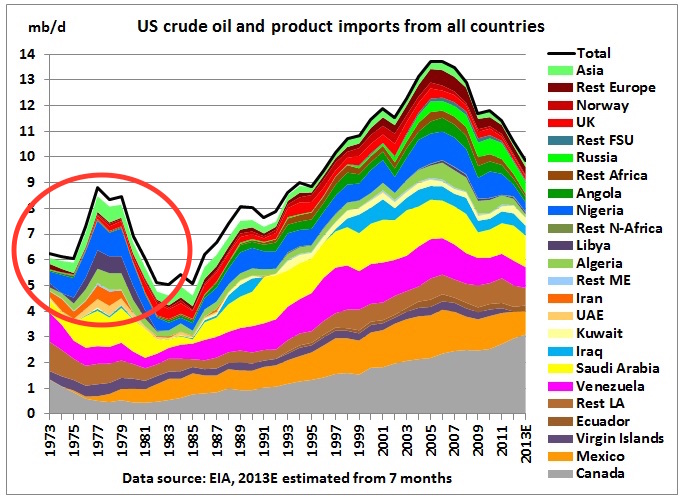

As we saw in the previous chapter, OPEC (Organization of Petroleum Exporting Countries) embargoed oil in 1973 to show Western countries how dependent they’d become while punishing them for supporting Israel. Then OPEC raised prices and, within a few short years, oil climbed from $3 to $12/barrel. The Iranian Revolution that we’ll cover later in this chapter caused another big spike in oil prices in 1978-79. This coincided with increased dependence on foreign oil. Gasoline hit $1 a gallon for the first time in American history, far higher, even adjusted for inflation, than the 5¢ it cost in the 1950s.

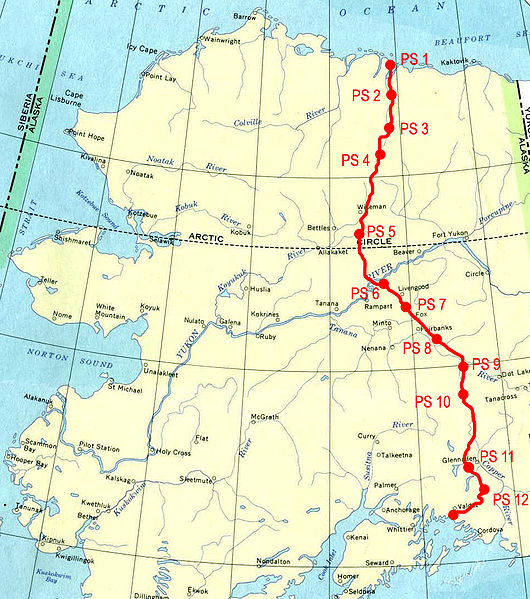

To offset high prices and Peak Oil, the U.S. built, between 1975 and ’77, the Trans-Alaska Pipeline from the North Slope, which was iced in much of the year, to the Port of Valdez, where tankers could ferry the oil to the Lower 48. That still wasn’t enough to offset the price hike. Many Americans overreacted by trying to hoard oil, not realizing that global price and local supply aren’t always linked. There was often no actual shortage in the pipeline. Either way, prices remained high, and oil is so important to industrialized societies that it can drive up inflation even during a recession, thus the stagflation. European countries tax oil enough to deliberately make it expensive and then use the taxes to pay for mass transit, encouraging people to conserve. The United States has historically devoted more to its military budget instead, partly to ensure the flow of cheap oil.

Detroit wasn’t well prepared to manufacture fuel-efficient cars in the 1970s. For years leading up to then, bigger was better. The oil crisis coincided with the overall rise of European and Japanese industry from the ashes of WWII, and they were better at making smaller cars that got good mileage. The U.S. rebuilt those countries as industrial powerhouses after the war and succeeded beyond its expectations. They were now fully rebuilt, which was good for the world economy, but also meant that the U.S. wasn’t the only kid on the block. In Detroit itself, frustration took the form of a white autoworker and his stepson beating Chinese-American draftsman Vincent Chin to death with a baseball bat in 1982. After a plea bargain to manslaughter, the judge gave the killers three years’ probation because they had histories of solid employment (see optional video below).

Among other things, foreign competition meant that American unions would steadily weaken from that point forward, and most middle and working-class families would need both parents working to keep up.

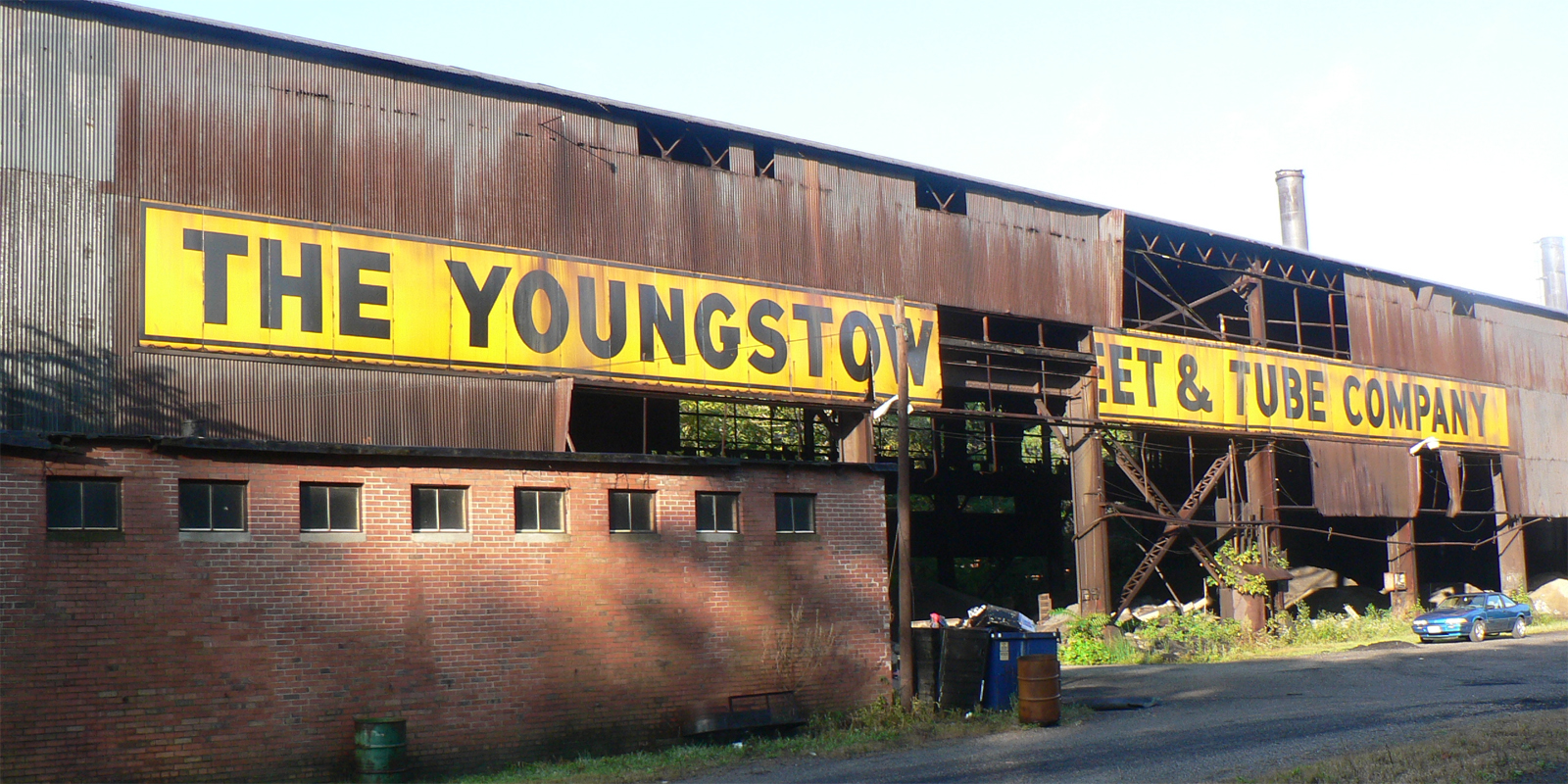

American manufacturing was entering a long, slow period of decline, as one factory after another shut down in the Rust Belt of the industrial Northeast and Midwest. That trend continued into the early 21st century if you look at manufacturing jobs. But if you measure by output, American manufacturing has done well in recent decades. In fact, the U.S. is producing far more than ever; it’s just producing more with automation and lower-paid staff rather than union workers. And there is even an unmet need for semi-skilled workers in American plants that can only be offset by immigration or more young citizens seeking work in manufacturing. As factory unions weakened, public unions suffered setbacks as well. Major cities like New York struggled with a combination of low taxes and well-pensioned public unions (policemen, firemen, sanitation workers, teachers, etc.).

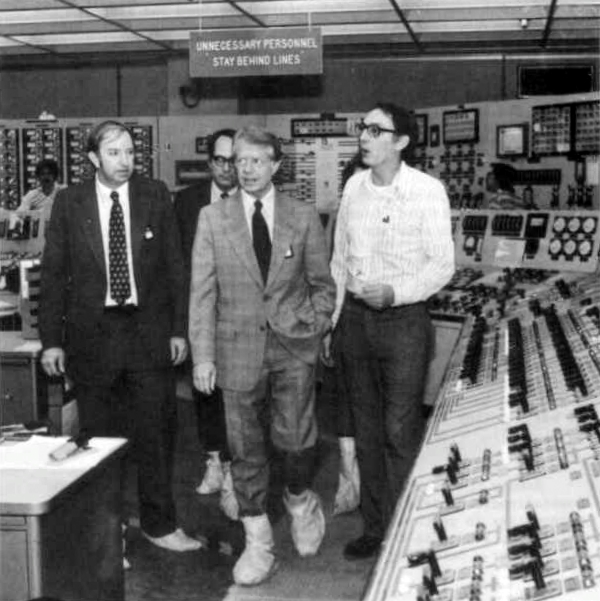

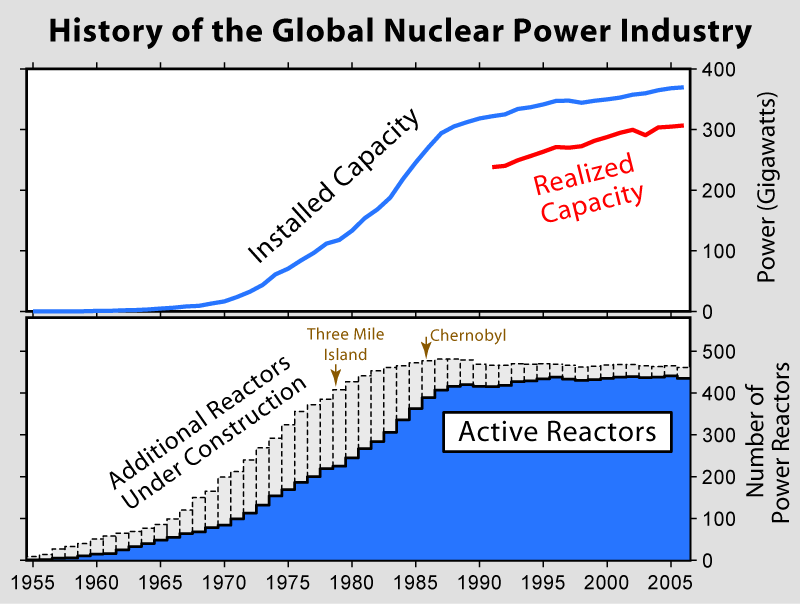

President Carter described the need to transition to alternative energy as a moral imperative, or the “moral equivalent of war.” But his pleas for Americans to conserve energy out of a sense of patriotism mainly fell on deaf ears. Carter learned that, while most Americans are patriotic when it comes to wars, they’re less enthusiastic about turning down the AC in the summer or heat in the winter. The moral argument ran headlong into strands of Sunbelt Christianity associated with the oil industry, what historian Darren Dochuk called “wildcat Christianity” or “oil patch evangelicalism.” Then a near meltdown at the Three Mile Island nuclear plant outside Harrisburg, Pennsylvania in 1979 dampened the public’s enthusiasm to pursue atomic energy as a viable alternative to fossil fuels. Carter had trained as a nuclear engineer himself, serving as an officer in the Navy’s nuclear submarine program in the early Cold War, and visited the plant personally during the height of the crisis. Engineers staved off a full meltdown of the reactor core, but the near-miss spooked the public and few new reactors went into construction afterward. Had they not been able to cool the reactor it would’ve sunk into the Earth, irradiating the soil and water around it before exploding when it contacted groundwater. At least the crisis propelled artificial intelligence, as engineers had to build a robot to clean up the wreckage and water because the site was too contaminated for humans. The robot finished its work in 1993, fourteen years later.

A popular movie called The China Syndrome foreshadowed the near-meltdown, opening just before the actual emergency. Its title came from the fanciful scenario that a reactor might melt through the Earth’s core “all the way to China,” which it wouldn’t. Problems with waste disposal and much worse crises in the USSR in 1986 (Chernobyl) and Japan in 2011 (Fukushima Daiichi) dampened the industry’s prospects. Soviets also covered up an accident at Kyshtym in 1957 and the CIA didn’t mention it either, even though they knew, so as to not dampen Americans’ enthusiasm for nuclear energy. President Eisenhower’s dream of always having one of the giant reactor chimneys within sight as one drove across the country didn’t materialize, and neither did mini-reactors in each home or smaller devices to propel vacuum cleaners and other appliances. We didn’t use nuclear weapons to widen the Panama Canal or clear cuts through western mountains for interstates as some hoped. Nuclear-powered coils that could melt driveway and sidewalk snow didn’t happen in the 1950s and they weren’t about to after Three Mile Island. Still, today, nuclear reactors supply ~20% of America’s electrical power and it’s carbon-free.

When Carter talked about the economic malaise the country was in, he came across as ineffectual in fixing the situation or as telling Americans something they didn’t want to hear. His administration was struggling to keep the basic cost of living and energy from rising faster than wages. For many Americans, especially white-collar workers or union workers with automatic cost-of-living adjustments (COLAs), the price-wage spiral allowed them to keep up. People are skeptical about this concept but inflation requires wage spirals; otherwise no one would be able to afford the higher prices. But wage hikes of the 1970s were uneven across occupations, as is always the case during inflation. Some blue-collar workers lost ground and retirees on fixed incomes saw their “nest egg” savings shrink at the rate of inflation, probably the cruelest effect of high inflation. Investors weren’t happy either. While stock prices rose in nominal dollars, there was no real increase (adjusted for inflation) from 1965 to 1982. Still, stocks were a better investment than a low-yielding savings account which, in effect, lost money as it grew gradually at a lower rate than inflation.
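The arithmetic behind that last point can be sketched in a few lines of Python. This is only an illustration: the function names and the 5% savings rate are assumptions made for the example, while the 13% inflation figure is the 1980 rate cited in this chapter.

```python
def real_return(nominal_rate, inflation_rate):
    """Inflation-adjusted return: (1 + nominal) / (1 + inflation) - 1."""
    return (1 + nominal_rate) / (1 + inflation_rate) - 1


def purchasing_power(balance, nominal_rate, inflation_rate, years):
    """Value of a balance after `years`, expressed in today's dollars."""
    return balance * (1 + real_return(nominal_rate, inflation_rate)) ** years


# Hypothetical savings account paying 5% while prices rise 13% a year.
savings_rate = 0.05      # assumed for illustration
inflation_rate = 0.13    # the 1980 figure cited in this chapter

print(f"real return: {real_return(savings_rate, inflation_rate):.1%}")
print("$1,000 after 5 years, in today's dollars: "
      f"${purchasing_power(1000, savings_rate, inflation_rate, 5):,.2f}")
```

Under these assumed rates, the account balance grows every year on paper, yet the saver loses roughly 7% of purchasing power annually, which is the sense in which a low-yielding account “lost money as it grew.”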

Just as the capacity of wages to rise along with inflation varies among occupations, so too, inflation is uneven from sector to sector. For instance, in the 21st century, despite low inflation in retail and deflation in electronics and air travel, prices in housing, healthcare, and education rose relative to income. In an inflationary environment, borrowing makes sense because the amount you’ll owe later is, in effect, less, so people that hang on to their jobs keep borrowing and spending, which drives inflation even more. In a desperate move to stop double-digit inflation, which stood at a staggering 13% by 1980, Federal Reserve Chair Paul Volcker raised interest rates dramatically, slowing the economy because fewer people could borrow, but at least reversing inflation’s rise. As the short-term borrowing rate between banks soared to 20%, the nation dipped into its worst recession since the 1930s, this one deliberately caused.

Some economists argue that Volcker’s drastic actions were unnecessary because those prices would’ve subsided on their own, without government action. There were many causes of inflation in the 1970s besides low interest rates, and those would’ve naturally taken care of themselves, according to this argument.

Slamming the brakes on the economy by raising rates also raised unemployment, which Volcker supporters like Milton Friedman claimed hovered naturally around 5% anyway. Unemployment shot up to nearly 11%, above. In truth, the country was in a bind that didn’t offer any easy solutions, and Carter sided with the conservative approach of Volcker and Friedman. America took its Volcker chemotherapy, killing inflation cells along with growth and employment cells and deliberately triggering a telegraphed recession. Things got worse before they got better and the economy didn’t bottom out until 1982. Farmers with variable-rate land mortgages also felt the pinch of higher rates, especially those that lost their wheat and corn export trade to the USSR with the end of détente (more below).

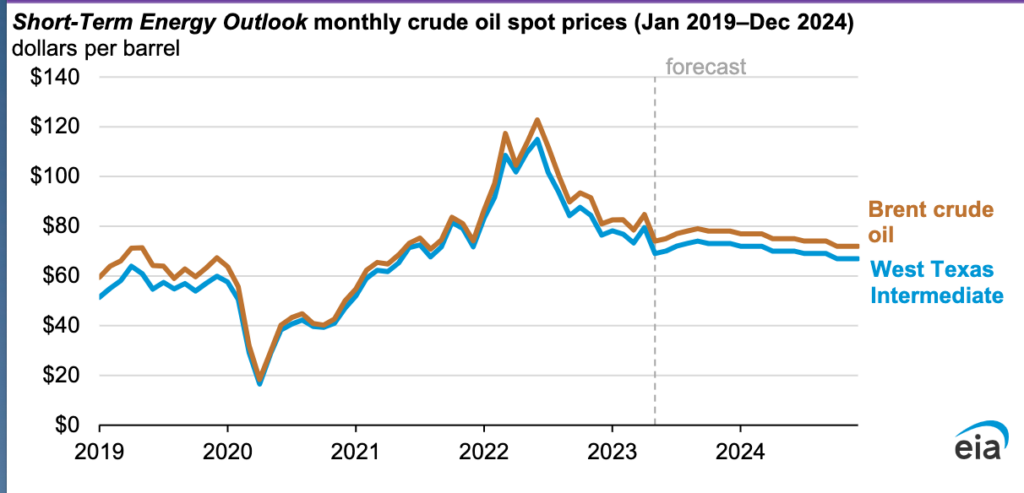

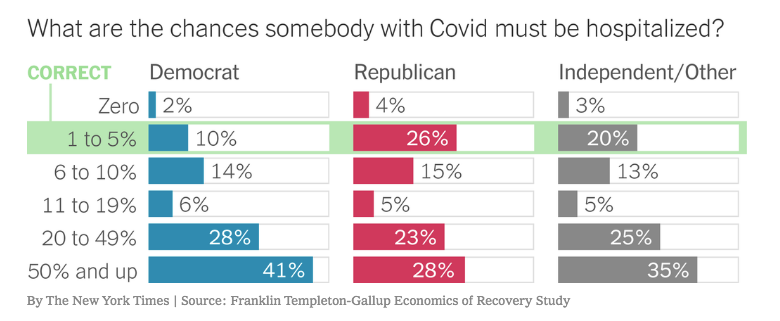

Inflation rose briefly after COVID-19 but has mostly subsided, from 8+% in 2021 to 3+% by December 2023. As we saw in the Chapter 5 section on the Fed, recent inflation resulted from a perfect storm of COVID-related disruptions in supply-chains (especially global), tight oil supplies, Trump-Biden China tariffs, and worker shortages — all combining to cause demand to exceed supply. Some people blamed too much COVID-19 relief under Trump and Biden, but $1200 stimulus checks to Americans didn’t cause global inflation, and they helped stave off a recession. Oil, food, and fertilizer prices were up because of Russia’s invasion of Ukraine. The Federal Reserve can’t fix problems outside their control, including gas and food prices, the part of inflation that’s most troubling to citizens (and voters). By 2023, supply-chain snags were clearing up and gas prices lowering, but a tight labor market kept wages, and thus prices, on the rise, especially in service industries, and high corporate margins (profits) kept prices high, too. Keep in mind, though, that falling inflation rates don’t mean prices are going down (that would be deflation), but rather that the rate at which they’re rising is decreasing. It’s good news for young people entering the workforce that wages will climb in certain sectors as employers compete for help in an economy with low unemployment.
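The difference between a falling inflation rate and falling prices can be seen in a small Python sketch. The $100 basket and the 5% middle year are hypothetical values chosen for illustration; only the 8% and 3% endpoints echo the rates mentioned above.

```python
def price_path(start_price, yearly_inflation_rates):
    """Return the price level at the start and after each year of inflation."""
    prices = [start_price]
    for rate in yearly_inflation_rates:
        # Each year's increase compounds on the previous year's price.
        prices.append(prices[-1] * (1 + rate))
    return prices


# Hypothetical basket of goods costing $100, with inflation easing each year.
rates = [0.08, 0.05, 0.03]   # the 5% middle year is an assumed value
prices = price_path(100.0, rates)

for year, price in enumerate(prices):
    print(f"year {year}: ${price:.2f}")
```

Every entry in the list is higher than the one before it, even though the rate of increase shrinks each year. Prices would only fall if a yearly rate were negative.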

It’s also possible that the recent spike in gas prices will accelerate the transition to EVs and hybrids, which would be good for the environment in the long run. Or it could lead to a political backlash against renewables, because part of the problem is that energy companies aren’t making long-term capital investments in fossil fuels (e.g. refineries) in anticipation of the switch to EVs, contributing to near-term shortages. Every demographic in America except for white Democrats supports expanding oil production in the near term, at least, to keep prices down, and Biden released oil from the Strategic Petroleum Reserve after Russia’s Ukraine invasion. In the case of ExxonMobil, their potential loss in gas revenue with the advent of EVs will be partially offset by the need for more plastic, a petroleum byproduct, as we’ll need lighter-weight vehicles for greater EV range and less tire pollution.

Deregulation

Jimmy Carter made some changes that helped the economy long-term besides initiating the painful process to slow inflation. To deregulate is to lessen the government’s role in a sector and/or take laws off the books. One theme you’ll see in this chapter is that, much like its inverse regulation, deregulation can have unpredictable and unforeseen consequences, good and bad. If it was as simple as the-more-laws-the-better or the-fewer-laws-the-better we would’ve figured that out already. Spurred by Republicans and his intraparty rival, Senator Ted Kennedy (D-MA), Carter deregulated some industries that had been under the government’s control, including transportation (airlines, trucking, rail) and natural gas lines. In 1978, regional startups such as Southwest began to undersell big national airlines on an open market, challenging the original five-headed government-sanctioned oligopoly of United, Eastern, Braniff, American, and Delta. The impact of airline deregulation has been to dramatically cheapen the cost of flying, adjusted for inflation, so that by the late 20th century it was common for middle-class Americans to fly. However, in comparison to the mid-20th century, airlines now put less emphasis on customer service because they distinguish themselves by selling cheaper coach tickets rather than serving customers that are all paying similar, high rates. Trucking deregulation has been a net loss for short- and long-haul truckers, leading to lower pay (with fewer unionized as Teamsters), more dangerous working conditions (that also kill 5k motorists annually), and corrupt lease-purchasing agreements that rarely result in truckers owning their rig.

Communications followed the same deregulatory trend, triggered by a 1974 anti-trust lawsuit breaking up Ma Bell (AT&T), by 1984, into “Baby Bells” including AT&T, Verizon, CenturyLink, etc., which one AT&T engineer described as “trying to take apart a spider web without breaking it.” The government had sanctioned the Bell System’s monopoly in 1921, and their Bell Labs research and development dominated patents, stifling competition, while they rolled their profit into buying up over half the U.S. bonds on the market. Taking direction from the Federal Communications Commission (FCC), the quasi-public utility’s slogan was: “one policy, one system, universal service.” That service was decent, widely accessible, and affordable for local calls but expensive for long distance, and public pay phones were hard to maintain. Pre-cable TV piggybacked on AT&T’s transmission lines, and Richard Nixon hated the big three networks that could afford to pay AT&T’s ransom. So Nixon’s Office of Telecommunications Policy and DOJ overcame AT&T’s lobbyists and filed a suit that, ten years later (1984), opened up telecom for competitive pricing just before the advent of mobile/cell phones, starting with car phones and “bricks.” They also opened up competition in satellites just as they replaced expensive, long distance communication lines, and freed cable TV to compete with networks in 1972. The 1996 Telecommunications Act then opened up any companies to compete in any market. The biggest company now is none other than AT&T, but it’s unlikely that smartphones would’ve come about in the same way under just Ma Bell.

Other industries deregulated in the 1970s. Credit card companies won the right to charge unlimited interest rates, to the detriment of the working poor and unfrugal middle class but in keeping with free-market principles. Jimmy Carter also signed off on legislation allowing for the creation of Business Development Companies (BDCs) that gave small investors a tax-friendly way to invest in private businesses and riskier startups than those otherwise allowed in the SEC-regulated public stock markets. All this helped lay the foundation for economic recovery in the 1980s but wasn’t enough to help Carter at the time. Finally, on a lighter note, Carter signed legislation legalizing home-brewing in 1978 that advanced the rise of craft beers as independent, artisanal brewers initially honed their craft in garages.

Arms Race

Meanwhile, Jimmy Carter had plenty of problems overseas to deal with. Building on Richard Nixon’s foundation, the U.S. officially normalized relations with China in 1979 but Nixon’s détente with the Soviets came unraveled under Carter. The Soviets gained influence in Ethiopia (East Africa) as a Marxist state killed hundreds of thousands in a Red Terror and various relocation schemes. Carter’s emphasis on human rights threatened the Soviets and the arms race spiked dramatically because of better ICBMs (inter-continental ballistic missiles) and multi-warhead MIRVs (multiple independently targetable reentry vehicles) that, according to rumor at least, could be dosed with biological weapons. This LGM-118 “Peacekeeper” MIRV the U.S. tested over the Kwajalein Atoll divides into eight 300 kiloton warheads, each ~20x more powerful than the Hiroshima bomb if detonated.

In the 1970s, each side was testing submarine-launched ballistic missiles (SLBMs) and air-launched cruise missiles that could be loaded onto traditional bombers like America’s B-52s. The U.S. placed medium-range cruise missiles similar in design to the Nazis’ old V-1 “flying bombs” in southern England, loaded onto mobile launchers on trucks parked in underground bunkers. These relatively cheap weapons, each costing only ~¼ of a jet fighter, had a 2k-mile range and could incinerate a small city and kill everyone in a ten-mile radius. In general, Soviets focused more on size whereas the U.S. focused on accuracy. Each side additionally worked on neutron bombs that could wipe out life without destroying property, though the U.S. shelved plans to arm NATO with the new weapons due to public pressure. Near the end of his presidency, Carter announced that both sides had more than 5x as many warheads as they had in the early 1970s. A round of SALT (strategic arms limitation talks) between Carter and the Soviets slowed the madness some, limiting each side to 2400 warheads and convincing the Soviets to halt production on new MIRVs that carried up to 38 separate warheads.

Middle East

Six months after the SALT II talks in Vienna, in December 1979, the Soviets invaded Afghanistan to support communist forces in a civil war there against the jihadist Mujahideen. In response, Carter embargoed agricultural trade, boycotted the 1980 Moscow Olympics, and issued a doctrine stating America’s intention to protect its oil interests in the Middle East. However, nothing could compel the Soviets to relent in their misguided quest to conquer Afghanistan and arms reduction talks stalled.

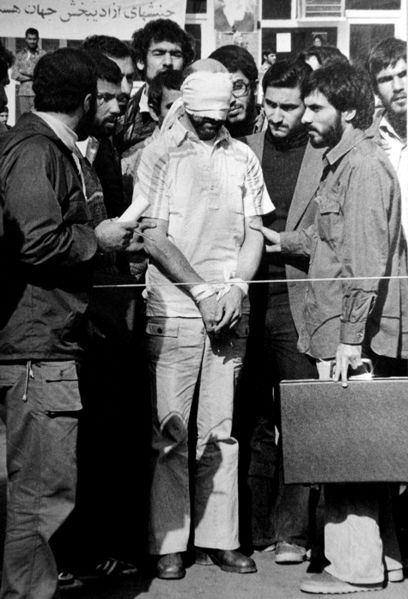

West of Afghanistan, resentment had been building in Iran against the U.S. ever since 1953 when the CIA helped overthrow their democracy and replace it with a dictatorship (the Shah) that sold the West cheap oil. America’s role in that overthrow grew in the Iranian imagination as the years passed. Unfortunately for Carter, he reaped what President Eisenhower and his successors sowed. The only accommodation the Shah had made to free speech was within mosques, so anti-Western sentiment fused with fundamentalist Islam there over the decades. When Islamic revolutionaries took over the country in early 1979, the Shah escaped to Egypt and Mexico, then sought cancer treatment in the U.S. after Henry Kissinger insisted on it in exchange for endorsing Carter’s SALT II treaty. Admitting the Shah was the straw that broke the camel’s back for the new leaders, who seized the American embassy in Tehran, capturing diplomats and Marines in the process. Before the Carter administration admitted the Shah to a New York hospital, it should’ve evacuated its embassy in Tehran. According to international law, embassies are enclaves protected from the societies around them, but U.S. embassies were breached in Vietnam (1968), Iran (1979), Lebanon (1983), Tanzania (1998), Kenya (1998), and Libya (2012).

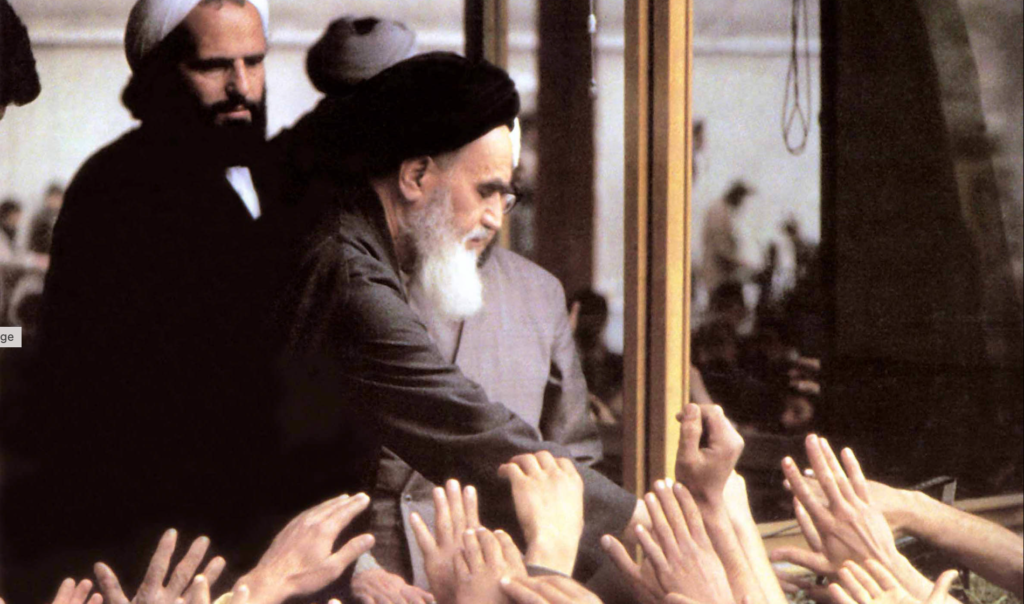

The Iranian Hostage Crisis gave rise to a warped, symbiotic relationship between western media and terrorists. The American TV-watching public was riveted by the event, but the kidnappers were granting the media access inside the embassy only because it provided them a forum to broadcast their anti-American message. At least the English-speaking kidnappers and hostages bonded over pro football, as western media gave them cassette recordings of the 1980 Steelers-Rams Super Bowl to listen to together. Meanwhile, Iranian TV televised executions of Iranians live, nightly, as an assortment of factions within the country fought for control of the revolution. At first, Carter hoped the crisis could divert Americans’ attention away from the domestic economy, but that backfired as the crisis wore on and ABC’s Nightline covered angry Iranians burning Uncle Sam in effigy. CNN launched in 1980 to take advantage of viewer interest in the crisis. For Americans still in a post-Vietnam funk, it was like getting salt poured in their wound. Finally, Carter ordered a military rescue, but poor planning compromised the operation and one helicopter crashed into the C-130 tanker aircraft there to re-fuel the helicopters, killing eight Americans whose bodies were left behind. The CIA foolishly chose a desert staging area with a highway running through it (they blew up a fuel truck after the driver and other witnesses drove off in a pickup, and temporarily kidnapped 44 bus passengers). The fiasco further torpedoed Carter’s presidency and raised the prestige of revolutionary leader Ayatollah Khomeini (below), exiled by the Shah in 1963:

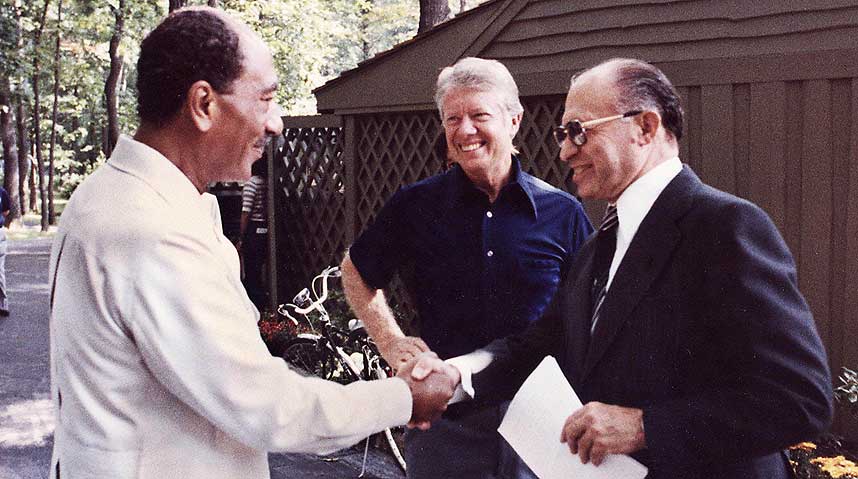

The one big feather in Carter’s foreign policy cap was negotiating peace between Israel and its most formidable adversary, Egypt. Carter built on the Shuttle Diplomacy initiated by Henry Kissinger in the early 1970s, whereby the U.S. no longer supported Israel unconditionally but rather tried to broker peace between Israel and its neighbors. Building on an idea raised by CBS News’ Walter Cronkite in a split-screen interview with the leaders of Israel and Egypt, Menachem Begin and Anwar el-Sadat, Carter invited both to Camp David, Maryland for a retreat.

At first, he had to walk from one end of the compound to the other to relay messages, but he eventually got both men in the same room to talk through interpreters. In the Camp David Accords, Israel agreed to return the Sinai Peninsula in exchange for Egypt’s recognition of Israel’s right to exist. The two have been at peace ever since, though the democratic revolution in Egypt in 2011 threatened the relationship because, potentially, a populist Muslim Brotherhood in Egypt might favor war with Israel. As for Sadat, members of his own army assassinated him during a parade for negotiating with Israel, underscoring the resistance to peaceful compromise that Middle Eastern leaders face among their own populations. A similar fate awaited Israeli leader Yitzhak Rabin after he laid out a framework for peace with Palestinians in the 1993 Oslo Accords. He was killed by a right-wing Israeli opposed to peace.

For Carter, his success with Israel and Egypt wasn’t enough to offset setbacks in Iran and Afghanistan. In retrospect, Afghanistan was causing the Soviets more harm than the Soviets were causing the U.S., but Iran plagued Carter as he approached re-election in 1980. After months of negotiations to get their assets unfrozen in American banks, Iranians released American hostages within minutes of when Carter left office. As new president Ronald Reagan took the inaugural oath in January 1981, freed hostages hit the airport tarmac.

Morning In America

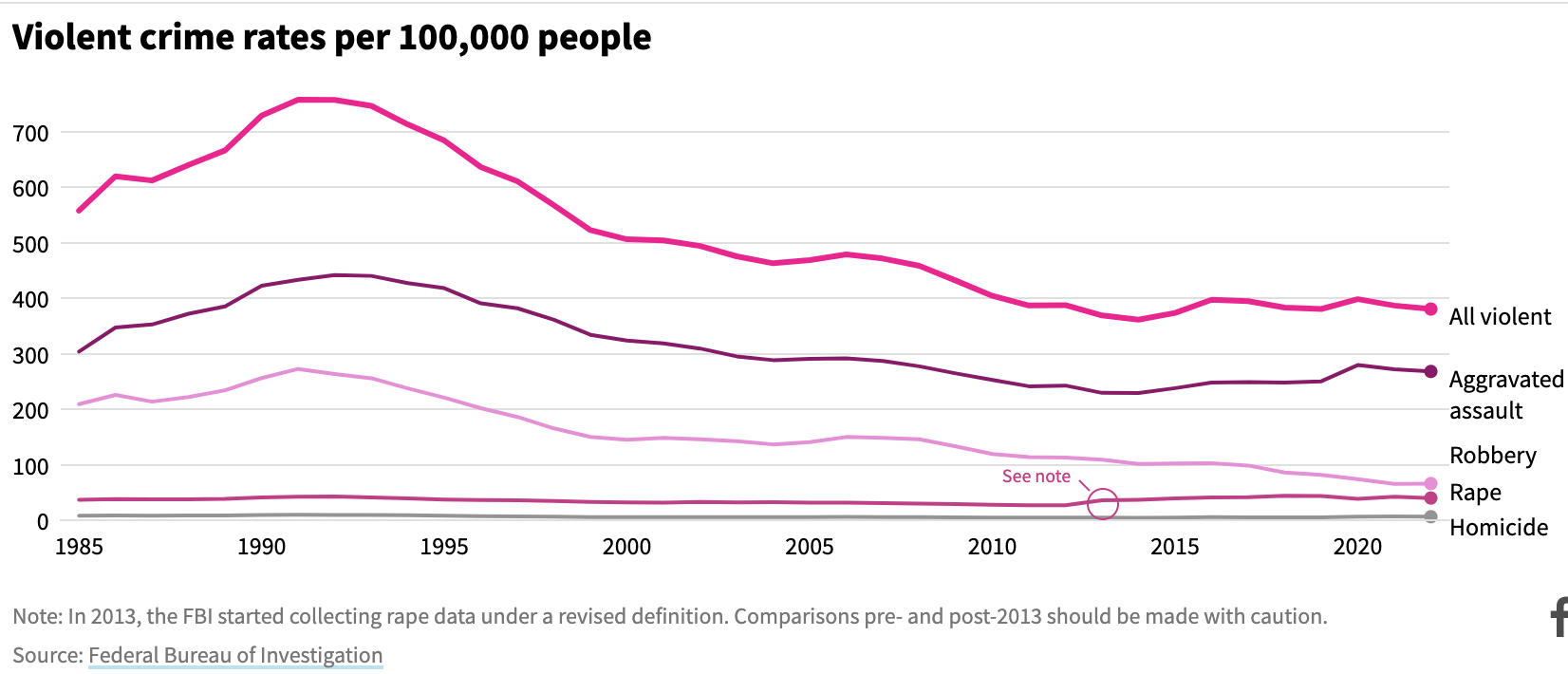

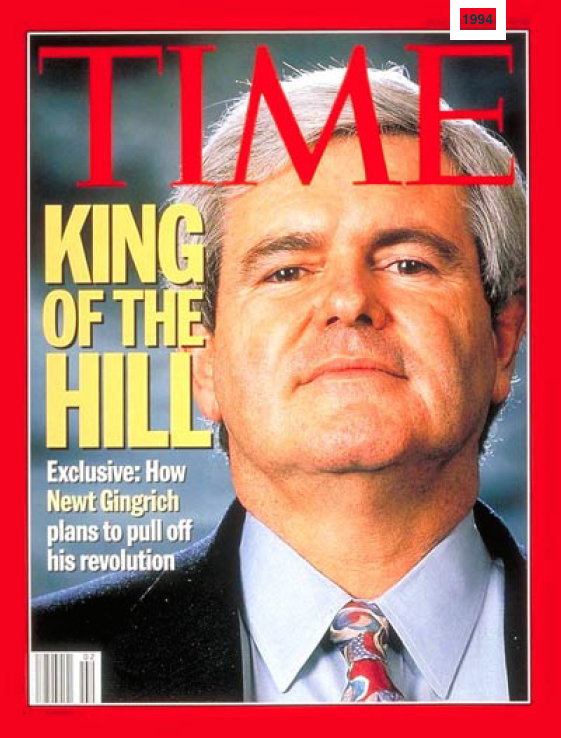

It’s hard to say whether the Iranian Crisis cost Carter re-election or not. By 1980, the time was right for the Reagan Revolution as Americans were ready for a conservative change of pace. The Misery Index (stagflation), as economists came to call it, set the stage for Republican victory by Californian Ronald Reagan over Carter in 1980. The actor and former liberal had tried to lure Dwight Eisenhower into running as a Democrat in 1952 and supported Harry Truman’s plan for universal healthcare insurance until his conservative (second) father-in-law talked him out of it. As president of the Screen Actors Guild, he veered right during the Red Scare, serving as an FBI informant. With no foreknowledge of classic movie theaters or TCM, he negotiated for the actors’ future royalties, or residuals (he’d seen the popularity of 1939’s Wizard of Oz on TV starting in 1956). Reagan became a spokesman for General Electric in the early 1960s and advocated for deregulation of all industries. He became governor of California in 1966 after campaigning for Barry Goldwater’s presidential run in 1964, saying of his defection that “I didn’t leave the Democratic Party; it left me.” As California governor in the ’60s, he deftly used race riots and the counterculture as foils. He ran on a law and order platform, just as Nixon did in the presidential election, saying that inner cities were “jungle paths after dark.” He ordered tear gas dropped from National Guard helicopters on protesting “beatniks and malcontents” at Cal-Berkeley and upbraided professors for their leftist leanings. By 1980, he led movement conservatism. If Goldwater was the godfather of the conservative counter-revolution, Reagan was its messiah.

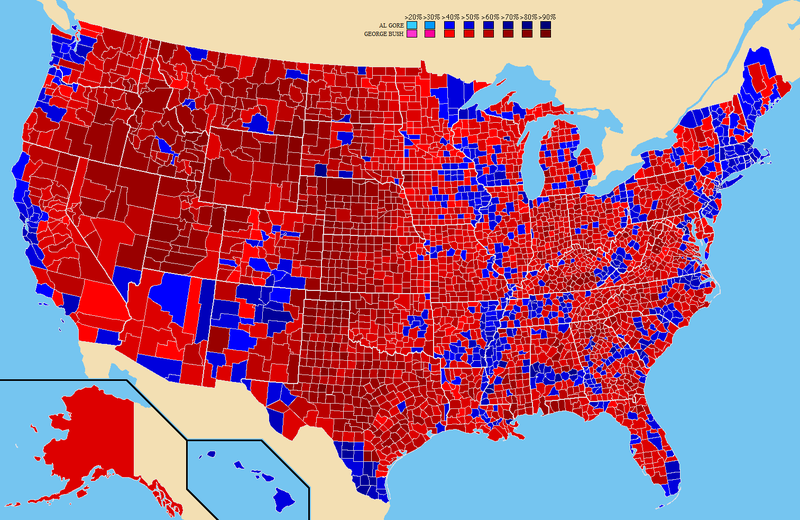

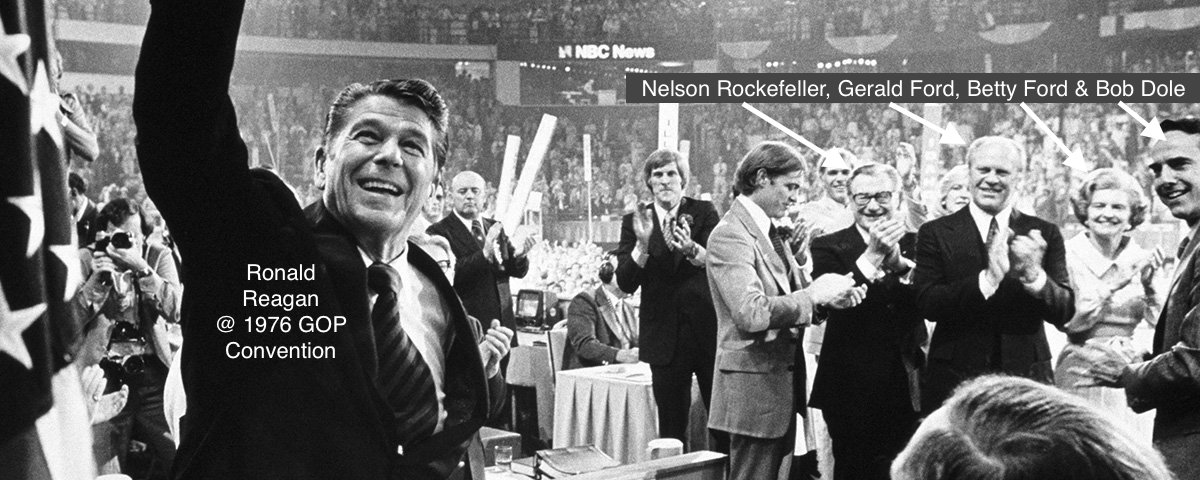

At the 1976 GOP convention — the last contested convention to date — Reagan nearly stole the nomination from Gerald Ford. With his telegenic charisma and jocular charm, the “Gipper” gave a rousing speech even as the party crowned the more moderate Ford as their candidate. Ford was an Eisenhower Republican whereas Reagan was a more conservative Goldwater Republican. His acting talent served him well in politics and provided built-in familiarity. Most famously, Reagan had played dying Notre Dame football player George Gipper in Knute Rockne: All-American (1940). 1980 was a watershed election, on par with 1932 in terms of swinging the American political pendulum back toward the right, just as ’32 had swung it to the left. Fusing optimism and patriotism, Republicans, for the first time since the New Deal, pried away a significant chunk of blue-collar workers. Many of these Reagan Democrats wanted to restore military pride or, in the case of some Christians, opposed the Democrats’ pro-choice abortion platform. The Supreme Court legalized abortion in Roe v. Wade (1973). Reagan tapped into public skepticism about government agencies and programs launched under Johnson in the 1960s like welfare, public housing, and food stamps, whereas Goldwater had taken on a Social Security program that was popular among working-class Whites. As we read in Chapter 16, one commentator said that “Goldwater lost against the New Deal, but Reagan won against the Great Society.” Reagan’s motto in 1980 was “Let’s Make America Great Again” but there weren’t red LMAGA hats.

One of Reagan’s first speeches after his nomination was at the Neshoba County Fair outside Philadelphia, Mississippi, where three civil rights workers were killed in 1964. Reagan and his advisers weren’t just throwing darts at a map and picking random towns instead of larger cities. While not endorsing segregation or violence, he used the occasion to remind the townspeople of how much he’d always appreciated their commitment to states’ rights in the context of a talk on the innocuous subject of education. Like many who went before him, he’d learn to couch racism in an anti-federal context, especially appealing to suburban racists who, like Reagan, obsessed over not being labeled as racist. This time-honored political trick is known as a dog-whistle (for whistles that dogs can hear but not humans, like coded messages that racists hear but not mainstream voters). The obvious advantage is plausible deniability. The rebel flags in the crowd were merely about “heritage” and the fact that everyone knew states’ rights in the South was associated with Jim Crow was coincidental. When his opponent Jimmy Carter accused him of racially-coded language Reagan was insulted and demanded an apology.

In the 1960s, Reagan likewise opposed the national government’s role in the 1964 Civil Rights Act and ’65 Voting Rights Act, which he denounced as “humiliating” the South and later tried to weaken as president. When confronted about race, his stock response was that he supported Jackie Robinson’s integration of baseball in 1947. Other times Reagan was more plain-spoken: as California’s governor, he appealed to Whites in areas like Orange County by denouncing the 1968 Fair Housing Act, arguing that “If an individual wants to discriminate against Negroes or others in selling or renting his house, it is his right to do so.” In a 1971 phone call to Richard Nixon that he didn’t realize was being recorded, he referred to African leaders as “monkeys…still uncomfortable wearing shoes,” in keeping with his characterization of American cities as “jungles” and frequent jokes about African cannibalism. (Likewise, when a supporter who hadn’t donated enough to his campaign asked John Kennedy for an ambassadorship, Kennedy purportedly said, “F*** him. I’m going to send him to one of those boogie republics in Central Africa.”) The key for politicians, though, is manipulating voters’ worst instincts. Nixon admired how Reagan played on the “emotional distress of those who fear or resent the Negro, and who expect Reagan somehow to keep him ‘in his place.’ ” It makes historians queasy to see this racism paired with Reagan’s promise of trickle-down economics (the idea that making the rich richer will ultimately create working-class jobs) because rich planters, the “one-percenters” of the 19th century if you will, paired those two in the antebellum South; except that poor Whites there never got anything out of the bargain except for their pride in being above enslaved workers and the right to serve on slave patrols. Playing on the “emotional distress of those who fear or resent the Negro” has been such a major theme in our history that it helps explain the entire evolution of America’s two-party system from the founding to the present.

But Reagan was craftier on-the-record by the late 1970s, exploiting the public’s resentment toward people who were taking unfair advantage of the welfare system. He asked working-class white audiences if they were tired of working hard for their paycheck then going to the grocery store and seeing a “strapping young buck” ahead of them in line with food stamps. Prior to the Civil War, “bucks” or “studs” referred to enslaved males that masters encouraged to reproduce with “wenches.” During his 1976 campaign, Reagan popularized the term welfare queen for women who took advantage of the government’s well-intentioned idea of paying single unemployed moms more than married couples. The term derived from an African-American Linda Taylor who, in 1974, was caught defrauding the government with multiple identities and sentenced to prison. Reagan’s audience understood who the strapping young buck and welfare queen were. He wasn’t going to beat people over the head with explicit racism (he wasn’t stupid), but neither was he going to leave the old southern Democratic voters and George Wallace supporters on the table. In an infamous 1981 interview (YouTube), South Carolina GOP strategist Lee Atwater explained how his party won over racists without sounding overtly racist.

Reagan was continuing with a variation on the GOP’s Southern Strategy, begun under Nixon to siphon off the old Democratic “negrophobes.” The concern over welfare abuse transcended race, though. In Hillbilly Elegy: A Memoir of a Family & Culture In Crisis (2016), J.D. Vance recalled how working-class Whites in the Appalachia of his youth resented other lazy Whites that ate (and drank) better than they did by staying on the public dole permanently, spurring the workers to abandon the Democratic Party.

Republicans gained control of the South, fulfilling LBJ’s prophecy about the Civil Rights movement, partly through various Southern Strategies on race, partly through their general limited government philosophy (including cracking down on welfare abuse among all races), and partly through their new alliance with Christian Fundamentalists. Fundamentalism had been growing since the 1970s and abortion, legal since Roe v. Wade in 1973, gave Republicans a wedge issue to galvanize their alliance, along with Christian nationalism. Consequently, many Reagan Democrats, North and South, Protestant and Catholic, crossed the aisle and voted GOP for the first time, regardless of their economic class and Republican hostility toward unions that would lack the right to collective bargaining in a free market. Economically, they were either willing to sacrifice their interests on behalf of outlawing abortion and strengthening the military or were convinced that wealth would trickle-down.

You need to be careful what you wish for in politics. Roe was a seeming victory for liberals but, like the Civil Rights movement of the previous decade, it further splintered the Democrats’ New Deal coalition. As of 1973, when SCOTUS ruled on Roe v. Wade, each party was split about evenly on the issue of abortion. It hadn’t yet become a polarizing issue either in terms of public awareness or partisanship. In fact, moderate Republican judges wrote Roe, just as they had Brown v. Board (1954). But Republicans siphoned off far more working-class Christians into the GOP than Democrats did pro-choice Republicans. Today, you can’t function as a Republican if you’re not pro-life or as a Democrat if you’re not pro-choice, and many voters in each party’s base stake out absolutist positions. It is the most defining litmus test in each party’s platform — more important than either’s take on economics, diplomacy, the environment or, more recently, democracy itself. But fifty years later, modern Republican voters are not uniformly pro-life. So, in another case of being careful what you wish for, Republican politicians are like the proverbial “dog that caught the car” after they overturned Roe in Dobbs v. Jackson (2022), especially after putting draconian trigger laws on the books in red states based on the assumption that it wouldn’t happen. The best political position for the GOP was just to oppose Roe, not actually overturn it.

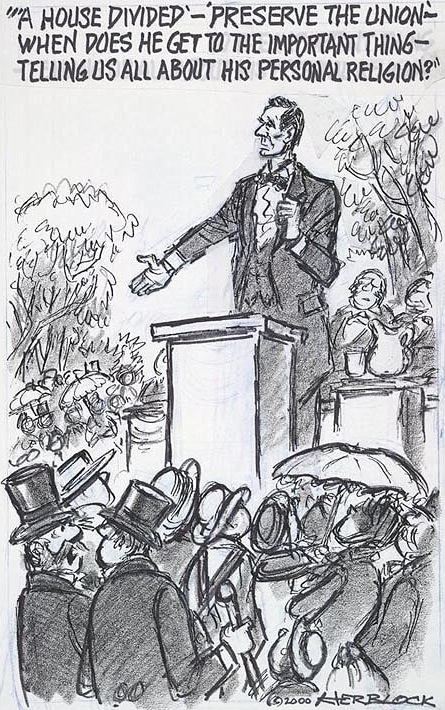

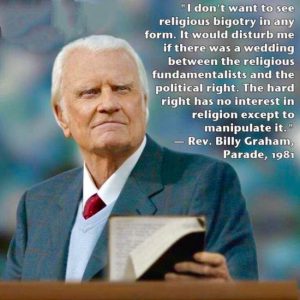

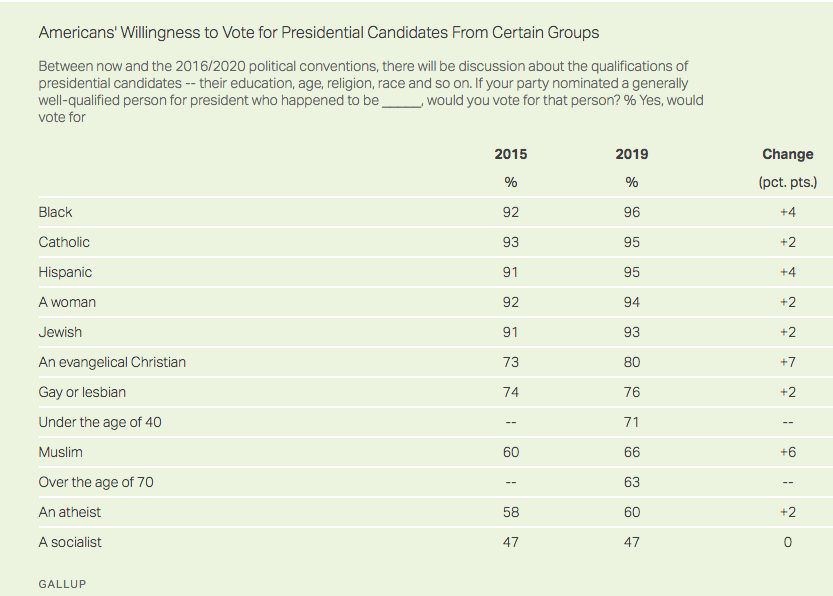

In the 1970s, evangelicals didn’t agree on whether this political alliance was a wise deal, given the amorality of politics. Jerry Falwell bridged fundamentalism and the GOP with his Moral Majority, but the most famous evangelist, Billy Graham (aka the “Protestant Pope”), who counseled every president from Harry Truman to Barack Obama, urged Christians to avoid partisanship (right) despite Graham’s earlier ministry having paved the way for the infusion of religion into politics. According to evangelical commentator David French, this alliance opened the door for the 21st-century Christian Right to support politicians who undermined its moral values, even without its members being aware of it. Conversely, influential conservative and 1964 GOP candidate Barry Goldwater didn’t want to dilute conservatism with too many “preachers,” arguing in 1981 that they could be uncompromising. Despite these misgivings, religion took on a revived role in American politics during the Carter-Reagan era and after. Carter was born-again and wanted to carry Christ’s message of peace into the real world. Reagan, too, was interested in the New Testament, especially its last book, the Book of Revelation, which he suspected might foreshadow an apocalyptic showdown between America and the USSR. He secured a lasting alliance between Christian fundamentalists and the Republican Party. Today no candidate could run for president without fully explaining his or her faith. Mormons (e.g., Mitt Romney 2012) and Jews are more or less welcome to join the sweepstakes along with Christians, and this 2019 Gallup poll suggests that other groups’ prospects are at least improving, while also underscoring that there’s no agreed-upon definition of socialism.

Reaganomics

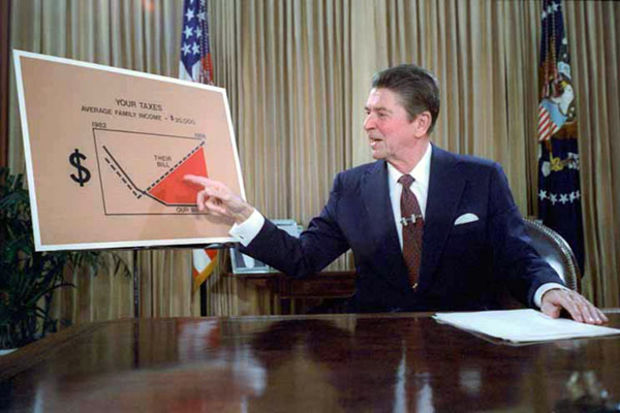

Economics, though, was where the right-wing Reagan set himself apart the most from Democrats. Whereas FDR wanted to jump-start the economy through government spending, Reagan’s supply-side economics reversed the concept of Keynesian demand-side stimuli, focusing not on government spending but on tax cuts, especially for the wealthy and corporations. Several major corporations were essentially on welfare throughout Reagan’s presidency because their tax rebates exceeded their tax bills. It’s difficult to tell how much the wealthy were actually paying in income taxes prior to 1980, but the top rate went from 70% when Reagan came into office down to 28% by 1988, so they were the biggest and most obvious beneficiaries of his election. Just as the New Deal philosophy wasn’t that new, drawing on ideas that Populists and Progressives had advocated for decades, so too, Reaganomics drew on ideas that conservative economists at the University of Chicago like Milton Friedman had promoted since WWII (aka the Chicago School of Economics).

Ronald Reagan Televised Address from the Oval Office, Outlining his Plan for Tax Reduction Legislation, July 1981, White House Photo Office-Reagan Library

Critics saw all this as a cover for power and privilege, increasing the gap between rich and poor. But was it “Reagan-Hood” as Reagan’s critics charged? In other words, did Reaganomics really steal from the poor and give to the rich, the opposite of the legendary Robin Hood? Yes and no. He helped the rich plenty, but his record was mixed on the poor. He reduced food stamps, school lunch subsidies, and most forms of student aid (e.g., Pell Grants), and he removed painkillers from disability coverage, leading to a black market in drugs like oxycodone. His reductions in publicly-funded mental health facilities increased homelessness. It’s hard to untangle these cuts from coded racist rhetoric. To the extent that race played a part in the pullback on public spending, which is impossible to prove or measure, it victimized poor Whites as collateral damage. However, spending continued through Reagan’s presidency on most of the core New Deal programs and even much of the welfare from the Great Society. Some tax burdens were shifted to the states but still came out of paychecks just the same. Reaganomics kicked off an era when Americans continued to spend on core entitlements (Social Security and Medicare) while voting themselves tax cuts.

Reagan’s budget director, David Stockman (right), a follower of Austrian free-market economist Friedrich Hayek (Chapter 9), quickly learned the limits of what a full-blown Reagan economic revolution would entail. Stockman, the “father of Reaganomics,” realized that since cutting most non-military spending would decimate “Social Security recipients, veterans, farmers, educators, state and local officials, [and] the housing industry…democracy had defeated the [free market] doctrine.” In other words, it would be political suicide to cut off all those demographics since they can vote. Senate Majority Leader Mitch McConnell reiterated this point in 2018 when Republicans had control of both chambers and the White House, meaning that, unless they’d been bluffing all these years, they could finally cut Social Security and Medicare all they wanted to balance the budget and offset tax cuts. That would’ve required legal changes rather than just lowering the amounts (apportionments) because those entitlements come under mandatory spending rather than discretionary, but they could’ve changed the law. The problem is that most GOP voters who favor lower spending and smaller government don’t actually want cuts to include Social Security or Medicare, just Medicaid for the poor. In an unprecedented burst of honesty, McConnell said the pain that comes with meaningful cuts makes it “difficult if not impossible to achieve when you have unified government” (translation: we need the Democrats to win back at least one chamber so that they can take the blame among a voting public that, collectively, wants higher spending for lower taxes). The truth is that democracies and budgets don’t dovetail well. To be precise, in America’s case they’re off by ~ 15% as measured by 2016’s $3.8 trillion in expenditures versus $3.3 trillion in revenue, and those numbers were even wider during COVID-19. 
Even consistently running huge deficits and burdening their grandchildren with the tab leaves the public complaining about insufficient services and high taxes. In 2020, Donald Trump asked a private audience at Mar-a-Lago “Who the hell cares about the budget?” The current answer is Republicans when a Democrat is in office and nobody when Republicans are in office.

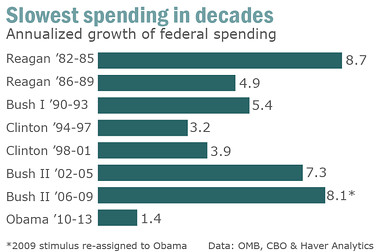

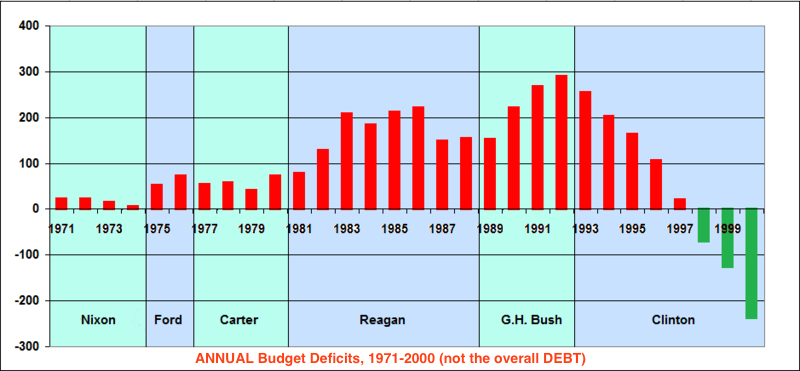

The result of Reagan’s concession to core New Deal programs, when combined with increased military spending and tax cuts, was ballooning debt. Rather than always trying to sell the multiplier effect that tax cuts would increase revenue, Reagan often candidly told American voters that he was willing to plunge the country into debt to win the Cold War if that’s what it took. And, Reagan likely knew (wisely if cynically) that overspending helps a sitting president and punishes his successors — a lesson his successors took to heart. George W. Bush’s VP Dick Cheney said Reagan proved that, in politics, “deficits don’t matter.” Adjusted for inflation, Abraham Lincoln and Franklin Roosevelt are the runaway leaders in growing the size and cost of the federal government because of the Civil War and World War II. But aside from them, what presidents oversaw the most growth in the size of the national government? Surprisingly, George W. Bush (87%) and Reagan (82%) in non-inflation-adjusted numbers (Source: USGovernmentSpending.com). This is as good a time as any to remind ourselves that, while presidents submit budget proposals under the Constitution, Congress is in charge of the nation’s purse strings as far as setting budgets, though presidents sign off. Given our current partisanship, it’s heartwarming that Reagan got along well with House Speaker Tip O’Neill (D-MA), but really their bipartisan budget compromises just meant mounting debt for future Americans.

Reagan’s supporters often claim that the debt-to-GDP ratio actually shrank under Reagan, meaning that the economy grew more than the debt, and the ratio of federal spending to the overall economy fell, but that’s not the case. The Debt-to-GDP ratio grew in the 1980s (at the top of the chapter we looked at annual spending vs. GDP rather than debt). According to the multiplier theory of supply-side economics, lower tax rates were supposed to stimulate growth enough to increase overall tax revenues even though tax rates dropped but that didn’t happen. Nonetheless, the economy took off on a long bull run, lasting through the late 1990s. By that measure, and a booming stock market, Reaganomics was a success.

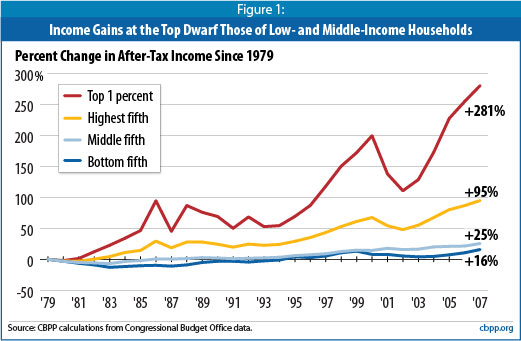

But did increased wealth “trickle down” to workers as supply-side advocates promised? Again, yes and no. Trickle-down economics isn’t something that’s true or false, as money is constantly flowing in both directions, including down in the form of salaries. Also, understand that some of the inequality charts you might see are skewed because they’re based on earnings before taxes that hurt the rich and government aid that helps the poor (ours on the left is after taxes). As explained in Rogé Karma’s optional article below, these stats are also complicated by our lack of reliable statistics on tax evasion (conservative scholars assume that wealthier Americans cheat less because they’re already rich). But we have some basic stats and the question is whether, as the political slogan implied, making the rich richer created a net gain for workers in relation to the rest of the economy. There’s no doubt the economy grew over the next twenty years, and the booming stock market of the 1980s and ’90s helped all workers tied to defined-benefit pensions and self-directed, defined-contribution 401(k) or IRA retirement funds, along with stimulating overall growth. As our graph shows, working classes didn’t suffer significant wage reductions on average between 1980 and 2007, just before the Great Recession. Wealthy people don’t necessarily earn money at the expense of the poor in a zero-sum game. The overall earning power of most workers stagnated, though, except for people in the upper 20%. Also, most workers didn’t stay at one job long enough to take full advantage of either type of retirement fund, and most weren’t investment-savvy enough to manage their own self-directed funds to the fullest advantage.

Many economists claim that it’s wrong to look at the economy like a zero-sum pie and ask who is getting the biggest piece because the pie itself is growing. That’s true to a certain extent and, yes, it’s true that America’s poor suffer more from diseases of abundance (diabetes, obesity, etc.) than hunger. But regardless of the size of the pie, it is finite at any given moment, and the gap between rich and poor widened between 1980 and 2019 (COVID), with most of the money trickling up. Really, it was more like a tidal wave than a trickle. While the rich have gained more proportionally than the working and middle-classes, the ultra-rich (top 1%) have gained far more than the poor, middle or even regular rich. The top 1% lost ground in the Great Recession of 2008-09, but when the slow recovery kicked in around 2010, they increased their lead over the bottom 99%. Much of that wealth is in the hands of entrepreneurs who’ve created jobs and products for the rest, but much of it has gone to investment bankers and hedge-fund managers who stash their earnings in offshore accounts to avoid paying taxes. Tax shelters, combined with the fact that taxes are lower on investments than earned income, mean that most wealthy now pay a lower effective tax rate than the middle and upper-middle classes and, if you don’t like it, you’re guilty of fomenting “class warfare” and favoring “totalitarian government.” By 2012, the richest 400 people in the U.S. had more money than the bottom 50% of the population and wages stood at an all-time low as a percentage of GDP. However, since COVID-19, working class wages have risen at a faster rate than upper classes. Still, 40+ years after the Reagan Revolution, the public is now skeptical enough about trickle-down economics that 21st-century conservatives have dropped the term from their campaign platforms while still favoring supply-side economics.

Another key to the Reagan Revolution was deregulation. Reagan was adamant in his aforementioned philosophy that government is not the solution to our problems; government is the problem. The deregulatory trend started under Carter in the 1970s but gained momentum under Reagan. He rolled back environmental and workplace safety (OSHA) regulations, loosening rules on pesticides and chemical waste, and got rid of many rules governing banking and accounting. The financial changes, especially the evolution of Special Purpose Entities (SPEs), contributed to problems like the Savings & Loan Crisis, Michael Milken’s junk bond-related fraud, and Enron in the late 1990s. The taxpayer bailout of corrupt Savings & Loans cost Americans 3-4% of GDP between 1986 and ’96. On the other hand, allowing accountants to “cook their books” may have stimulated the economy, and Milken used some of the money he stole to help fund medical research. But, ideally, one purpose of accounting, other than to run a business responsibly for your own sake, is so that other people (employees, investors, IRS) can get a feel for what’s going on. Reagan also cut funding for the Small Business Administration (1953-), the only government agency aimed at helping small entrepreneurs, but one that could be spun as yet more bureaucracy and a cost to taxpayers because of some failed loans.

Mergers were another hallmark of Reaganomics, as courts were hesitant to prosecute monopoly cases. Remember all the hullabaloo about trust-busting in the Progressive Era? An upstart football league called the USFL led by, among others, Donald Trump, sued the NFL in an antitrust case in 1986. A lower court determined that the USFL was right (the NFL did have a monopoly on pro football) and awarded the USFL a grand total of $1. While that particular case wasn’t influential, it symbolized the era. The Reagan Revolution led to a wave of mergers in the 1980s and ’90s that continues to this day. Unions didn’t do much better than trust-busters in the 1980s. Shortly into Reagan’s presidency, air traffic controllers went on strike. He had the FAA order them back to work and fired the more than 11,000 who refused. The graph below is striking, but remember that correlation doesn’t necessarily imply causation (see Rear Defogger #6).

Media Fragmentation

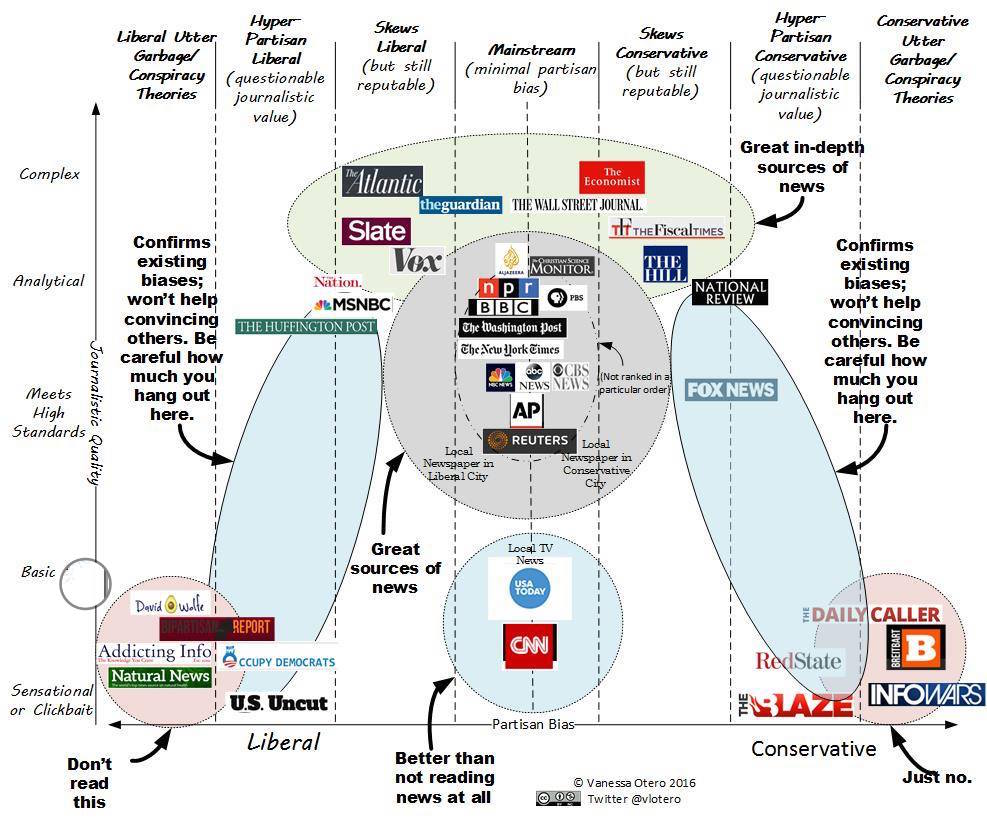

Deregulation also impacted media. This lengthy section is important since free speech is a double-edged sword within democracies and is currently challenging our own. Partly to reward Rupert Murdoch for helping him win New York state in 1980 via Murdoch’s New York Post, Reagan got rid of the law preventing simultaneous ownership of newspapers and television stations, allowing Murdoch to acquire Twentieth Century Fox and spin off FOX News in 1996. In 1987, the FCC (Federal Communications Commission) revoked its 1949 Fairness Doctrine requiring TV and radio broadcasts to be fair and “tell both sides of a story” when touching on controversial topics. There was bipartisan support in Congress to codify the doctrine into law, but President Reagan vetoed the bill. The Fairness Doctrine was arguably a violation of the First Amendment, but its retraction fragmented news into what it is today, where most conservatives and liberals just listen to their own tribe’s spins, with little center of gravity in the middle to rely on for “straight news.” Like the Newspaper War of the 1790s, when Federalist and Democratic-Republican papers just reinforced their own partisans’ views, we’ve returned to the narrowcasting journalism model. In the run-up to the Dominion Voting Systems defamation suit trial in 2021-23, for instance, FOX essentially argued for the First Amendment right to disinformation (they settled out of court). FOX claimed that, technically, they were just reporting other people’s misinformation about the 2020 election results. Anonymous influencers, whose claims are then shared by Trump and Elon Musk, are currently arguing on X that undocumented migrants are being registered to vote by the millions in the 2024 election, even though it’s virtually impossible to register without an SSN. 
SCOTUS is currently weighing whether or not disinformation, as opposed to misinformation, is protected under the First Amendment, but it’s a fine line because people can always argue later that they were just wrong. In the meantime, disinformation-for-hire is a booming industry for those looking to profit off our republic’s decline.

In 1988, lambasting what he later called the “Four Corners of Deceit” — science, government, media, and academia — Rush Limbaugh of Sacramento launched his radio show a year after the Fairness Doctrine deregulation. Today, many Americans think a cabal of those four groups is conspiring against them. Limbaugh, whose bombastic Manichaeanism echoed Charles Coughlin (Chap 9), Carl McIntire from the ’50s, and Joe Pyne in the ’60s (Chap 16), argued that feminism was launched to give unattractive women entry to society, called gays perverts and, once, at the height of the AIDS epidemic, played “I’ll Never Love This Way Again” in the background while mirthfully reading off the names of perished victims. He pushed the envelope racially, too, telling one black caller to “take that bone out of your nose and call me back,” saying everyone on composite wanted posters looked like black politician Jesse Jackson and that the National Basketball Association (NBA) should be renamed the Thug Basketball Association, and questioning Obama’s citizenship and calling him “Barack the magic negro.” But his millions of devoted “Dittoheads” interpreted his engaging banter as refreshing, courageous integrity in an era of irritating and stifling political correctness. In the 21st century, he and his offshoots feasted on the perceived excesses of woke politics like sharks on chum.

Limbaugh was irreverent, especially in his younger years, influentially playing rock and country songs as bumper music in and out of commercials, and connecting to listeners in a way that journalists hadn’t prior to deregulation, especially conservatives who didn’t have a voice in mainstream near-left media. His shows gave succor to conservatives in liberal enclaves like Seattle, San Francisco, or Austin. Limbaugh shaped contemporary conservative politics more than Republican presidents and reshaped media by blurring journalism and entertainment into so-called infotainment, as chronicled in the optional article below by Brian Rosenwald: “Limbaugh’s style would come to be reflected in everything from late night comedy shows to cable news channels to podcasts of every ideological flavor and style — the very things that define our political media in 2021. Whether one likes Rachel Maddow, Stephen Colbert, Joe Rogan, or Sean Hannity, he or she is engaging the media world created by Limbaugh.” Limbaugh saved AM radio when most music had migrated to FM and ushered in a wave of conservative talk show hosts, including future VP Mike Pence. Due to human nature, much of the public prefers infotainment over drier analysis if given the choice, while comedians like Colbert, Jon Stewart, John Oliver, and Greg Gutfeld wove substance into comedy to make substance palatable. Polls showed that people who voted for both Barack Obama and Donald Trump saw each as being more entertaining television than other candidates. Trump also benefitted insofar as many viewers got to know him as host of NBC’s Celebrity Apprentice from 2008 to 2015, just as many Ukrainians got to know Volodymyr Zelenskyy playing a president on TV. When Trump left office in 2021, ratings sank for all cable news, right and left.

Adversarial journalism profited from another de-regulatory law, the aforementioned 1996 Telecommunications Act signed by Bill Clinton that allowed for greater consolidation and vertical integration among communications companies. Crucially, Section 230 of the 1996 law exempts Internet providers and hosts from liability regarding most content on their platforms, but contributors to the platforms aren’t liable either. Currently, neither social media nor toxic anonymous message boards with Holocaust humor (e.g., 4chan > 8chan > 8kun) are legally accountable for events that result from their content, up to and including even mass shootings, political violence, and disinformation. Section 230’s broad immunity raises thorny issues involving the First Amendment. The optional article below by Jan Werner Müller cautions against blaming social media, in particular, for today’s problems. After all, we don’t blame telegraphs, phones, radios, TVs, and newspapers for past troubles like World War II. Yet, the Web has more capacity to manufacture crises and manipulate perceptions than older media. By comparison, courts have been fairly lenient toward newspapers over the last fifty years, generally erring on the side of protecting them from libel suits if they correct mistakes (e.g., Sarah Palin’s suit against the New York Times, NYT). But there’s at least a line there with disinformation that journalists can’t cross with impunity. Online versions of legacy media (newspapers, magazines, etc.) still can’t libel, but other platforms’ libel laws are, at best, murky. And, even on TV, MSNBC’s Rachel Maddow and FOX’s Tucker Carlson fended off defamation and slander suits, respectively, because judges ruled that their audiences understood their op-ed commentaries weren’t to be taken literally.

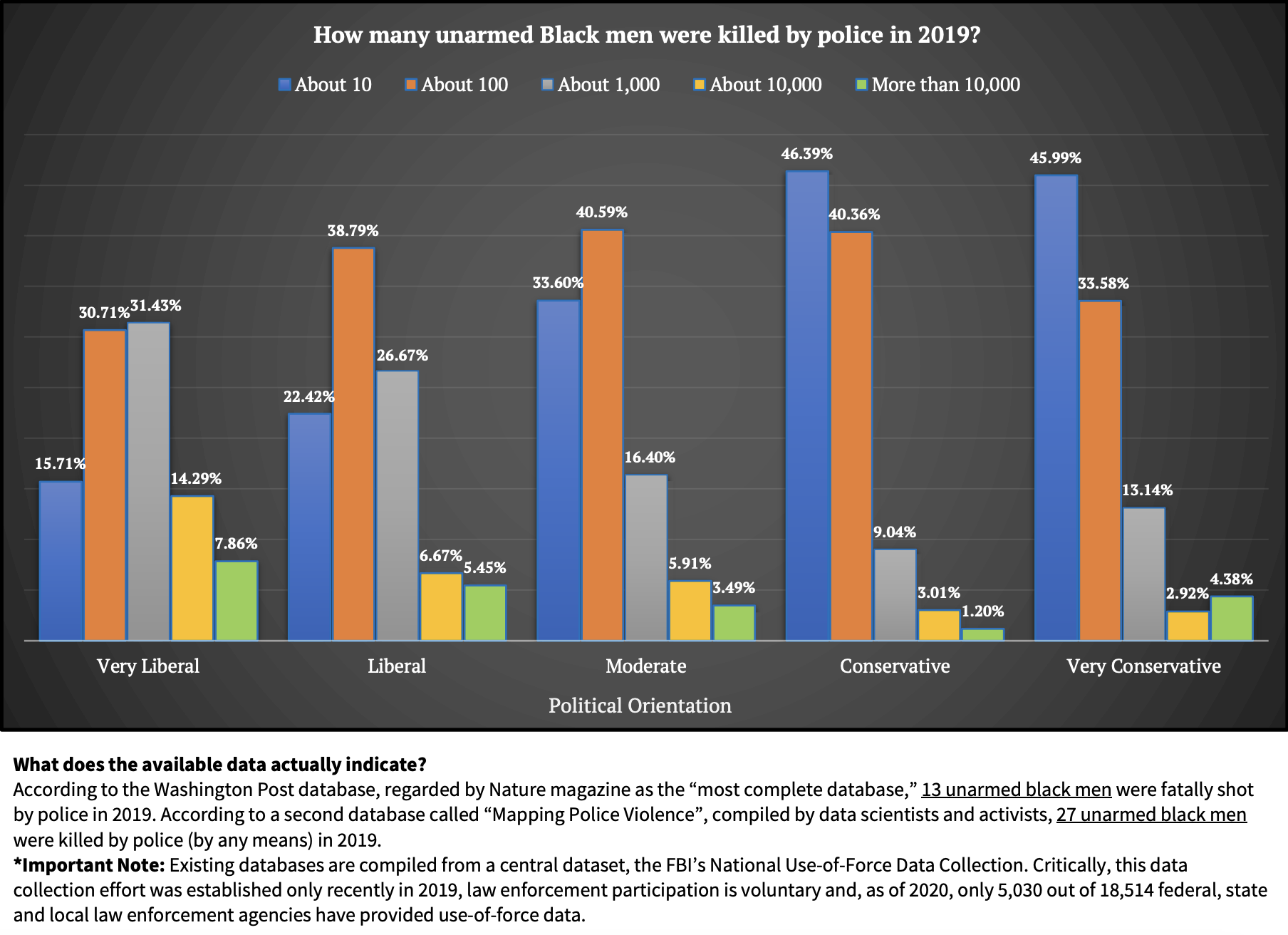

The Sinclair Broadcast Group brought a right-leaning if subtler approach to local news at the hundreds of network affiliates it bought in the South and Midwest. Much news now is what people used to refer to as op-ed (for opinions and editorials) within each tribe’s echo chamber or filter bubble and the respective chambers don’t report on the same stories. Blindspot is a good reminder of this selection bias (Rear Defogger #21). Another advantage that op-eds have for news outlets is that they’re cheaper to fund than investigative journalism. Op-eds are beneficial to democracies and fine in their own right (as is bias) but, after 1986, unwary viewers weren’t always distinguishing between fact and opinion or recognizing bias, except in others, and media companies were cashing in. Rolling Stone journalist Matt Taibbi described modern media firms as working backward, first asking “How does our target demographic want to understand what’s just unfolded? Then they pick both the words and the facts they want to emphasize.” FOX News’ belated disinformation after the 2020 election, after first reporting accurately, was a dramatic example. But it’s true across the political spectrum. Since it’s human nature to suffer from anchoring and confirmation bias and easier to confirm preconceptions, most people just choose their “truths” from a virtual buffet table of options and some politicians exploit the “post-truth” era. If deregulation opened up television and radio, the advent of the World Wide Web obliterated any hope of an agreed-upon reality. That can be true even when opposing sides consult the same source, as is the case with the Washington Post’s Fatal Force crime database, based on FBI statistics.

Online, the new model often didn’t go far beyond maximizing “eyeballs” or “hits,” with no profit motive to teach reality or encourage intelligent debate because many people find that boring. There’s no money in pointing out, for instance, that large numbers of Americans currently favor reasonable compromises on guns and abortion, so uncompromising voices speak for the left and right. Absolutists are “good copy” as they say in journalism. And few people will subject themselves to the emotional trauma of hearing views they disagree with. Just as trees are worth more to the economy as lumber than as absorbers of CO2, Americans are worth more to media as hive-minded partisans. Algorithms are designed to learn your leanings and emotional triggers and reinforce what you already think. We all look at different feeds tailored to what robots think will make us happy or interest us, which keeps us online longer. Like the Yellow Journalism of a century ago, the more they feed us what we want, the more money they make on advertising. The more you show an inclination toward conspiracy theories, the more conspiracies you’ll see. Imagine if each student saw different chapters in this textbook as artificial intelligence altered them to suit their preferences in exchange for advertising revenue. That’s what’s already happening on social media, which is where many people get their news and their views of history (aka e-history). If 36 students in this course section researched the war between Israel and Hamas, not only would they get 36 different versions from different outlets, they’d get slightly different versions from the same outlet. That doesn’t mean that it’s impossible to understand events, but you need to triangulate your sources and understand both their leanings and your own, which takes effort.

Whereas the best minds two generations ago worked on weapons of mass destruction, today’s work on syncing you with, and addicting you to, social media and keeping you in a feedback loop. Social media offers small emotional jolts as rewards, like a slot machine dropping you occasional quarters. The most valuable commodity in today’s economy is not gold, crypto, or oil, but rather our limited attention spans and the personal data companies can collect when we’re engaged. Personal data is our coin of the realm (currency), as they said in 18th-century England. This “attention extraction” model is nothing short of the biggest business ever and largely responsible for the booming stock market of the 21st century. Companies often have no clue where their ads land since they’re funneled through the search engine to wherever there’s traffic. Hopefully, ex-Facebook executive Tim Kendall overstated things when he prophesied in the 2020 docudrama The Social Dilemma that social media will inevitably lead to civil war. But it has already enabled ethnic cleansing in Myanmar, degraded civic discourse, fueled all manner of conspiracy theories of the type formerly limited to tabloids, and hastened civil unrest around the world, including insurrection against the U.S. government. When social media’s algorithms smell smoke, they rush to the fire and pour gasoline on it. Ex-Google designer Tristan Harris called social media a “race to the bottom of the brain stem.”

Facebook’s own research revealed how the company could impact levels of political violence and discord in countries like Hungary, Poland, Spain, and Taiwan almost as if adjusting a thermostat (countries vary in how much censorship they allow). In 2021, whistleblower Frances Haugen testified before Congress that the company altered its formula to sow divisiveness and that its own research revealed that Instagram contributed to anxiety and self-harm, especially among teenage girls. Social media has also worsened adolescent bullying, as victims can’t escape at the end of the school day. The Chinese company TikTok limits exposure for Chinese teenagers to 40 minutes per day, whereas American teenagers are now pushing ~100 minutes per day. Extra sleep deprivation alone contributes to psychological problems, regardless of content. Many of the industry’s inventors are understandably feeling guilty as they initially set out to just get rich while we pushed “like” for puppy videos and pictures of our cousins’ Grand Canyon trip. Chris Wetherell lamented after his invention of the Retweet button that “we might have just handed a four-year-old a loaded weapon.” In an optional article below, Jonathan Haidt explains why a better metaphor might’ve been darts and how small subsets on the left and right used these darts to dominate public discourse in a way that’s silencing the center and weakening the fabric of American society.

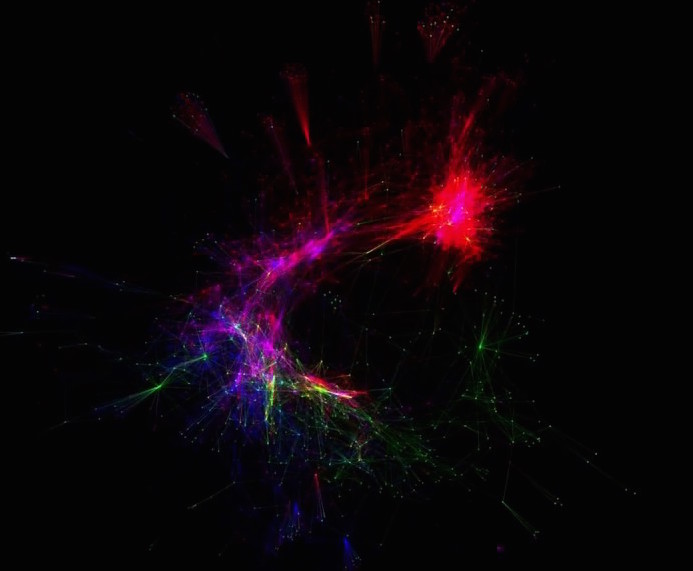

Much of our political discourse is Tweeting or venting on blogs with like-minded people or having profanity-laced exchanges with faceless adversaries in comment boxes that go nowhere. Former Facebook VP Chamath Palihapitiya said, “We have created tools that are ripping apart the social fabric of how society works.” For conservative columnist David Brooks, the Web accelerated the longstanding “paranoid style” in American politics that we touched on in Chapter 16. Now, we mostly just talk past each other, as shown in this image that, contrary to first impressions, wasn’t taken by the Hubble Telescope but rather purportedly mapped 2016 election-oriented traffic in the Twitterverse:

ELECTOME Showing Tweets About 2016 Presidential Election With Trump Supporters in Red, Clinton in Blue, Undecided in Yellow, and Pink Interaction Between Clinton and Trump Supporters. Source: Massachusetts Institute of Technology

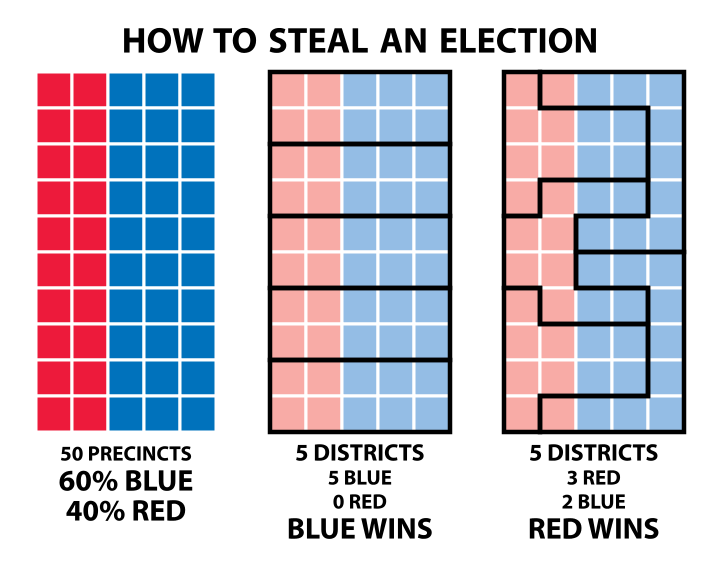

What can we do to mitigate this dystopian development? In addition to the basic responsibilities of citizenship, like voting and paying taxes, Americans now have to filter news to find out what’s going on. Judge theories based on the quality of evidence, not the quantity (see Rear Defogger #25 on the tab above). “Do your own research” sounds like good advice (as educators, we encourage independent thinking), but, online, it’s a hop-skip-and-a-jump from D.Y.O.R. to just choosing your own reality. Going further out into the fringes of the Web doesn’t mean you’re getting closer to the truth. If you have any genuine interest in reality, then the first thing you should research is how to do research. That starts with examining evidence (primary sources) when possible and humble open-mindedness toward experts, who aren’t necessarily wrong or conspiring against you, but usually imperfectly acting in good faith. Talk to, or email, a reference librarian who can direct you to the best online sources if you can’t visit a library. Guard against the “beginner’s bubble” of initial over-confidence that often accompanies D.Y.O.R. If you find the prospect of talking to a reference librarian too daunting, nerdy, or time-consuming, the key with most online sources is cross-checking other sites. If you’re considering dabbling in reality or are reality-curious, check your source’s reputation at Media Bias/Fact Check. English poet Alexander Pope, most famous for “to err is human; to forgive, divine,” also wrote that “a little learning is a dangerous thing.” Most importantly, read smart people across the spectrum.