Innovation

Innovative and technological advances are occurring at a dizzying, ever-accelerating pace, opening up exciting opportunities, demanding new digital skills, and raising questions and concerns.

On this page you will find definitions, writings, and links to articles and videos spotlighting emerging ideas and technology, providing clarity or insight around complicated issues, and offering additional food for thought surrounding topics relating to digital fluency and innovation.

of CEOs believe innovation is critical to growth

McKinsey & Company

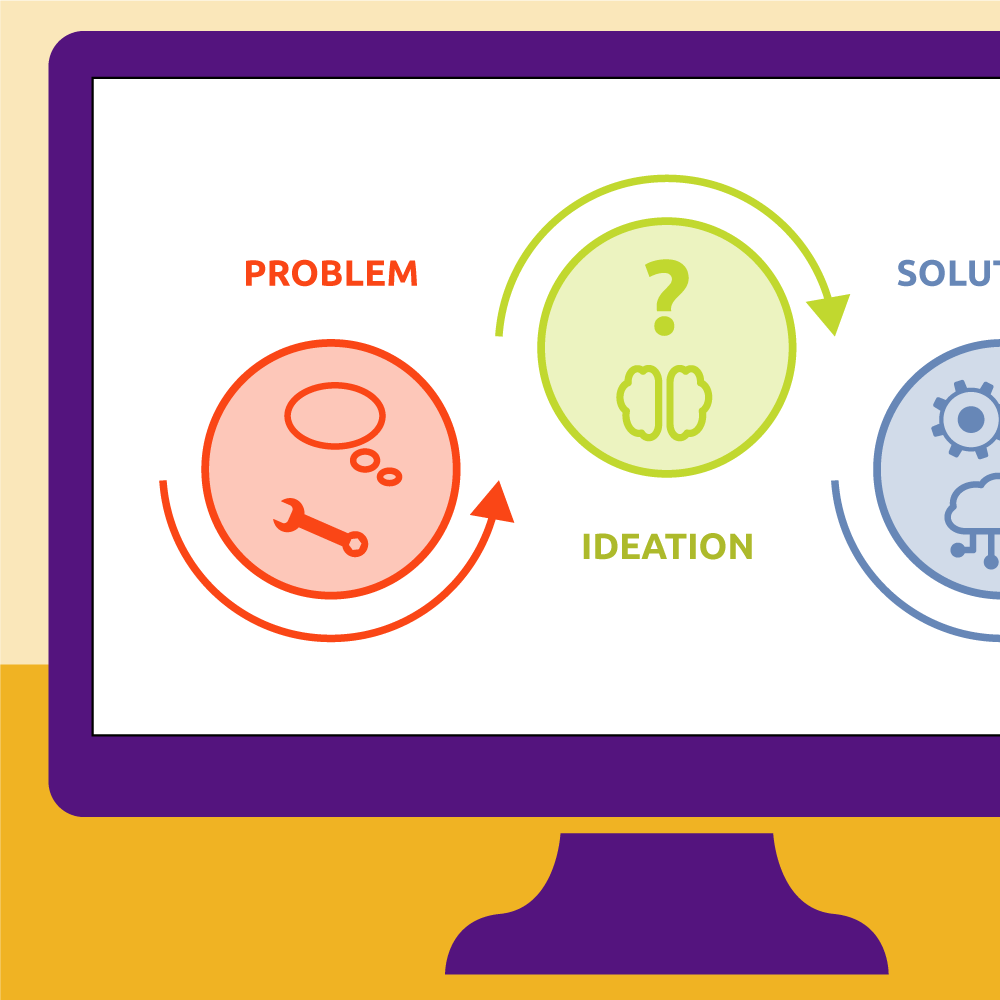

Digital Fluency and Innovation go hand in hand

What it means to be Digitally Fluent in the 21st century

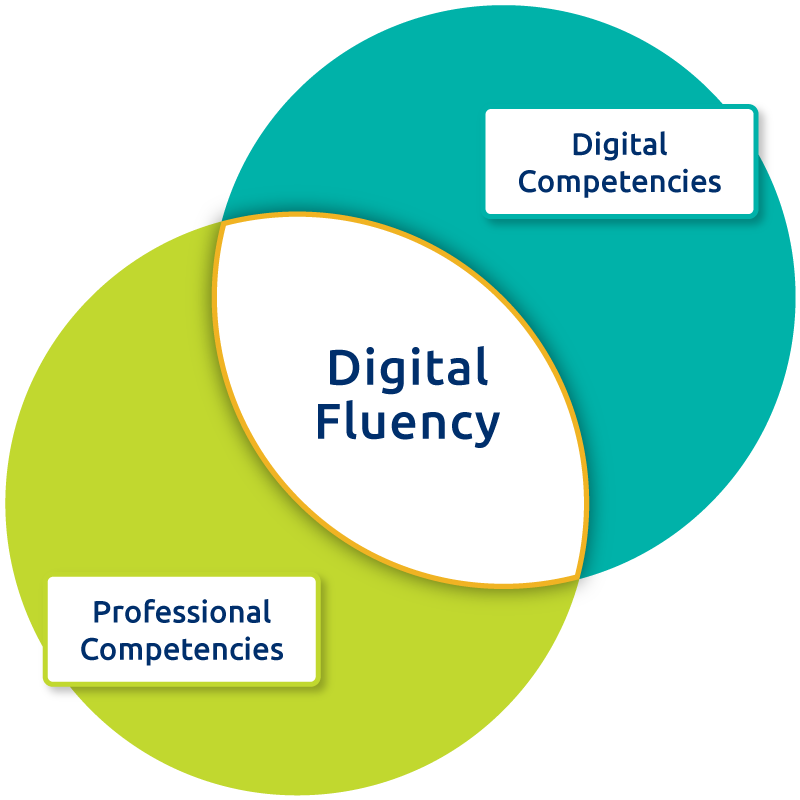

To be digitally fluent in today's workplace is to have deep knowledge of many tools and to know what technology to use to best achieve one's goals. It is also the ability to effectively transfer digital skills from one technology to another to problem solve and communicate. Finally, digital fluency requires both digital and professional competencies.

Examples of digital competencies might include adept use of word processing, presentation, and spreadsheet tools, ability to manage, analyze and visualize data, web and social media skills necessary to increase one's presence on the internet, problem solving skills using systems thinking and AI, and project management.

In addition to strong digital skills, businesses want employees who have professional competencies such as critical thinking, oral and written communication skills, creative thinking, problem solving, teamwork, and ethical reasoning.

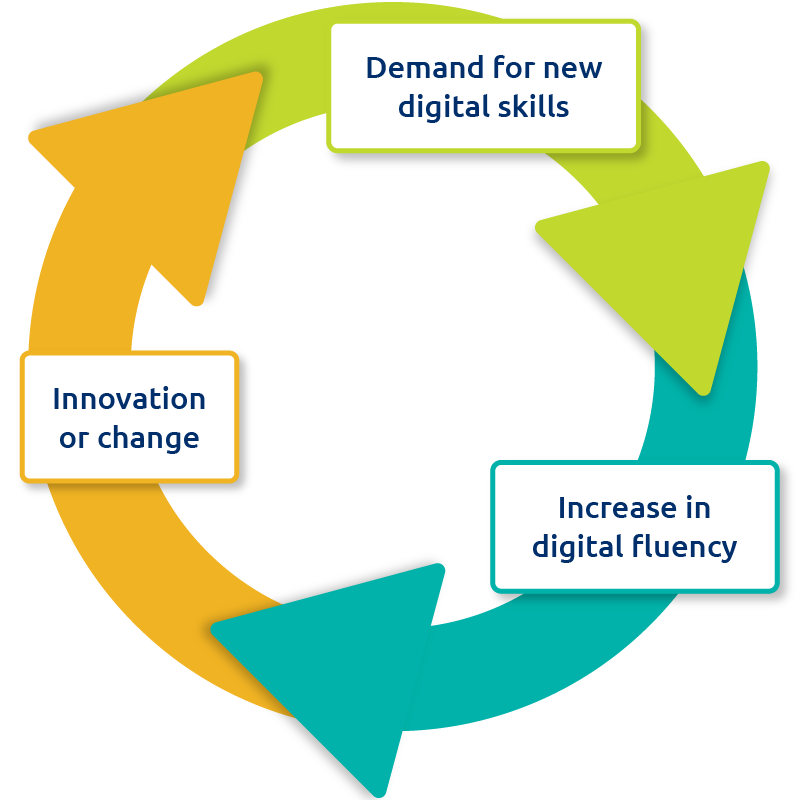

Innovation and Digital Fluency drive each other

Keeping digital fluency skills current requires us to be lifelong learners and adapt to new technology. Societal changes (or events) and innovations in technology drive demands for updated or new digital skills. Increasing access to digital fluency education closes skills and equity gaps and helps employers fill job vacancies. Having a digitally fluent workforce generates more opportunities for innovation, and so the cycle continues.

Innovation mindset of U.S. employees is five times higher in companies that have a robust culture of equality, compared to least-equal companies.

apnews.com

Technical terms you may encounter

Remaining digitally fluent requires curiosity and the willingness to be a lifelong learner to keep up with new innovations and technological advances. Here you will find definitions of terms you might find on this website, in the news, or on the internet. Many of the definitions have links to articles that you could read to learn more about the term being defined. These definitions and links are meant to be starting points, and this list will continue to grow.

A

The theory that technological growth is exponential, and that advancements in technology, along with the increasing adoption of those advancements, make it easier and faster to improve technology.

Some worry that humans eventually won’t be able to keep up with the accelerating change. Others argue that change could only accelerate at the pace humans can tolerate as a whole, because other concerns, like societal and environmental needs, would balance out or hinder the advancements.

Here are some links to articles and videos about the exponential growth of technology:

How Fast is Technology Advancing? (2023) – article from Zippia.com. Lots of stats and graphics.

Half of All Companies Now Use AI – short video from World Economic Forum (1:36 minutes), brings up ethical concerns

Why Is Technology Evolving So Fast? – article from Tech Evaluate

The Theory of Accelerating Change – YouTube video from THUNK (9:23 minutes). Presenter argues that

Ethics of AI

A set of principles used to guide moral and responsible use of artificial intelligence. Humans are still grappling with what those principles should be and how to enforce them as AI use grows and increasingly impacts life.

Concerns include:

- Is AI biased? Will it be used to discriminate? Will it be representative of everyone, or just those in power?

- What about Deepfake AI? Should limits be put on what could be produced? How do we control synthetically-produced content when it is used to change/represent/steal the identity of real people?

- Will AI replace human jobs or even entire career fields?

- Will AI steal (or be used to steal) creative works (art, writing, music, film, etc.)?

- Will machines (or bad people using machines) threaten our privacy, safety, democracy, and/or well-being?

- What is the environmental impact of servers requiring immense resources (power and water) to operate?

- Will AI become monopolized, with only a few organizations (like Google and Microsoft) owning all or most of the AI systems?

- How much control should we give to AI over how we live our lives?

These are just a few of the many concerns ethicists, scientists, educators, engineers, leaders, artists, and more have raised, and as AI weaves or bumps into more areas of our lives, additional concerns will surely pop up.

Want to learn more about AI Ethics?

Here are links to an article and a YouTube video, to get you started:

AI Ethics: What It Is and Why It Matters – article from Forbes.com

What is AI Ethics – YouTube video from IBM (6 minutes long)

An algorithm is a set of step-by-step instructions to do something. In math this could be steps used to solve a long division problem. In everyday life this might be steps typically done when tying shoes or getting ready in the morning to go to work or school. In computer programming an algorithm tells the computer how to solve a particular problem.

The parts of a computer algorithm usually consist of input, processing, and output. More sophisticated computer algorithms may also involve a stage to store data for later use.

In artificial intelligence, algorithms are more complex. AI algorithms take in data, use that information to learn and adapt, and then perform tasks based on what has been learned from that data.
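As a minimal sketch of the input, processing, and output stages described above, the following Python function carries out long division one digit at a time, the way it is done by hand. The function name `long_division` is chosen here for illustration only.

```python
def long_division(dividend: int, divisor: int) -> tuple[int, int]:
    """Divide two whole numbers step by step, as in hand long division."""
    if dividend < 0 or divisor <= 0:
        raise ValueError("this sketch expects dividend >= 0 and divisor > 0")

    quotient_digits = []
    remainder = 0
    # Processing: bring down one digit at a time, from left to right.
    for digit in str(dividend):
        remainder = remainder * 10 + int(digit)
        quotient_digits.append(str(remainder // divisor))
        remainder = remainder % divisor

    # Output: the quotient and whatever is left over.
    return int("".join(quotient_digits)), remainder


# Input: the two numbers to divide.
quotient, remainder = long_division(1234, 5)
print(quotient, remainder)  # 246 4
```

The same three stages appear in far larger programs; only the processing step becomes more involved.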

Here are some articles you could read to learn more about algorithms as they apply to computer programming and artificial intelligence:

Artificial Intelligence (AI) Algorithms: A Complete Overview – article from Tableau

AI vs. Algorithms: What’s the Difference – article from CMSWire

Also called machine bias, as in machine learning bias. AI Bias occurs when human bias is introduced into the training data or AI algorithm.

Governments, organizations, businesses, social media and entertainment platforms, and more, are increasingly using artificial intelligence (AI) to filter, make predictions, identify, and create. If the AI systems contain biases against groups of people, how might those biases potentially result in issues of fairness, transparency, and equity?

“It’s important for algorithm operators and developers to always be asking themselves: Will we leave some groups of people worse off as a result of the algorithm’s design or its unintended consequences?” Brookings Institution

Ways biases could be introduced to AI algorithms include:

- The data used to train AI systems may contain historically flawed information about groups of people. For instance, information taken off of the internet might contain historically racist or sexist references and/or harmful stereotypes.

- The people doing the training (engineers, scientists, etc.) may introduce biases, depending on the data they used to train the AI.

- Underrepresentation of groups of people (race, age, sex, culture, location, etc.) could also cause biases, by excluding them. An example would be to not show women, blacks, or Hispanics in STEM career fields.

Want to learn more about algorithmic bias? Check out these articles:

Why Algorithms Can Be Racist and Sexist: A Computer Can Be Faster. That Doesn’t Make It Fair. – article from Vox

Algorithmic bias detection and mitigation: Best practices and policies to reduce consumer harms – article from the Brookings Institution

Algorithmic Bias: Why Bother? – article from California Management Review | University of California, Berkeley

How AI Reduces the Rest of the World to Stereotypes – article from restofworld.org

John McCarthy first coined the term “Artificial Intelligence” in 1956, defining it as “the science and engineering of making intelligent machines.”

Artificial intelligence (or AI) refers to computer intelligence, as opposed to human intelligence. It is the ability of a computer to perform or improve upon specific tasks that the human mind could do.

Three Stages of AI

AI experts typically distinguish between three evolutionary stages of intelligence that a machine can acquire: Weak, Strong, and Super AI.

Weak AI (also referred to as Artificial Narrow Intelligence, or Specialized AI) is the most common type of AI that we see today. It is AI that is trained to do specific tasks under constraints. Examples of Weak AI include:

- Smart assistants such as Siri or Alexa

- Facial recognition

- Search Engines like Google or Bing

- Generative AI like ChatGPT or DALL-E

- Self-driving cars

Strong AI (also called Artificial General Intelligence (AGI) or Deep AI) is the second stage in the evolution of AI, and does not currently exist. Strong AI would be artificial intelligence that is equal to what the human mind could do and that could complete many different tasks as opposed to one. Strong AI would also exhibit characteristics such as:

- Self awareness or consciousness

- Ability to independently solve problems

- Ability to make decisions for the future based on observations and experiences of the past

- Ability to understand emotions, beliefs and thought processes

Super AI or Artificial Superintelligence (ASI) is the third evolutionary stage of AI, and is merely hypothetical at this point in time. The stuff of science fiction books and movies, this type of AI would surpass everything that humans could do and also have its own emotions, desires, beliefs and needs.

Four Types of AI

In addition to the three stages of AI (weak, strong, and super), there are also four types of AI: Reactive, Limited Memory, Theory of Mind, and Self Aware. Currently only Reactive and Limited Memory types of AI exist, but scientists and engineers are actively working on creating the other two types.

- Reactive – performs a narrow range of pre-defined tasks. Input and output with limited learning. (Weak AI)

- Limited Memory – Computer is trained (machine learning), using previous data and pattern analysis to make predictions. (Weak AI)

- Theory of Mind – AI system that recognizes and adjusts its behavior based on the thoughts and feelings of humans. Does not currently exist. (Strong AI)

- Self Aware – AI is not only aware of the thoughts and feelings of humans, but also aware of its own emotions and needs. Does not currently exist. (Super AI)

Links to learn more

Artificial Intelligence is a vast topic and there are tons of articles and videos about AI to be found on the internet. Should you wish to dive deeper, here are links to articles and a YouTube video, to get you started:

Types Of Artificial Intelligence | Artificial Intelligence Explained | What is AI? YouTube video by edureka! (12:50 long)

What is Artificial Intelligence (AI)? How does AI work? – article from BuiltIn.com

AI is a Buzzword. Here are Real Words to Know – article from Medium.com

AI Hallucination refers to when AI (such as ChatGPT or DALL-E) creates results that are unanticipated, untrue, or not following any data it was trained on.

While we want and expect novel results from text-to-art generative AI models such as DALL-E 2 or Midjourney (like purple flying cats or alien worlds), we don’t want made-up statements from an advanced chatbot like ChatGPT. However, OpenAI, the creator of ChatGPT, has stated that while ChatGPT 4 is more accurate than previous versions, it still has an error rate of 15-20%, and they caution users to double-check the results. “Care should be taken when using the outputs of GPT-4, particularly in contexts where reliability is important.” Internal factual evaluation of GPT models, source: OpenAI

Why does hallucination occur?

There are a number of reasons AI hallucinates. Here are a few:

- The data the AI model was trained on is incomplete. ChatGPT was trained on information going up to 2021, so if you were to ask who won the World Cup in 2022, it would be unable to factually answer the question.

- The data the AI model was trained on is biased. There is a lot of content on the web that is racist, sexist, or otherwise biased, and an AI trained on that content could give answers that reflect those biases.

- The data the AI model was trained on is incorrect. There is a lot of information on the web that aligns with conspiracies, or conflicts with scientific fact or consensus.

- Sometimes errors occur because the AI doesn’t understand the question due to how it was worded. Whereas a human might ask for clarification, a computer just generates a response matching what it calculates as the most statistically-probable answer based on the association of a string of words.

Fact-check your responses

ChatGPT and other generative AI models are very confident in their responses – regardless of whether those responses are true.

Here are some articles and videos to read and watch to learn more about AI Hallucinations:

AI hallucinations explained – YouTube video from Moveworks

What are AI Hallucinations, and how do you prevent them? – article from Zapier.com

What is an AI Hallucination, and how do you spot it? – article from MakeUseOf.com

B

Bias, as a general term, is included here because its presence can significantly impact technical work, leading to flawed designs, inaccurate results, and unfair outcomes.

Bias is a prejudice or inclination that influences an individual’s thoughts, feelings, and actions, often in an unfair or illogical way. It can lead to distorted perceptions, judgments, and decisions, deviating from accurate, rational, or equitable assessments.

Biases can be conscious or unconscious, and they can arise from various sources, including cognitive processes, cultural influences, personal experiences, and societal attitudes.

Types of Bias

- Cognitive Biases: These result from the brain’s information processing shortcuts and limitations, such as confirmation bias, anchoring bias, and hindsight bias.

- Social Biases: These involve prejudices based on group membership, such as racial bias, gender bias, or ageism.

- Implicit Bias: Also known as unconscious bias, these are automatic, unintentional, and deeply ingrained attitudes or stereotypes that affect our thoughts and actions.

- Explicit Bias: These are conscious and deliberate prejudices or attitudes that people are aware of and express openly.

Sources of Bias

- Cognitive Shortcuts: The brain uses heuristics to simplify complex tasks, which can lead to biases.

- Cultural Influences: Societal norms, values, and attitudes can shape an individual’s biases.

- Personal Experiences: Unique life events and interactions can contribute to the formation of biases.

- Stereotypes: Oversimplified and generalized beliefs about groups of people can lead to biased thoughts and actions.

Effects of Bias

- Distorted Perceptions: Biases can alter how we perceive and interpret information.

- Poor Decision-Making: Biases can lead to illogical or suboptimal decisions.

- Discrimination: Biases can result in unfair treatment, prejudice, and inequality.

- Strained Relationships: Biases can negatively impact interpersonal and intergroup relationships.

Mitigating Bias

- Awareness: Recognizing and acknowledging biases is the first step in addressing them.

- Education: Learning about different types of biases and their effects can help reduce biased thoughts and behaviors.

- Exposure: Interacting with diverse groups of people can challenge and diminish biases.

- Deliberate Effort: Consciously working to overcome biases and change beliefs and attitudes.

Synonyms of Bias: bigotry, favoritism, intolerance, preconceived idea/notion/opinion, prejudice, unfairness.

Antonyms of Bias: equitableness, fair-mindedness, justice, neutrality, objectivity, open-mindedness, tolerance.

Here are some articles to learn more about Bias:

Unconscious Bias: 16 Examples and How to Avoid Them in the Workplace

C

There are many definitions of critical thinking but essentially it is the ability to question and evaluate information objectively, looking for assumptions or biases (yours or others). You may need to do research to find answers to a question, or to make your own informed opinion, rather than accept information at face value from a single source.

Many employers seek out job candidates who have strong critical thinking skills. Indeed.com has a good article about the importance of critical thinking in the workplace.

D

Data analytics involves examining data to find trends and solve problems. Often a process is followed, including:

- Defining what information is needed and why

- Gathering/finding the data

- Inspecting/verifying/validating the data

- Analyzing the data to find trends/patterns/relationships. This step could also involve modeling or visualizing the data to better understand it

- Sharing/presenting the data – visualization may occur in this step to make the data understandable, engaging, and to tell a story about the data

- Next steps – what do you do with the data findings?
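The steps above can be sketched in a few lines of Python. This is only an illustration: the monthly sign-up counts are made up, and the helper names `validate`, `analyze`, and `present` are hypothetical rather than part of any particular analytics tool.

```python
from statistics import mean

# Define: how did monthly sign-ups change over the first half of the year?
# Gather: made-up monthly counts (None marks a missing reading).
raw = {"Jan": 120, "Feb": 135, "Mar": None, "Apr": 160, "May": -5, "Jun": 190}


def validate(data):
    """Inspect the data: keep only readings that are present and non-negative."""
    return {month: n for month, n in data.items() if n is not None and n >= 0}


def analyze(data):
    """Find the average and the change from the first to the last valid reading."""
    values = list(data.values())
    return {"average": mean(values), "change": values[-1] - values[0]}


def present(data):
    """Build a simple text bar chart, one '#' per 10 sign-ups."""
    return "\n".join(f"{month} {'#' * (n // 10)} {n}" for month, n in data.items())


clean = validate(raw)
summary = analyze(clean)
print(present(clean))
print(summary)
```

Deciding what to do with the missing March reading and the impossible May reading is itself part of the validation step; here they are simply dropped.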

Want to learn more about Data Analytics?

Digital Fluency & Innovation at ACC offers a microcredential in Data Analytics – check it out!

Artificial Intelligence > Machine Learning > Deep Learning

Deep Learning is a subfield of Machine Learning, which is a subfield of Artificial Intelligence. Deep learning is a type of machine learning that uses neural networks to process and analyze complex data, looking for patterns. Because the data is complex, the neural networks have more than three layers of nodes.

Deep learning requires an incredible amount of resources (monetary and environmental) to run, and there are concerns about sustainability.

Applications where deep learning is used include virtual assistants, driverless cars, digital image restoration, cancer detection, language learning apps, credit card fraud detection, and satellite imagery.

Here are some articles to learn more about Deep Learning:

AI vs. Machine Learning vs. Deep Learning vs. Neural Networks: What’s the Difference? – article from IBM

What is Deep Learning? 3 things you need to know – article from MathWorks

Deepfakes are fake audio recordings, images, or videos that are created by artificial intelligence. They are not real. They can be used for comedic or artistic purposes (the Pope in a puffer jacket, as an example), but often they are used to spread misinformation, or for harm or revenge. DeepTrace Labs found that 96% of deepfake videos posted online in 2019 were pornographic, targeted toward actresses and female musicians, and were almost always nonconsensual. The majority of the remaining 4% of deepfake videos found online were of a non-pornographic nature and were of men.

Problems with Deepfakes:

- They can be used to spread misinformation – A person or organization wishing to do harm could make a deepfake video showing a famous person or politician saying something fake to spread lies or to hurt that person/politician’s reputation.

- They can erode people’s confidence that what they are looking at, or listening to, is true – People tend to think they can detect deepfakes when often the opposite is true. If people are repeatedly told what they are looking at or hearing is fake then they won’t know what to trust and will doubt content that is real and even necessary to believe.

- They are getting harder to detect – As AI capabilities continue to grow and learn, it will be harder to determine whether an image, audio recording, or video clip is real or synthetically altered/created.

What are deepfakes, and how can you spot them? – article from The Guardian

Mapping the Deepfake Landscape – article on LinkedIn

Fooled twice: People cannot detect deepfakes but think they can – article from ScienceDirect.com

The idea that individuals and organizations need to be willing and able to adapt to new technology to work more efficiently and achieve business goals.

An example of digital dexterity is how many businesses and employees had to adapt to working remotely and/or creating a greater online presence to sell goods and services, as a result of the COVID pandemic.

Here is an article from Apty.com that explains why digital dexterity is important in the workplace.

Digital Literacy is the ability to use digital tools such as Microsoft Word or Adobe Photoshop, even the internet, in a general manner.

Digital Fluency, on the other hand, is the ability to transfer digital skills from one technology to another to problem-solve and communicate. It involves not only technical skills but also professional skills like critical thinking, problem solving, teamwork, ethical reasoning, and communication skills. Finally, digital fluency requires being able to choose the best tool for the job and to embrace learning new tools to meet growing or changing digital demands.

Here is an article that digs deeper into the difference between digital literacy and digital fluency:

Digital Literacy vs. Digital Fluency – Understanding the Key Differences – article from W3 Lab

The Digital Fluency and Innovation program at Austin Community College offers microcredentials that teach technical and professional skills employers are looking for.

G

A Gantt chart is a bar chart used in project management that visualizes the dependency between tasks and a timeline. Gantt charts help project managers and team members easily see what needs to be done and when, and what tasks/activities overlap each other.

Typically a Gantt chart is divided into two parts: tasks/activities are listed on the left side (vertically), and the schedule is on the right side. Dates are listed horizontally along the top of the schedule side, and schedule bars are in rows showing start, duration, and stop dates for each task/activity.
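To make that layout concrete, here is a small sketch that prints a text-only Gantt chart. The three tasks and their dates are invented for illustration, and `render_gantt` is a hypothetical helper, not a feature of any spreadsheet or project management application.

```python
from datetime import date, timedelta

# Hypothetical tasks: (name, start date, duration in days).
tasks = [
    ("Research", date(2024, 1, 1), 3),
    ("Design", date(2024, 1, 3), 4),
    ("Build", date(2024, 1, 6), 5),
]


def render_gantt(tasks):
    """Return a text chart: task names on the left, one column per day on the right."""
    first_day = min(start for _, start, _ in tasks)
    last_day = max(start + timedelta(days=length) for _, start, length in tasks)
    total_days = (last_day - first_day).days
    width = max(len(name) for name, _, _ in tasks)

    # Header row: the day of the month above each schedule column.
    header = " " * width + " |" + "".join(
        f"{(first_day + timedelta(days=i)).day:>3}" for i in range(total_days)
    )
    rows = [header]
    for name, start, length in tasks:
        offset = (start - first_day).days
        bar = "   " * offset + " ##" * length  # blank days, then the schedule bar
        rows.append(f"{name:<{width}} |{bar}")
    return "\n".join(rows)


print(render_gantt(tasks))
```

In the printed chart, Research and Design both have a bar under January 3, which is how overlapping tasks show up at a glance.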

Common spreadsheet software programs, like Microsoft Excel or Google Sheets, don’t have a built-in Gantt chart template, but there are lots of tutorials and templates available on the web to create your own Gantt chart in those programs, or you could use a project management application.

Learn more about Gantt charts or make your own:

What is a Gantt Chart? – article from Gantt.com

How to make a Gantt chart in Excel – tutorial article by Ablebits.com

Generative AI is any kind of artificial intelligence that can create, or generate, new content based on what content it has been fed. This could include text, pictures, videos, audio, code, or synthetic data (see also Synthetic Data). The request is entered via a prompt (the input, where the user describes what they want), and returns new, generated content (the output).

A generative AI model can only produce results based on the data it has to work with. ChatGPT is a text producing model, and thus cannot produce images. DALL-E on the other hand, is designed to produce images, not text.

Accuracy, bias, and copyright are some of the concerns people have about generative AI, and artificial intelligence in general (see Artificial Intelligence, AI Ethics, and Algorithmic Bias).

Below are links to some articles and a video to help you dig deeper into the subject of generative AI:

Generative AI: What is it and how does it work? – YouTube video from Moveworks

What is Generative AI? – article from Built In

What is Generative AI? Everything You Need to Know – article from TechTarget Network

AI-generated images from text can’t be copyrighted, US government rules – article from Engadget.com

I

Having an innovation mindset means you are open to new ideas and change and can pivot quickly when needed. You embrace creativity and thinking big, you are resilient when things don’t go as planned, and you are willing to keep trying. Having an innovation mindset also means you can collaborate and work together in a team.

Embracing and Growing an Innovation Mindset – article from Infused Innovations

How to Build an Innovation Mindset? 6 Steps to Cultivate the Innovation Mindset – article from Medium.com

Inventions are brand new things or concepts that never existed before. The automobile is an example of an invention. It caused a huge leap in human capability, by greatly reducing the time it took to get people or goods from one place to another.

Innovations are improvements of, or new ways to use, an existing invention. Going back to the example of a car, Uber ride for hire or Lime e-scooters are examples of innovations to motorized transportation that increased the freedom and near instant gratification of getting from one place to another, by not requiring the ownership of a vehicle, or having to rely on a traditional taxi to provide transportation.

Many experts will argue that a lot of inventions did not arise from a single “lightbulb” or “Eureka!” moment, but instead evolved over many iterations, improvements on failures (their own or other people’s), or from conversations or collaborations.

Furthermore, many inventions, when they first come out, are not ready for mass consumption and it is often innovations that make the invention available to more people.

Evolution, Not Revolution. How Innovation Happens – article from LinkedIn

Where Good Ideas Come From – excellent TED Talk video featuring Steven Johnson (7:29 minutes)

Innovation vs. Invention: Make the Leap and Reap the Rewards – article from Wired.com

Can AI Ever Rival Human Intelligence? – article from Fast Company that talks about AI and innovation

“Integrative learning helps you draw from what you know and combine it with new insights to solve problems” Carol Geary Schneider, President Emerita of the Association of American Colleges and Universities.

Integrative learning is the ability to apply what you know to other similar applications and to know what knowledge to use to solve problems. An example would be applying knowledge of Microsoft PowerPoint to use Google Slides so that you can create presentations in a collaborative environment.

People who are comfortable with integrative learning can be flexible and adapt to change as needed. They can apply their knowledge to new situations.

Why Does Integrative Learning Matter? – article from Nullclass.com

Physical objects or devices that can connect to and exchange data with other devices and systems over the internet. Examples of IoT devices include smart thermostats, smart home appliances, robotic vacuum cleaners, and personal digital assistants like Alexa and Siri.

Here are articles explaining the Internet of Things in more detail:

What is IoT? – article from Oracle.com

What is the IoT? Everything you need to know about the Internet of Things right now – article by ZDNet.com

L

A large language model (LLM) is a form of artificial intelligence that is fed billions of words to learn the patterns and connections between words and phrases. The resulting model can then produce new content that mimics the style and content of the original text. ChatGPT is a large language model.
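The idea of learning which words tend to follow which can be shown with a toy example. The sketch below is not how ChatGPT or any real large language model works internally (those use neural networks trained on vastly more text); it is a simple word-pair counter, with made-up training text and hypothetical function names, meant only to illustrate "learning patterns between words."

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the word seen most often after `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Made-up training text.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigrams(corpus)
print(most_likely_next(model, "the"))   # cat
print(most_likely_next(model, "sofa"))  # None
```

Because "cat" follows "the" more often than any other word in the training text, it is the prediction; a word the model has never seen followed by anything produces no prediction at all.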

Want to learn more? Here are some videos and articles to start your learning about large language models:

What are large language models (LLMs) and how do they work? – YouTube video from Boost AI (2:31 minutes). Good explanation up to about 2:00, after that the presenter promotes Boost AI.

What are Large Language Models used for? – article from Nvidia

A Very Gentle Introduction to Large Language Models without the Hype – article from Medium by Mark Riedl

M

Artificial Intelligence > Machine Learning > Deep Learning

Machine learning is a subfield of artificial intelligence. It refers to computers learning from data, by analyzing patterns and making predictions, rather than following rules that a human explicitly programmed for each task.
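As a minimal sketch of "learning from data," the example below fits a straight line to a handful of made-up data points and then uses it to predict a value it has not seen. Least-squares line fitting is one of the simplest machine learning techniques; the data and the name `fit_line` are assumptions chosen for illustration.

```python
def fit_line(xs, ys):
    """Find the slope and intercept of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up training data: hours studied and the resulting test score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

slope, intercept = fit_line(hours, scores)

# Prediction for an input that was not in the training data.
predicted = slope * 6 + intercept
print(slope, intercept, predicted)  # 2.0 50.0 62.0
```

Nobody wrote the rule "each hour adds two points" into the program; the slope and intercept were worked out from the data, which is the sense in which the computer "learned" them.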

Real-world applications of machine learning include facial recognition, fraud detection, speech recognition, healthcare diagnostics, video recommendations, and equipment failure predictions used for maintenance.

Here are some articles and a video that offer deeper, learner-friendly explanations and real-world examples of machine learning:

Machine Learning, Explained – article from MIT Sloan School of Management

Machine Learning – article from Trend Micro that takes a security view (that is their business, after all) but also has easy to understand explanations of machine learning

What is Machine Learning? – YouTube video from OxfordSparks.com (2:19 minutes)

Coined by Neal Stephenson in his 1992 science fiction book Snow Crash, the metaverse is a fictional, 3-D world where everyone interacts virtually.

Experts today disagree about whether you need virtual reality glasses to truly immerse yourself in the metaverse, or if using a desktop computer or augmented reality could also count.

Here are some articles to explain more about the metaverse:

What is the Metaverse, Exactly? – article from Wired magazine

What is the Metaverse and Why Should You Care? – article by Forbes magazine

A model has different meanings, depending on the application, but basically it is a representation of something:

Three-dimensional

- In fashion, a model is a person who wears or displays something to give you a real-life representation of the designer’s vision

- In architecture or industrial design, a model is a 3-D representation of the thing that has been designed (building, engine, etc.) – this could be a scaled physical model or a digital one

- In science, a model could be a 3-D representation of a complex system used to teach or better understand something. An example could be a physical or digital representation of the human body that shows all the systems (like skeletal, muscular, circulatory, nervous, etc.) to teach anatomy or to better understand medical phenomena

System or procedure

- In medicine, a medical model is a standard set of procedures that doctors use to diagnose and treat patients

- For predicting the weather, computer programs take complex data from various sources (satellites, weather balloons, aircraft, local weather stations, etc.) measuring various phenomena (location, temperature, altitude, wind speed, humidity, etc.) and use mathematical models to predict what the weather will do next

- In machine learning, models are mathematical representations of what the computer has learned (trained on) using pattern recognition. The models are then applied to new data to make predictions and generate output.

N

- Input layer – Nodes receive information from the outside world that the network will use to learn, recognize, or process the data, then send it on to the next layer.

- Hidden layer – this is where the input is transformed into something the output can use. Each node within this layer examines the information and gives it a mathematical weight, depending on how much that data correlates to what the processor (node) is looking for, and then passes the data along to the next layer. The hidden layer itself could contain more than one layer of nodes, depending on the complexity of the data being analyzed.

- Output layer – the final result of all the data processing.
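The three layers can be sketched as a tiny network in plain Python. The weights and biases below are made-up numbers (a real network would learn them during training), and the sigmoid function is just one common choice for squashing each node's result into the range 0 to 1.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1 / (1 + math.exp(-x))


def layer(inputs, weights, biases):
    """Each node weights every input, adds its bias, and squashes the sum."""
    return [
        sigmoid(sum(w * value for w, value in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Made-up parameters: two hidden nodes, then one output node.
hidden_weights = [[0.5, -0.2], [0.3, 0.8]]
hidden_biases = [0.0, -0.1]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]


def forward(inputs):
    """Pass data through the input, hidden, and output layers in turn."""
    hidden = layer(inputs, hidden_weights, hidden_biases)  # hidden layer
    return layer(hidden, output_weights, output_biases)    # output layer


result = forward([1.0, 0.0])  # input layer: two numbers from the outside world
print(result)
```

Adding more hidden layers means calling `layer` more times between the input and the output, each call feeding its results to the next.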

P

Pixelation occurs when bitmap images (e.g. digital photos) are scaled up to the point where the image looks blurry or where individual pixels (the smallest parts of the image, which look like squares) can be seen.

Pixelation can be avoided by moderating how much an image is scaled, or by using images that have a higher resolution (pixels per inch. More pixels per inch = higher resolution).

The trade-off is that higher resolution images tend to have a larger file size. Large file sizes can weigh a website down, increasing the time it takes for the site to load, which can result in visitors giving up and going to a different website. On the other hand, pixelated or blurry images can make a website look less professional and erode visitor confidence.

The trick is to optimize an image so that it is as low a file size as possible while still looking crisp.
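To see why scaling up causes pixelation, here is a small Python sketch. It assumes the simplest way of enlarging a bitmap, which is to repeat each pixel (image editors often use smoother methods that look blurry instead of blocky). The function name `upscale` and the tiny 2x2 "image" are made up for illustration.

```python
def upscale(image, factor):
    """Enlarge a bitmap by repeating each pixel `factor` times in both directions."""
    enlarged = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        enlarged.extend(list(wide_row) for _ in range(factor))
    return enlarged


# A tiny 2x2 image: 0 = light pixel, 1 = dark pixel
tiny = [[0, 1],
        [1, 0]]

for row in upscale(tiny, 3):
    print("".join("#" if pixel else "." for pixel in row))
```

No new detail is created when the image is enlarged: each original pixel just becomes a bigger square, which is exactly what the eye sees as pixelation.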

Want to learn how to use Photoshop to optimize images for web and more? Check out the Photoshop for Web microcredential offered by Austin Community College’s Digital Fluency and Innovation program.

Project management is the process of leading a team to reach all project goals within time, scope, and/or budget constraints. There are many different approaches to project management, often depending on the goal or type of project, but common elements include:

- Conception and initiation – determine things like the purpose, client needs, deliverables or outcome, responsibilities, scope, etc.

- Planning – determine the schedule, budget, team, resources, etc.

- Execution – make sure the planned terms get implemented

- Monitoring and controlling – make sure everything goes smoothly, on time, and on budget, and address any problems quickly

- Closing – resolve any unfinished items and close contract/project

Want to learn more? Check out the Digital Fluency & Innovation microcredential in Project Management.

The rise in generative AI has created a demand for people who can train new AI tools to generate more accurate and relevant answers. A prompt engineer is someone who can frame prompts (questions or commands written in the prompt window) and test that the AI will dependably produce accurate responses. Prompt engineers also look for bias, misinformation, and harmful responses.

Some experts predict this new career field will not last long, as large language models become more accurate and no longer require the tuning of prompt engineers.

Here are articles about prompt engineering:

‘Prompt Engineering Is One Of The Hottest Jobs In AI.’ Here’s How It Works – article by Business Insider

The AI Job That Pays Up to $335K—and You Don’t Need a Computer Engineering Background – article by Time magazine

Here is a free, open-source course to learn how to create the right prompts to get the best results:

Learn Prompting – learning platform

A pivot table is a tool used in many spreadsheet applications (such as Microsoft Excel, Apple Numbers, and Google Sheets) and some database apps (such as Oracle Database and Microsoft Access). It allows the user to collect some data from one table/spreadsheet and summarize or manipulate that data on another table/spreadsheet.

Pivot tables are often used to analyze data. Instead of manually sifting through large amounts of data, a pivot table can be used to summarize, filter, and/or format that data, making it easier to look for patterns and visualize them.
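For readers curious about what a pivot table does behind the scenes, here is a minimal Python sketch. It is not how Excel or Google Sheets implement the feature. The sales records and the function name `pivot` are made up for illustration.

```python
from collections import defaultdict

# Made-up sales records, as they might appear row by row in a spreadsheet
sales = [
    {"region": "North", "product": "Pens", "amount": 120},
    {"region": "North", "product": "Paper", "amount": 80},
    {"region": "South", "product": "Pens", "amount": 200},
    {"region": "North", "product": "Pens", "amount": 30},
    {"region": "South", "product": "Paper", "amount": 50},
]


def pivot(rows, row_field, column_field, value_field):
    """Add up value_field for every (row_field, column_field) combination."""
    table = defaultdict(lambda: defaultdict(int))
    for record in rows:
        table[record[row_field]][record[column_field]] += record[value_field]
    return {row: dict(columns) for row, columns in table.items()}


summary = pivot(sales, "region", "product", "amount")
for region, totals in summary.items():
    print(region, totals)
```

Five rows of raw data become a two-by-two summary, with regions as rows and products as columns, which is the same kind of summary a spreadsheet pivot table produces.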

What is a Pivot Table? – article from ComputerHope.com

How to Create a Pivot Table in Excel: A Step-By-Step Tutorial – article from Hubspot.com

How to Create Pivot Tables in 5 Minutes (Microsoft Excel) | Indeed – YouTube video from Indeed (7:45 minutes)

Visualizing Data with Pivot Chart: Part 1 – article from Pluralsight.com

S

Some people confuse search engines with web browsers, or web browsers with web servers – here’s some clarification:

- Web Page – A web page is a document made up of:

  - HTML (the code that displays the basic content of what you see on a web page – like titles, paragraphs, bulleted lists, links, etc.)

  - CSS (the code that adds styling to a webpage or website – like colors, sizing and positions of elements like borders, and some animation)

  - Scripts (code that adds interactivity – like hover effects on buttons)

  - Media (images, sounds, videos)

These are common elements of a webpage, though some web pages may vary.

- Website – a collection of linked web pages, having a domain name (unique address used to find the website) and a home page (the main page that visitors typically land on first – like Target.com).

- Web Server – a computer that hosts one or more websites, and makes it possible to deliver pages of the website to users who want to view them over the internet. It is a physical computer that stores all the data that makes up one or more websites.

- Web Host – a service that provides spaces (via servers) on the internet for websites to exist. They usually take care of the security, setup, and technical issues as well. Examples of web hosts include Bluehost, Hostinger, SiteGround, and DreamHost.

- Web Browser – A software program that retrieves and displays web pages from servers on the internet. Browsers also allow you to see what you searched for using a search engine. Examples of web browsers include Chrome, Firefox, Safari, Edge, and Opera. You need to install a browser on your computer to use it, and you can have multiple browsers installed and use them simultaneously.

- Search Engine – A search engine is a specialized website on the internet. You need a browser to use a search engine. A search engine crawls the web ahead of time and builds an index of what it finds, then looks through that index for content matching what you are searching for. It ranks the search results by relevancy and displays them in a list. Businesses related to a search keyword can pay to have their links displayed first as “sponsored” links (like Walmart or Target showing up when you search “housewares”). Examples of search engines include Google, Bing, and DuckDuckGo.
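The way a search engine finds and ranks results can be sketched roughly in Python. This is a simplified illustration, not how Google or Bing actually work: the three pages and their addresses are made up, and "relevancy" is assumed to mean nothing more than how often the search words appear on a page.

```python
from collections import defaultdict

# Made-up pages standing in for content a search engine has already collected
pages = {
    "shop.example/housewares": "housewares kitchen towels and housewares deals",
    "blog.example/kitchen": "kitchen tips for small apartments",
    "news.example/weather": "weather forecast for the weekend",
}


def build_index(pages):
    """Map each word to the pages that contain it, with a count per page."""
    index = defaultdict(dict)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word][url] = index[word].get(url, 0) + 1
    return index


def search(index, query):
    """Score pages by how often the query words appear; best match first."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for url, count in index.get(word, {}).items():
            scores[url] += count
    return sorted(scores, key=lambda url: (-scores[url], url))


index = build_index(pages)
print(search(index, "kitchen housewares"))
```

Because the index is built in advance, the search itself only has to look up words rather than read every page again, which is part of why results come back so quickly.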

Want to learn more about computers and the internet? Check out our Intro to Computers and Internet Search microcredential.

A spreadsheet is an electronic document, consisting of rows and columns, arranged in a grid of cells, that can be used to capture, display, calculate, and manipulate data. The cells hold numerical data and text, can be sorted, and can have variables or formulas applied to them.

There are hundreds of careers that use Excel or require spreadsheet knowledge, including accounting, engineering, economics, statistics, construction management, project management, purchasing, sales management, market research, office administration, and more.

Want to learn more about spreadsheets? Check out Digital Fluency & Innovation’s Spreadsheets microcredential.

Synthetic data is any data (or content) that is generated by a computer, as opposed to data that is collected from real events. It is often used where real-world data is lacking or contains sensitive information.

Some industries that use synthetic data include automotive, banking, finance, security, marketing, manufacturing, and robotics.
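As a small illustration of the idea, the Python sketch below generates made-up customer records that could stand in for sensitive banking data during testing. The field names, value ranges, and the function name `make_synthetic_customers` are all assumptions chosen for the example. Real synthetic data tools usually try to mimic the statistical patterns of the real data far more carefully.

```python
import random


def make_synthetic_customers(count, seed=0):
    """Generate made-up customer records; no real person is described."""
    rng = random.Random(seed)   # fixed seed so the same data can be regenerated
    customers = []
    for customer_id in range(1, count + 1):
        customers.append({
            "id": customer_id,
            "age": rng.randint(18, 90),
            "balance": round(rng.uniform(0, 10_000), 2),
        })
    return customers


for customer in make_synthetic_customers(3):
    print(customer)
```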

This article from Turing goes into detail about synthetic data and gives real-world examples of synthetic data.

Systems Thinking is a way of looking at all the parts of a problem or system that influence or depend on each other to come up with a solution or to better understand relationships and complexities. Systems are anything that has interconnected parts or elements. Examples of systems include the human body, car engines, manufacturing processes, transportation systems, the internet, weather systems, and business structures.

Often, because of interdependencies, if one part of the system is not working well, the rest of the system suffers. Systems thinking looks at all the parts and tries to identify problems and solutions to make the system work better or more efficiently.

Here are some articles to dive deeper into the subject of Systems Thinking:

Systems Thinking – The New Approach to Sustainable and Profitable Business – article from Network for Business Sustainability

T

Technology + Equity = “Techquity.”

Ken Shelton defines techquity as “merging the effective use of educational technologies with culturally responsive and culturally relevant learning experiences to support students’ development of essential skills.” Techquity is not only ensuring equal access to technology, but also making sure that the learning experience is rich and meaningful for everybody.

A transformer model is a relatively new deep learning model used primarily for natural language processing (NLP) and computer vision (CV). In NLP, applications could include translations between two languages, or summarization of a body of text. In CV, applications could include 3-D modeling in augmented reality, or navigation for an autonomous vehicle or a robot.

One unique feature of transformer models is that rather than process data piece by piece in a sequential manner (like a sentence word by word or an image pixel by pixel), transformers take the entire sentence or image at once and pay attention to the relationships between the words or pixels. The result is usually more accurate and computed faster. The downside is that transformers require huge amounts of data (and resources) and unexpected output can occur.
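The "attention" idea can be sketched in plain Python. This is a heavily simplified illustration: the three word vectors are made-up numbers, and real transformers also apply learned transformations to each word before comparing them, a step left out here. The function names `softmax` and `self_attention` are chosen for the example.

```python
import math


def softmax(values):
    """Turn raw scores into positive weights that add up to 1."""
    highest = max(values)
    exps = [math.exp(v - highest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """For each word, blend in every word's vector, weighted by similarity."""
    size = len(vectors[0])
    outputs = []
    for query in vectors:
        # Compare this word with every word in the sentence at once
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(size)
            for key in vectors
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(size)
        ])
    return outputs


# Made-up 2-number vectors standing in for three words in a sentence
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(words):
    print([round(x, 3) for x in row])
```

Each output row is a mix of all three words, with more weight given to the words that are most similar to the one being processed.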

Articles to learn more about Transformer models of AI:

What is a Transformer Model? – article from Nvidia