“If this is Hoover or Roosevelt, I’m damn sure going to be Roosevelt!” — George W. Bush (R) in 2008, after the Lehman Brothers bankruptcy tanked the stock market.

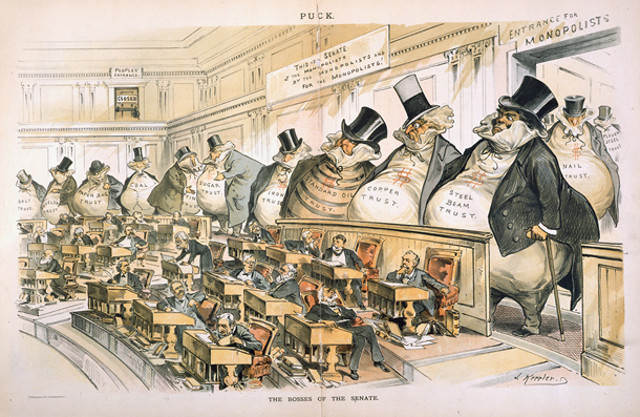

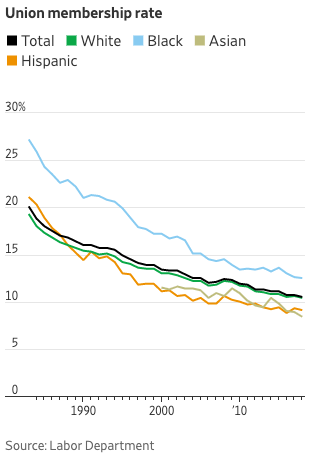

Between 2000 and 2020, America drifted left on some cultural issues but continued to drift right economically as unions weakened, workers labored longer hours for less overtime pay, Wall Street banks grew larger in relation to the rest of the “real” economy, and independent contractors lacked benefits in a gig economy. With millions struggling to make ends meet in the retail and service sectors or fulfillment centers and manufacturing jobs lost to outsourcing and automation, class lines hardened to the point that America had less upward mobility than European countries. By 2020, nearly all American families receiving SNAP food benefits (f.k.a. stamps) were working rather than unemployed, with companies shoving their cost of living onto other taxpayers, including healthcare. But multinational firms transcended borders after the Cold War, cashing in on capitalism’s global victory and the Internet, leaving U.S. technology with the five biggest public companies in history: Apple, Microsoft, Amazon, Alphabet (Google), and Facebook. Corporations also won the legal right in Citizens United v. FEC (2010) and American Tradition Partnership, Inc. v. Bullock (2012) to unlimited “dark money” Super PAC campaign donations to buy politicians, judges, and state attorneys general because, as “citizens,” corporations were exercising their “free speech.” Religiously, Christian Fundamentalism spread from the Bible Belt across the country and, politically, the Reagan Revolution kept liberals on the defensive. Taxes and faith in government stayed low, with only a tenth as much spent on infrastructure as fifty years earlier (0.3% of GDP vs. 3%) and congressional approval ratings dropped to all-time lows as corporate lobbies brazenly bought off legislators. Ronald Reagan emboldened conservatives in the same way that FDR’s New Deal emboldened liberals a half-century earlier. 
And, just as FDR would’ve found some of LBJ’s Great Society too liberal, Reagan wouldn’t be conservative enough today to run as a Republican.

By the mid-2010s, Western countries were shifting away from the traditional left-right economic spectrum that had defined politics for a century toward a dichotomy between those who embraced globalization and the postwar Western alliance (NATO, EU, etc.) and those with a more nationalist viewpoint represented by Donald Trump in the U.S. and Eurosceptics Theresa May in Britain, Viktor Orbán in Hungary, Geert Wilders in the Netherlands, Marine Le Pen in France, Lech and Jaroslaw Kaczynski in Poland, Beppe Grillo in Italy, and Jörg Meuthen in Germany. Jair Bolsonaro was Brazil’s “Tropical Trump” and billionaire prime minister Andrej Babiš styled himself the “Czech Donald Trump.” Trump strategist Steve Bannon called Switzerland’s Christoph Blocher — who’d kept Switzerland out of the European Union long before Britain’s “Brexit” — “Trump before Trump.” United in their opposition to unchecked immigration and free trade, these nationalists transcended the left-right economic spectrum. Scottish nationalist and First Minister Nicola Sturgeon was a democratic socialist and Trump oscillated between political parties from 1987-2011. The 2007-09 Financial Crisis and Middle Eastern immigration exacerbated the new globalist-national divide, but it also stemmed from traditional middle-class economic concerns. The issues that defined the old, overlapping economic spectrum were still more important than ever, as gains from increased productivity flowed almost exclusively to the wealthy, frustrating the middle classes and making it hard for the right to argue the merits of trickle-down economics convincingly to a broad GOP coalition. Despite increased economic productivity, wages remained largely flat between the 1970s and 2010s (relative to inflation) except for the wealthy. Forty percent of community college students nationwide experienced some form of housing instability in any given semester. 
These 2012 numbers from Harvard and Duke’s business schools, published in the left-leaning Mother Jones, explain the widening wealth gap:

With the world’s top 1% now worth more than the bottom 99%, it’s likely that liberal parties will pursue increasingly socialist means to redistribute wealth within market economies (e.g. “Jobs-for-All” with $15 minimum wage, right) while conservative parties steer the conversation away from economics toward culture wars, or at least discourage “class warfare.” Right-wing populism offers workers curtailed immigration and tariff protectionism, but tariffs have an incidental, mixed impact on workers because they disrupt exports and raise prices/costs and halting immigration can undercut the economy, as we saw in Chapters 7-8. We still have similar debates about inequality as we had forty years ago even though the ultra-rich have way more money than they did then. For example, if we saw that, during COVID-19, Amazon’s Jeff Bezos netted $35 billion per month, our reaction would be similar to if he’d netted $35 million per month — still a lot but a thousand times less.

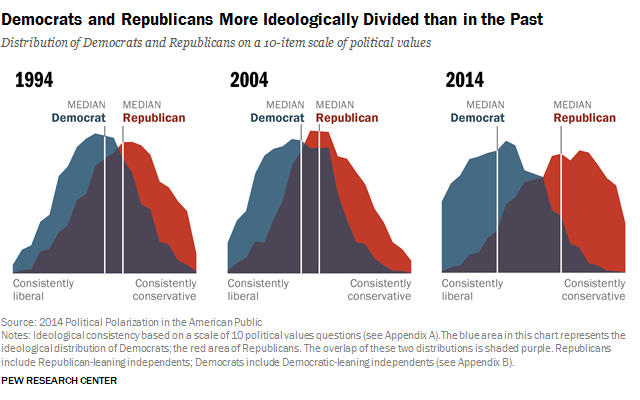

In American domestic politics after the Reagan Revolution, the GOP moved to the right and the Democrats followed by moving partway to the right on economics but not culture, and the gap between the two parties grew because of factors we covered in the last half of the previous chapter: media fragmentation, enhanced gerrymandering, and uncompromising, rights-based party strategies, along with the Monica Lewinsky scandal and contested 2000 Election in the optional section. Other factors magnifying partisanship were the end of the Cold War (removing a unifying cause) and greater (not less) transparency on Capitol Hill, leading to fewer backroom compromises outside the view of partisan lobbyists and voters. Social media poured accelerant on the partisan fire, creating an “outrage-industrial complex” also covered in the previous chapter.

All these factors amplified the partisanship that’s been a mainstay of American democracy, creating near-dysfunctional gridlock in Congress worsened by increased parliamentary filibustering that required 60% super-majorities on Senate bills. The logic behind the filibuster was to create opportunities for the minority party to help shape legislation in the spirit of compromise but, since voters wouldn’t tolerate their respective parties compromising, the filibuster just contributed to gridlock. Increasingly, presidents bypassed Congress with executive orders to get things done and Republican state attorneys general in states like Texas sued Democratic presidents to block national legislation in their respective states (i.e. a modern-day version of nullification theory). Democrats employed their own nullification by legalizing marijuana at the state level and creating [immigrant] sanctuary cities in defiance of federal law.

Some gridlock is a healthy and natural result of the Constitution’s system of checks-and-balances, though New Yorkers invented the actual term gridlock in the early 1970s to describe traffic. However, too much gridlock disrupts the compromises that keep the political system functioning. For instance, the bipartisan Simpson-Bowles plan to balance the budget long-term with small compromises on both sides never even made it out of committee and likely wouldn’t have passed if it had. Meanwhile, among voters, rifts opened in the combustible 1960s evolved and hardened into the “culture wars” of the next half-century.

Complicating and dovetailing with these culture wars was a libertarian push back against regulations on guns, drugs, sexual orientation, and gambling. Taking advantage of an omission/loophole in the 1934 National Firearms Act — requiring FBI background checks, national database registration, fingerprinting, photo, and fees for fully automatic machine guns, silencers (until recently), hand grenades, missiles, bombs, poison gas, short-barreled rifles, and sawed-off shotguns — and expiration of the Federal Assault Weapons Ban (1994-2004), gun lobbies staked out a market among civilians for magazine-fed, semi-automatic rifles like the Colt AR-15, with some marketing even aimed at children (technically their parents). Their high-velocity, low-mass bullets tumble so as to obliterate organs beyond repair. An ATF-approved kit could “bump-fire” them into near fully-automatic machine guns until Trump’s DOJ outlawed bump stocks in 2018. As for the Assault Weapons Ban of ’94-’04, it didn’t have much impact on overall homicide rates (the vast majority of which don’t involve mass shootings), but it correlated with a lower average of people killed per mass shooting. But if the U.S. tried to reinstate the Assault Weapons Ban, politicians would be confronted with scenarios like this in the Kentucky legislature in 2020:

Despite no evidence that the U.S. was poised to invade itself or confiscate everyone’s weapons — the usually overly-paranoid but occasionally accurate “slippery slope” argument — the National Rifle Association (NRA) promoted semi-automatic sport rifles as a way for citizens to raise arms “against a tyrannical government run amok.” But the Second Amendment originally bolstered state government militias against a potential national (standing) army, not private militias opposing all government and police. Unsurprisingly and for obvious reasons, the Constitution (Article III, Section 3) forbids as treasonous citizens levying war against the United States and armed private militias are technically illegal in every state, which adds to their motivation for existing in the first place. NRA president Wayne LaPierre thought that banning semi-automatic rifles or bump stocks, even for 18-21 year-olds, would lead the U.S. down the path to socialism and argued for better enforcement of existing laws. At five million members, the NRA represents a small percentage of hunters (~17 million) and gun owners (~ 1/3 of American adults) and most NRA members weren’t as radical or absolutist as LaPierre. The idea that the Second Amendment forbids any gun regulation at all is new to American history, dating from the Smith & Wesson smart gun controversy of 2000. Conservative Justice Antonin Scalia, a strong supporter of the Second Amendment, acknowledged in McDonald v. Chicago (2010) that the government can regulate guns under 2A. But McDonald and District of Columbia v. Heller (2008) interpreted 2A as applying to individual gun rights, not just militias.

Six Million Wasn’t Enough (in the Holocaust) T-Shirt Worn By Proud Boy Protesting Joe Biden’s Election Certification in Washington, D.C., 2021

Law enforcement was outgunned by protesters in Nevada’s Cliven Bundy Standoff (4.14) and Charlottesville’s Unite the Right Rally (8.17) as citizens combined the Second Amendment right to bear arms with the First Amendment right to free speech and assembly, though in neither case did protesters open fire. Likewise, protesters in Dallas (2016), Kenosha (2020), and Portland (2020) were armed but those events didn’t escalate beyond a few deaths. Still, expansive interpretations of the First and Second Amendments appear on a collision course, especially in open carry states like Texas without special event restrictions. Media fragmentation compounds the volatility as the opponents in street confrontations don’t share an agreed-upon reality. If this trio of factors gets too combustible, the adults in the room will be hard-pressed to hold the center and keep the system functioning, despite the extremely low numbers of Americans in these groups. The far left isn’t as militarized as the far right, but protesters took over six square blocks of Seattle’s Capitol Hill neighborhood for three weeks in 2020 (CHOP), enabled by lenient mayoral policy. America’s Antifa branch is the most high-profile leftist group but they’re decentralized, with no pull inside the Democratic Party since they tend to be anarchists and further left on the economic spectrum than politicians. Antifa affiliates’ methods range from peaceful (marching, organizing) to obnoxious (doxxing, harassment, property damage) to violent (fighting, milkshaking, rock-tossing), but most Americans agree with their opposition to fascism and racism if not their methods and desire to dismantle capitalism. 
On the far right, it won’t bode well if lines blur between their militias and law enforcement, as they did in Kenosha (vigilante murderer Kyle Rittenhouse didn’t have a permit to carry in Wisconsin), or if politicians incite private militias as Trump did in Michigan to protest COVID restrictions and to protest Joe Biden’s election in the Capitol Siege of 1.6.21 with the Boogaloo Bois, Proud Boys, and Oath Keepers. In his last debate with Biden, Trump told the Proud Boys to “stand back and stand by.” These paramilitaries’ members vary widely in their political views, ranging from weekend warriors playing army with their buddies all the way to hardcore neo-Nazis (right). The militias aren’t uniformly white, but many share Charles Manson’s sense of impending racial conflict (Chapter 16). The more serious variety think it’s their prerogative to impose their will on others with force rather than through democratic means like voting, debate, legislation, boycotts, op-eds, etc. and would feel naked showing up to a peaceful protest unarmed. Patriotic in the extreme, they have no faith in the American form of government except for how they imagine it to have been in 1776.

Washington, D.C. disallows even permitted concealed weapons near protests, which weakened the hand of those trying to overturn the 2020 election and/or lynch VP Mike Pence for certifying the electoral college results on January 6th as the White House dragged its feet deploying the National Guard. If not for that restriction and the heroism of Republican judges, politicians, and election officials in swing states who stood their ground and backed Uncle Sam amidst death threats and intense pressure, including Trump’s infamous call to Georgia Secretary of State Brad Raffensperger, the constitutional republic would’ve temporarily ceased functioning for the first time since its inception in 1791, with the executive branch overriding the legislative. But in the two months between the election and certification, judges in SCOTUS and numerous lower courts distinguished between the quality and quantity of evidence for substantive voter fraud or rigged voting machines and refused to ban mail-in ballots ex post facto. While the quantity of evidence for fraud, cheating, or “millions of missing ballots” outside the courtroom was enormous and will grow in coming years to meet the market, the actual evidence that Trump’s attorneys introduced in courts wasn’t so much low quality as non-existent, except for a handful of procedural complaints that wouldn’t have swung the election. They wisely conceded under oath that they really didn’t have even allegations of fraud, let alone evidence for it (in America’s legal system, the two go hand-in-hand). To argue otherwise would’ve exposed them to disbarment, contempt of court or, worse, jail time for perjury. Peaceful transfers of power and faith in elections are the linchpin of democracy, not just a symbol. The Capitol invaders, though, weren’t trying to overthrow the U.S. so much as save it, overturning what they saw as a fraudulent election. As of May 2021, 33% of Americans agreed. 
The burden of proof is on them to explain why Trump’s attorneys didn’t introduce persuasive evidence if they had any.

What would’ve transpired next had the 1.6 insurrection succeeded is hard to guess, partly because there wasn’t a coherent, agreed-upon plan among its rebels, other than letting Q’s prophesied “storm” unfold, and partly because the U.S. would’ve been in uncharted territory. Neither the British invasion of Washington, D.C. during the War of 1812 nor the Confederate secession from 1861-65 disrupted the Constitutional framework, except for President Lincoln’s suspension of habeas corpus in the Upper South. After the 2021 Capitol Siege, there were rumors, still unsubstantiated, of a mini-coup whereby the Cabinet, Pentagon (JCS), and Justice Department effectively transferred leadership to Pence for the remaining two weeks of Trump’s presidency. Pence’s military aide had the nuclear football in tow even as they escaped the Capitol.

While overall crime rates, including gun-related violence, fell in the U.S. in the early 21st century, killers used semi-automatic rifles as assault weapons in mass shootings in Aurora (2012), Newtown-Sandy Hook (2012), San Bernardino (2015), Orlando (2016), Dallas (2016), Las Vegas (2017), Sutherland Springs, Texas (2017), Parkland, Florida (2018), El Paso (2019), Dayton (2019), Midland-Odessa (2019), and Boulder (2021). Bump kits allowed assassins like Stephen Paddock in Las Vegas to fire off 400-800 rounds per minute. It’s currently illegal to research federal statistics on gun violence. Ghost guns assembled from component parts also complicate the registration process because they lack serial numbers. After Sandy Hook, initial polls showed over 95% of Americans and over 80% of NRA members supported expanding background checks, but the measure failed in Congress. After Parkland, Trump initially threw his support behind universal background checks, but the NRA backed him down. The same scenario played out after El Paso. After each shooting, the GOP argued that it was inappropriate to discuss regulation so soon after a tragedy and that such regulations violated the Second Amendment and/or would be ineffective.

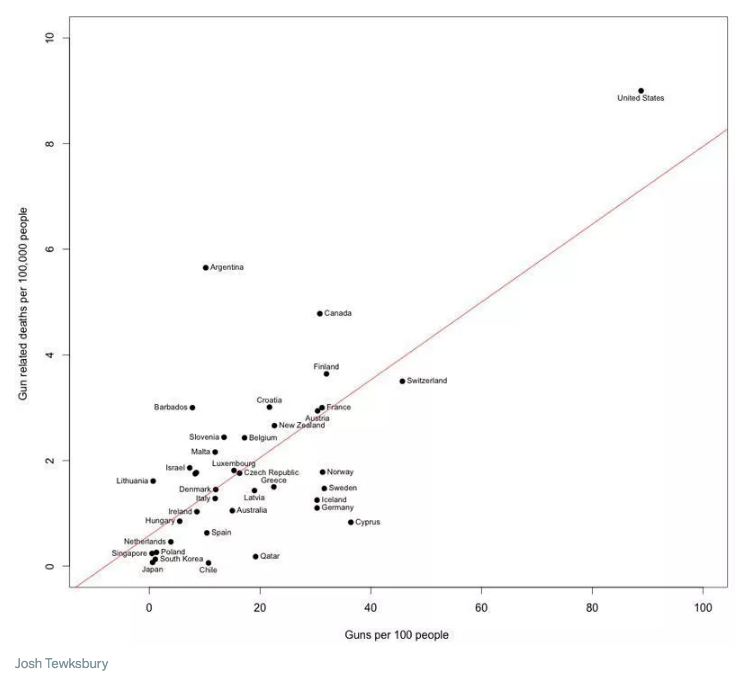

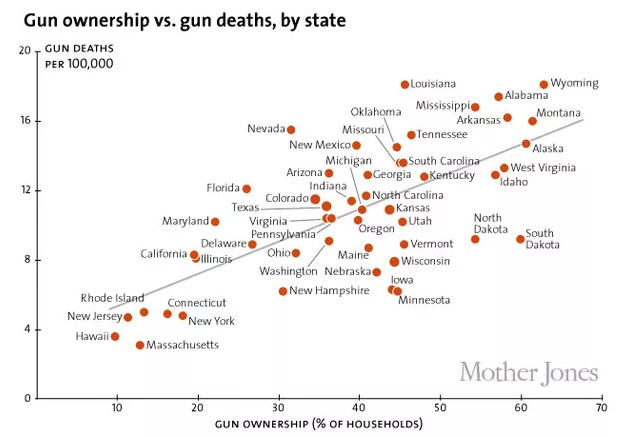

Americans remained divided on guns, with control advocates pointing out the ownership correlation to murder by country and state, while pro-gun lobbies stressed the need for more guns for protection amidst the mass shootings. Meanwhile, the death toll from domestic gun violence is far higher than in other countries, killing more Americans in the first half of 2019 alone than the country lost in the Normandy Invasion of 1944 and first month of combat against Germany. Suicide rates among white males are higher than among other demographics and higher yet when the subject has access to guns. In 2015, Governor Greg Abbott admonished Texans in a Tweet® for not buying more guns, embarrassed that they’d fallen behind California for a #2 ranking. If the respective sides of the debate shared anything, it was a common concern for safety; they just had opposing views on how to attain safety. This graph charted other points of agreement circa 2017. In “Guns & the Soul of America,” conservative columnist David Brooks interpreted guns as being not just for protection or hunting but also a proxy for larger issues in the culture war — as “identity markers” for freedom, self-reliance, toughness, responsibility, and controlling one’s own destiny in a post-industrial world. Campaign TV ads in the reddest gerrymandered districts were an art form, testing who could be the gunniest while stopping short of actually putting their Democratic opponent in the cross-hairs, as that would’ve risked future liability.

While the U.S. embraced near military-grade weapons for civilians and its legislators pushed to re-legalize silencers and prevent background checks, the U.S. simultaneously went in a more liberal but likewise libertarian direction on many social issues, including marijuana legalization in some states and same-sex marriage everywhere (Chapter 17). If Americans today aren’t more divided than usual, they are at least better sorted by those who stand to gain by magnifying their disagreements (e.g. cable TV manufactured an otherwise mostly non-existent “War on Christmas”). And they’ve sorted themselves better than ever, often into conservative rural areas and liberal cities, reminiscent of the rural-urban divides of the 1920s. As we saw in the previous chapter’s section on gerrymandering, this geographic segregation resulted in partisan districts of red conservatives and blue liberals, with interspersed purple that defied categorization (most Americans live in suburbs). The fragmented and partisan media encouraged and profited from animosity between citizens, selling more advertising and “clickbait” than they would have if politicians had cooperated and citizens respectfully disagreed over meaningful issues (around 20% of Americans were online as of 1997, but almost everyone was by 2000, especially on AOL). A 1960 poll showed that fewer than 5% of Republicans or Democrats cared whether their children married someone from the other party; a 2010 Cass Sunstein study found those numbers had reached 49% among Republicans and 33% among Democrats. This trend might not continue, as polls show that the actual brides and grooms (Millennials) are less rigid ideologically than their parents. 
In 2014, Pew research showed that 68% of Republican or Republican-leaning young adults identified their political orientation as liberal or mixed and similar polls show some young Democrats identifying as conservative (it’s also possible that many young people don’t know what liberal or conservative mean).

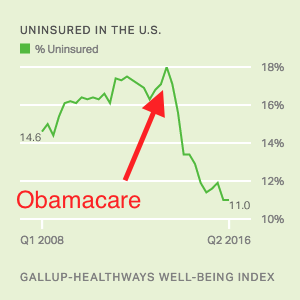

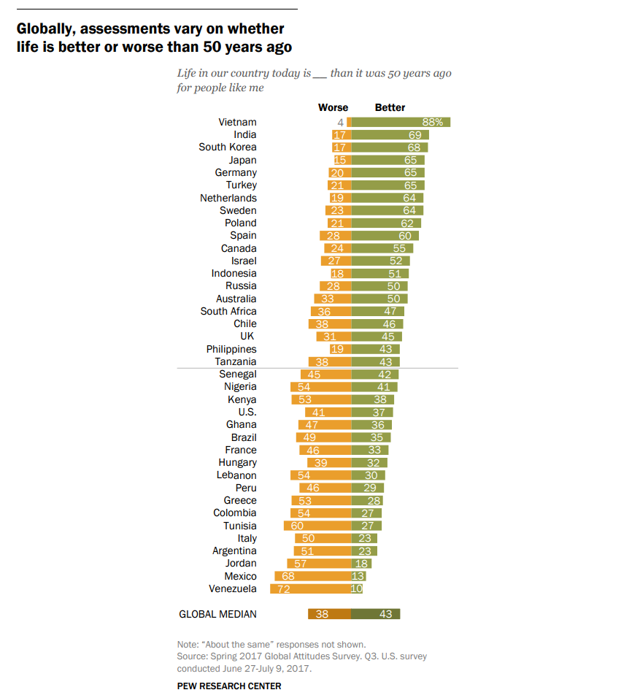

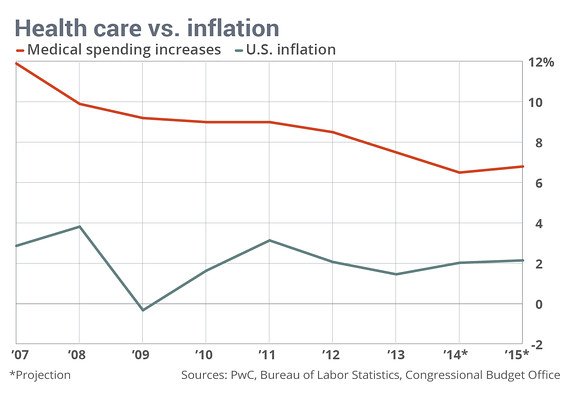

But more than ever, politicians struggled to please voters who disliked each other and, like children toward their parents, were both defiant toward and dependent on government. While it’s true that the U.S. narrowly averted economic and political disasters in 2008 and ’21, respectively, voters also thought other things were declining even when they weren’t. Declinism, aside from being a nearly universal cognitive bias, also sells better, resulting in a situation where most people thought the country was going downhill while their local area was improving, just as they thought public schools were “broken” even though their own children’s public schools were good. Americans couldn’t agree on much, but many felt aggrieved and had “had enough” even if they weren’t well-informed enough to know what exactly they’d had enough of. And those who did know didn’t agree with each other. Amidst this hullabaloo, Tweeting®, and indignation — with nearly every imaginable demographic perceiving itself as “under siege” — historians hear echoes of earlier periods in American history. Large, diverse, free-speech democracies are noisy and contentious countries to live in, as you’ve already seen from having read Chapters 1-20. Partisan media is a return to the 18th and 19th centuries, while today’s cultural rifts seem mild compared to more severe clashes in the Civil War era, 1920s, and 1960s-70s, even if those eras weren’t saturated with as much media. In the early 21st century, hyperpartisanship and biased media complicated and clouded debates over globalization/trade, healthcare insurance, and the Financial Crisis/Great Recession that would’ve been complicated enough to begin with. These are three primary areas we’ll cover below, with some brief economic background to start.

The American economy continued on a path toward increased globalization and automation that began long ago, with American labor competing directly against overseas workers and position-controlled (stationary) factory robots. Information technology assumed a dominant role in most Americans’ jobs and lives and traditional manufacturing jobs were increasingly outsourced to cheaper labor markets or displaced by automation, compromising middle-class prosperity. Automation began with the Industrial Revolution and has been steadily replacing workers ever since, deskilling parts of the workforce. Strip (surface) mining, for instance, conducted by excavators and earthmovers, started eroding coal mining jobs decades ago. Most longshoremen have been displaced by the intermodal container system we saw in Chapter 15. Some economists argue that, despite all the talk of robotics, the actual rate of automation hasn’t increased (see optional Krugman article below). Yet, studies showed that more jobs were lost to automation (~85%) than outsourcing (~15%) in the first decade of the 21st century, even though the U.S. lost over 2 million jobs to Chinese manufacturing. The verdict isn’t in but, proportionally, the digital age hasn’t yet translated into the domestic job growth that accompanied the steam engine, railroad, electricity, or internal combustion engine, and Wall Street’s expansion hasn’t been accompanied by growth in the “real economy.” Apple, Microsoft, Google, and Amazon employed fewer than a million workers between them as of 2019. Online retail increasingly replaced brick-and-mortar as malls closed and For Lease signs popped up in strip malls. Automation and digitization made businesses more efficient than ever and American manufacturing was stronger than naysayers realized — still the best in the world — but it provided fewer unskilled jobs. Efficiency is a two-edged sword: sometimes technology destroys jobs faster than it creates others. 
If automated trucks displace our country’s drivers over the next 10-20 years, it’s unlikely we’ll find another 1.7 million jobs for them overnight.

But remember that almost no jobs exist today that were around a century ago, and vice-versa. Creative destruction always cuts in both directions. The loss of coal mining jobs has been more than offset by the increase in renewable energy and natural gas jobs. If we weren’t creating new jobs at roughly the same rate we’re losing them, then unemployment rates would be higher. But also realize that it’s a tough labor market for people without at least some training past high school in college, a trade school, or the military, and robots are displacing white-collar and blue-collar workers alike. Yet, many jobs remain unfilled and high schools focusing on Career & Technical Education (CTE) are gaining traction to fill gaps.

Most likely, the dynamic American economy will adjust as it always has before. Karl Marx feared that steam would spell doom for human workers and John Maynard Keynes feared the same about fuel engines and electricity. A group of scientists lobbied Lyndon Johnson to curtail the development of computers. From employers’ standpoints or that of the free market, robots are more efficient than humans and they never complain, show up late, get sick, join unions, file discrimination suits, demand pensions, or health insurance, etc. So far, at least, these fears of being taken over by robots haven’t been realized on a massive scale, but automation has gained momentum since Marx and Keynes and is well on its way to posing a significant economic challenge. Still, for those with training, America’s job market remains healthy, with unemployment under 5% as of 2019 and ~ 6% post-COVID in 2021. Humans are unlikely to go the way of the horse, partly because democratic societies have more power over robots than horses had over engines. But, unfortunately, the verdict isn’t in yet on whether the scientists who warned LBJ about computers were right. Hopefully, physicist Stephen Hawking, sci-fi writers, and singularity theorists are wrong about artificial intelligence (AI) taking control of humanity. Or, maybe, if it does, the robots will take pity on us. Those interested in a lighter take on these foreboding themes should view the visionary WALL-E (2008), one of the best movies of the young century.

Globalization: Pro & Con

Like automation, globalization didn’t start in the 20th century. Global maritime trade dates to the 15th century and even earlier with overland routes like the Silk Road and, earlier yet, during the Bronze Age. Trade has always been a controversial and important part of history. Even in ancient and medieval times, trade routes spread diseases like the Plague more readily, just as today’s hyper-connectivity hastened COVID-19 (some prognosticators predict that the post-pandemic world will rely more on local networks). It’s unlikely that early Christianity would’ve taken root without the Roman Empire’s vast road network. Languages and diets expanded because of trade. People have always wanted the advantages of free trade without the disadvantages, while politicians were left to sort it out. British trade controversies predate Brexit, tracing to the late Middle Ages with arguments over imported wool and violence toward foreign traders and, much more recently, the formation of the European Union in 1992 to expedite free trade and movement of workers. Free-trading colonial smugglers that spearheaded the American Revolution resented Britain’s restrictive, mercantilist zero-sum trade policies; then protectionist Treasury Secretary Alexander Hamilton aimed to incubate America’s early industrial revolution with tariffs (duties); then trade disputes and embargoes drove the U.S. into the War of 1812. Tariffs were a divisive enough issue between North and South in the 19th century to be a meaningful, if secondary, cause of the Civil War behind slavery. The U.S. then had high tariffs as the Industrial Revolution kicked into gear after the Civil War (Chapter 1), with the GOP touting protectionism. 
But analysts interpreted tariffs as worsening the Great Depression (Chapter 8) and they were disparaged in British history because the Corn Laws (1815-1846) artificially raised food prices even as the poor went hungry, enriching landowners at the expense of the working classes and manufacturers. Thus, tariffs fell out of favor after World War II as the U.S. and Britain reshaped the new global economy along the lines of free trade.

In this lengthy but important section, we’ll discuss the general pros and cons of free trade (without tariffs) after WWII, then analyze the ongoing rift in Sino-American trade. You’ve seen in the first twenty chapters that issues cross back and forth historically between the political parties. Trade policy cuts across partisan lines over time but also at any one point in time, including now. Both parties want the advantages of trade without the disadvantages, though that’s impossible.

The Return to Amsterdam of the Second Expedition to the East Indies on 19 July 1599, by Andries van Eertvelt, ca. 1610-20

When the U.S. and Britain set out to remake the world economy in their image after World War II and avoid more depressions (Chapter 13), they encouraged as much free trade as possible between America and Europe. In reality, though, there were virtually no countries that favored pure, unadulterated free trade. In America, most major economic sectors had lobbies contributing to politicians: some free trade, some protectionist, and some both. Most democratic countries, including those that signed the General Agreement on Tariffs and Trade (GATT) in 1947, had voting workers back home demanding favoritism for their profession. France, for instance, qualified its GATT inclusion with “cultural exceptions” enabling its cinema to compete with Hollywood imports and it maintained high agricultural tariffs. Translation: the upside of trade was great, but other countries couldn’t undermine farm-to-market La France profonde, or “deep France,” with cheap wine, bread, and cheese. In sum, the free-trade movement has lowered but not eliminated tariffs: the U.S. averages ~ 2.5%, Japan 2%, and China and European countries ~ 3%. These are averages, though, and vary widely among products.

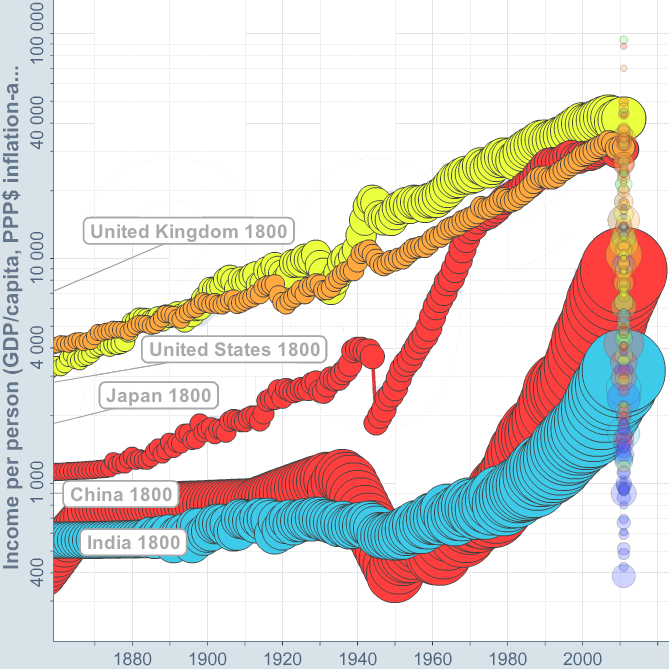

By the early 1990s, a near consensus of economists favored free trade but globalization threatened some American workers while strengthening others. Outsourcing work to other countries and automation weakened manufacturing labor, undercutting America’s postwar source of middle-class prosperity and upward mobility for blue-collar workers. Democrats had supported unions since the New Deal of the 1930s and they generally supported a Buy American protectionist platform to help workers, including taxes or duties (tariffs) on imports. They were the American version of the French farmers, in other words. But globally, economic inequality that had risen steadily since the dawn of the industrial revolution reached its peak in the 1970s, precisely when inequality within the United States was at its lowest. With freer trade policies, inequality has since grown in the U.S. while trending down worldwide.

Tariffs are the main tool of protectionism, discouraging free trade in order to protect workers in one’s own country. This is more complicated than it might seem, though, because Buy American helps some workers and not others; it can hurt those who work in export industries that are susceptible to retaliatory tariffs from other countries (e.g. southern cotton exporters in the 19th century). Moreover, tariffs artificially raise prices for everyone. Buy American was also an awkward topic for mainstream Republicans because they fancied themselves as the more patriotic of the two parties but had mostly supported free trade over the years to boost corporate profits and/or out of a genuine belief that, overall, it helps workers. George W. Bush’s VP Dick Cheney said that globalization has “visible victims and invisible beneficiaries.”

As we saw in the previous chapter, Bill Clinton’s embrace of free trade created a window of opportunity for Ross Perot to garner significant third-party support in 1992, and Hillary Clinton’s ongoing support of globalization along with mainstream Republicans partially explains Donald Trump’s appeal in 2016. In 1992-93, Bill Clinton (D) wanted open trade borders with the United States’ neighbors to the north and south, Canada and Mexico, and, in 2000, he normalized trade relations with China. Two major candidates, Bill Clinton and George H.W. Bush, supporting free trade in 1992 left the door open for a third-party candidate to focus on the outsourcing of labor. In his high-pitched Texan accent, Perot quipped, “Do you hear that giant sucking sound? That’s your jobs leaving for Mexico.” He focused on Mexico because the issue at hand was whether the U.S., Canada, and Mexico would trade freely via NAFTA, the trilateral North American Free Trade Agreement (logo, left). Ronald Reagan promoted NAFTA in the 1980s, George H.W. Bush had agreed to it, and Bill Clinton promised to push it through the Senate and sign it. Perot was wrong about huge numbers of jobs leaving for Mexico, but a lot of American manufacturing and customer-service jobs left for China, India, Vietnam, and other places where companies could pay low wages and pollute the environment without concern for American regulations. There was a giant sucking sound, all right; it just went mostly toward Asia instead of Mexico. Meanwhile, workers came north from Mexico and Central America for jobs that existing Americans either weren’t interested in or asked more for. Historian Thomas Frank cites Clinton’s pro-NAFTA speeches — in which he described how the government would fund re-training for displaced workers but conceded honestly that some would lose their jobs — as weakening whatever residual grip Democrats retained on blue-collar Southerners.

The pro-globalization argument is that free trade and outsourcing improve profit margins for American companies, boost American exports, and lower prices for consumers while providing higher wages and economic growth in developing countries. For free traders, tariffs are just hidden taxes that attempt to arbitrarily pick winners and losers in the economy and create general inefficiency; just let the free market dictate where goods, services, and jobs flow on their own. (NOTE: for pure free traders, all political borders are an impediment to economic growth). Globalizing service jobs like lawyers and architects, as opposed to just goods, would boost the economy even more. Free trade also offers consumers a wider range of products, ranging from Mercedes-Benz and Samsung electronics to Harry Potter novels. Globalization is how the San Antonio Spurs won NBA championships with players from Argentina, France, Italy, and the Virgin Islands and, more generally, why retail and food chains are nearly indistinguishable across Europe and North America. You could drive a German BMW built in South Carolina or an American Ford or John Deere tractor built in Brazil. South Korean LG builds solar panels in Alabama. The smartphone in your pocket (maybe you’re even reading this chapter on it) might come from South Korea but contain silicon in its central processor from China, cobalt in its rechargeable battery from the Congo, tantalum in its capacitors from Australia, copper in its circuitry from Chile or Mongolia, plastic in its frame from Saudi Arabian or North Dakotan petroleum, and software in its operating system from India or America. Another pro-globalization argument is that it creates more jobs than it destroys, as foreign companies that otherwise wouldn’t operate in the U.S. open plants and hire American workers. Honda, from Japan, builds in the U.S. almost all the cars and trucks it sells to Americans.
In 2014, Silicon Valley-based Apple started making Mac Pros® at Singapore-based Flextronics in northwest Austin, creating 1500 jobs.

Opponents of globalization point out that American manufacturers are undersold, costing jobs and lowering wages as companies exploit and underpay foreign workers. Barack Obama’s 2009 stimulus package included a Buy American provision. Are such provisions beneficial to the American economy? For at least some workers, yes. When jobs go overseas, they lose theirs and labor unions lose their hard-earned bargaining power. But tariffs also keep prices artificially high on parts and products, costing other workers and consumers. For instance, steel tariffs help American steel manufacturers but cost American builders and consumers buying steel, who would otherwise buy it cheaper on an open international market. Free trade lowers prices. The U.S. could put a tariff on clothing and save 135k textile jobs. But it would also raise the price of clothing, a key staple item, for 45 million Americans under the poverty line. Globalization, in sum, is why your smartphone didn’t cost $2k but also why you can no longer make good union wages at the local plant with only a GED or high school diploma. New workers at General Electric’s plant in Louisville earn only half of what their predecessors did in the 1980s (adjusted for inflation). Globalization levels the labor playing field, which is bad for some, but not all, of the workers in countries that start off richer.

Return to phones and electronics as an example of globalization’s pros and cons. We take for granted the lower price of products that importing and outsourcing make possible, and might not notice the increased productivity our devices allow for on the job, but we take notice that Americans aren’t employed assembling electronics. In Walmart’s case, we might notice a “Made In China” tag on items but not realize that their lower prices have curbed retail inflation in the U.S. over the last 35 years. (Walmart also saved money by selling bulk items, using bar-codes, and coordinating logistics with suppliers, all now customary in retail.) Free trade and outsourcing also help stock returns, because large American corporations not only can make things cheaper, they also do half of their own business overseas. The stock market helps not only the rich but also workers with company pensions and 401(k)s that rely on growing a nest egg for retirement.

Most importantly, the U.S. exports too, and when it puts up protective tariffs other countries retaliate by taxing American goods. That happened famously when the Smoot-Hawley Tariff of 1930 worsened the Depression, stifling world trade. The shipping company pictured below, UPS, is based in Atlanta and it boosts America’s economy to have them doing business overseas. No globalization; no UPS gondolas in Venice piloted by a gondolier checking his cheap smartphone. Globalization, then, is a complex issue with many pros and cons, some more visible than others. For a bare-bones look at the downside of globalization, view the documentary Detropia (2013), which traces the decline of unionized labor and manufacturing in one Rust Belt city, or just look at the dilapidated shell of any abandoned mill across America. There aren’t any comparable documentaries concerning the upside of globalization since that’s harder to nail down. When it comes to what psychologist Daniel Kahneman called “fast and slow thinking,” we can see the downside of globalization in five seconds but might need five minutes to really think through the upside. The British magazine The Economist, which has promoted free trade now for over 175 years, explained the ongoing appeal of protective tariffs among voters more eloquently than Dick Cheney, if less succinctly: “The concentrated displeasure of producers exposed to foreign competition is more powerful than the diffuse gratitude of the mass of consumers, and so tariffs get reimposed.” Likewise, immigration (which overlaps with the issue of globalization) is favored by most economists as good for the overall economy, but it victimizes certain demographics/sectors who lose jobs to immigrants willing to work for less. It’s always been thus in American history, as you may remember from reading about earlier immigration controversies in Chapters 2 and 7.

The 1992 campaign drew attention to globalization, as did the protests and riots at the 1999 World Trade Organization conference in Seattle. The WTO is the successor to GATT, part of the economic framework the West created after World War II, along with the World Bank and International Monetary Fund, to stimulate global capitalism. The rioters were protesting against the WTO’s free trade policy and the tendency of rich countries to lend money to emerging markets with strings attached, sometimes mandating weak environmental regulations and outlawing unions. Working conditions often seem harsh and exploitative from a western perspective even if the job represents a relatively good opportunity from the employee’s perspective. Apple lays off or adds 100k workers at a time in its Chinese facilities, a mobilization on a scale the U.S. hasn’t seen since World War II and wouldn’t tolerate. At the WTO riots, protesters threw bricks through the windows of chains like Starbucks that they saw as symbolizing globalization.

In the 2010s, the outsourcing trend reversed some, as more manufacturing jobs returned to the U.S. Some companies, like General Electric, realized that they could monitor and improve on assembly-line efficiency better close to home, while other factors included the rising costs of shipping and rising wages in countries like China and India, which started to approach those of non-unionized American labor in right-to-work states. Yet, insourcing also included foreign workers. Under H-1B non-immigrant visas, companies could hire temporary immigrants to do jobs for which there were no “qualified Americans.” Due to lax oversight, some companies started to define qualified as will do the same job for less money. In 2016, Walt Disney (250 in Data Systems) and Southern California Edison (400 in IT) fired hundreds of American employees and even required them to train their replacements from India before picking up their final paycheck. Corporate America is currently lobbying Congress to loosen H-1B restrictions, while Trump vowed to get rid of the H-1B visa program in his 2016 campaign.

Globalization continues to be a controversial topic in American politics, but tariffs/protectionism versus free trade isn’t the only issue; there’s also the question of how fair trade agreements are once countries agree to trade. Keep in mind that pure, free trade rarely exists except in economics classrooms. And trade agreements aren’t one-page contracts that declare: “No rules whatsoever. It’s a free-for-all” in large font above the picture of a handshake emblazoned over a Maersk container ship or a picture of John Hancock smuggling bootleg rum into 18th-century Boston. They’re more like legal documents hundreds of pages long that make it difficult for the average citizen to parse out what they really include, and they almost always include tariffs on some things and not on others.

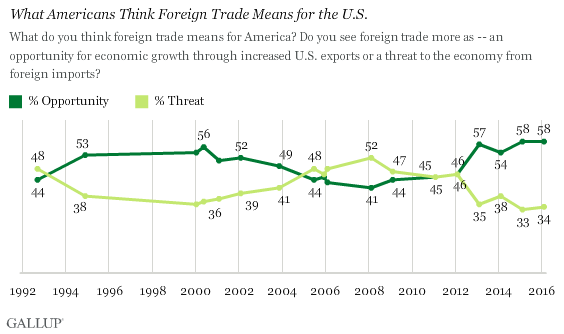

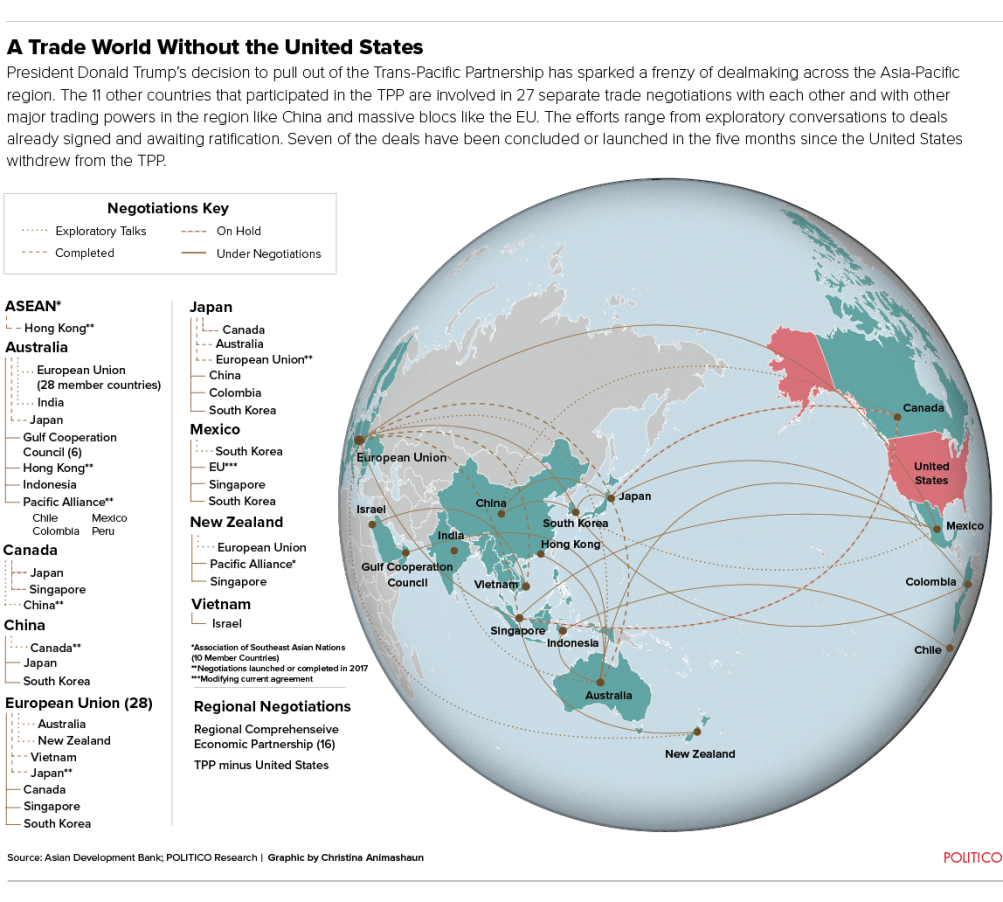

The 2016 election saw two populist candidates, Trump (R) and Bernie Sanders (D), opposed to free trade and they even pressured Hillary Clinton (D) into taking an ambiguous stand against President Obama’s Trans-Pacific Partnership (TPP), which loosened trade restrictions and lowered tariffs among twelve Pacific Rim countries, including the NAFTA members (U.S., Canada, Mexico), that constitute 40% of the world economy. George W. Bush also supported the TPP. If not opposed to trade outright, Sanders and Trump at least wanted to rework the terms of the agreement, though it’s difficult in multilateral (multi-country) agreements to have each country go back to the drawing board because then the others might want to renegotiate and the whole process gets drawn out or falls apart. Part of the reason for the TPP’s unpopularity among Americans was that the negotiations were necessarily secretive (or at least not open to the public) and it seemed that something was being done behind their backs without their input, even though that something might’ve been good.

Trump and his advisor Steve Bannon took advantage, blasting through the door cracked open by Perot a quarter-century earlier, winning big in rural areas and the Rust Belt hit hard by globalization. Politicians, by and large, are aware that globalization is probably a net gain for Americans, but there are economic pockets that lose and are motivated to vote against free trade. It’s tempting to tap into those voters and they deserve to be heard as much as anyone else. Steelworkers can argue that if farmers get subsidies and bankers get bailouts, why shouldn’t they get to “level the playing field” with protective tariffs? You can sympathize with their need for a steady paycheck if not their use of the term level (tariffs artificially unlevel the playing field). Trump tapped the energy of voters disadvantaged by free trade while sagely keeping his bark bigger than his bite. Once in office, he tinkered with existing trade deals (other than TPP) without dismantling them, which would’ve cratered the U.S. economy.

But one point of TPP was to check China’s growing hegemony in Asia by striking agreements with its neighbors but not with China itself, pressuring Beijing by surrounding it with American trading partners and potential allies. With the TPP, North America was signaling to China that it could take its business elsewhere in Asia (of course, the TPP’s strongest proponents still intended to do a lot of business with China, just on better terms). The TPP provided the option of converting to A-B-C (anyone but China) supply chains if necessary for military purposes. When Trump pulled out of the TPP he lost leverage vis-à-vis China, weakening America’s negotiating position. Moreover, according to the American Farm Bureau Federation, leaving TPP cost farmers ~$5-8 billion in annual exports.

The controversy over Sino-American trade (aka Chimerica) couldn’t be reduced to a simple question of whether one preferred globalization or protectionism (tariffs); rather, it involved disagreements over the terms of bilateral trade. China joined the WTO in 2001 but refused to play by the rules, choosing clear winners and losers in its economy with subsidies and devaluing its yuan currency to boost exports and discourage imports. China subsidizes state-owned companies (aka SOEs or national champions), making it difficult for truly private companies to compete. Big technology firms like Alibaba and Tencent are financially independent but China’s government regulates Internet traffic and uses its Sesame Credit software to encourage loyalty among the population. When the dust settled after a half-century of Cold War, America ironically found itself dependent on a hybrid communist economy for cheap labor and supply chains. In a turn equally unforeseen by Mao Zedong, China morphed into a profit-driven economy with big state-owned firms, loosely comparable to the way the U.S. government sanctioned the biggest phone company (Bell) as a regulated monopoly between World War I and 1982. China didn’t embrace its own version of capitalism to join the West, but rather to become more independent.

China’s relationship with the U.S. wasn’t horrible but degenerated in the first decades of the 21st century with China’s increased military strength (especially naval) and its rampant cyber-theft of American technology, including military technology. The 2007-09 financial meltdown leveled the playing field, narrowing the gap as measured by America’s GDP advantage; China is now ~ 60% as big economically. The International Monetary Fund chart on the right shows how countries ranked by annual GDP in 2018, including how California and Texas would’ve ranked had they been countries (the x-axis measures U.S. dollars in trillions).

American companies also complained to the WTO or Obama administration about having to sign technology-sharing agreements to do business in China (violating WTO rule #7), but only under the condition that the administration or WTO not complain too loudly or tell China who complained about them in much detail. Their top priority, in other words, was to continue to do business in China — by then their biggest or at least fastest-growing market — even if it meant being blackmailed into sharing technology. There were also some products, not all, where Chinese tariffs on incoming American imports were higher than the tariffs the U.S. charged on Chinese goods. The same phenomenon happened during Trump’s administration. During his trade stand-off, and while China’s government was repressing Muslim Uyghurs in its Xinjiang region, crushing democracy in Hong Kong, enforcing digital censorship, and dabbling in cyber-theft, American companies like Starbucks® and Apple® were describing China to their shareholders as “remarkable” and “phenomenal.” Pro basketball was mostly silent on Xinjiang and Hong Kong as well, even as NBA players wore jerseys emblazoned with equality and justice. Global companies were willing to look away because of China’s growth potential, weakening American politicians’ leverage.

Obama inked an agreement with Xi Jinping in 2015 for both countries to curtail cyber-theft. The Trump administration’s National Counter-Intelligence and Security Center conceded that this agreement had been mostly effective, at least on non-military items. Also, the U.S. Chamber of Commerce lists China pretty low on its list of countries that violate trade terms. Post-2015, China is cooperating fairly well with the WTO while the Trump administration is undercutting the agency by blocking the appointment of the judges that rule on disputes. We should also remind ourselves that other countries, including the U.S., have passed tariffs, favored certain industries with subsidies, and stolen each other’s technology — military and commercial — plenty over the years. Alexander Hamilton and Abraham Lincoln saw tariffs as the key to economic strength. Americans prided themselves on industrial/military espionage during the early Industrial Revolution and Cold War. Still, there was widespread, bipartisan concern after China stole plans of Lockheed Martin’s F-35 Lightning II, a stealth fighter that cost the U.S. years of money and research.

Consistent with his campaign promises, Trump started a tit-for-tat tariff war with China, hoping that America could absorb the short-term damage long enough to outlast China and force them to back down. He slapped a 10-25% import duty on nearly half of the products shipping to the U.S. (including dishwashers and solar panels) and China retaliated with tariffs on American agricultural exports. China also cut off recycling for all but cleaned plastics in 2017. Chinese-American trade is the world’s largest and most important exchange and their ensuing trade war was the biggest in history.

There’s no good reason for China and the U.S. to start a military war — and that would likely destroy each country’s economy, at least temporarily — but many Americans aren’t comfortable with potentially being surpassed as the world’s #1 economic and military power, even though China’s population is 4x larger than America’s. Some analysts see a new Sino-American cold war brewing and are hoping to avoid a Thucydides Trap scenario whereby the two countries stumble into an unnecessary war just because China is growing at a faster rate than the U.S. (as was ancient Athens in relation to Sparta, described by the historian Thucydides in History of the Peloponnesian War). As we saw at the end of the Vietnam War chapter, the goal of Obama’s proposed Asia Pivot was to gradually shift more American troops and bases from the Middle East to Asia to protect Japan, South Korea, Taiwan, the Philippines, and Vietnam from potential Chinese aggression. This is one area where Trump supported multilateralism, keeping the U.S. engaged with the Quadrilateral Security Dialogue (aka the Quad, QSD or Asian NATO), through which the U.S., Australia, India, and Japan check Chinese expansion in the South China Sea, where China builds artificial islands as military bases.

The aforementioned “China Model” of state-owned enterprises (SOEs) mixed with private entrepreneurship is also one Americans would prefer to see fail, though America’s South Korean allies have had success with state-favored, but not state-owned, chaebols like Samsung, LG, and Hyundai-Kia. Neither China nor South Korea sees free competition as particularly important to economic success. Led by Deng Xiaoping in the ’80s and Xi Jinping (2012- ), China’s communist party planned the economy years in advance and retained control of natural resources and energy while privatizing most other businesses. Americans drank their own Kool-Aid® about how China’s modernizing economy would naturally open up their political system to more democracy, etc., but it never happened. American companies, meanwhile, didn’t really care about China’s system so much as the billion+ potential customers (and workers) that lived there. New York Times columnist Thomas Friedman wrote that “Beijing placated us by buying more Boeings, beef and soybeans.”

In Chapter 13 we saw how the U.S. and Britain remade the world economy in their image after World War II with the World Bank, International Monetary Fund and pro-free trade organizations like GATT > WTO (the World Trade Organization). In Bad Samaritans, Ha-Joon Chang argued that they “kicked the ladder out from under” emerging markets with “asymmetrical demands” after wealthy countries like the U.S. and Britain grew strong through tariffs in the 19th century and Keynesian stimulus spending in the 20th, both of which are forbidden for loan recipients. There may be some truth to that, but China shouldn’t have joined the WTO in that case. By the 2010s, China wasn’t just an emerging economy in the old sweatshop/light manufacturing sense of the word. Chinese firms operated in robotics, AI, 3-D printing, microchips, facial recognition software, electric and autonomous cars, etc. They included companies like Huawei, which challenged western telecom with its 5G wireless platform. Controversy swirled around the firm in 2019 as the U.S. encouraged other western countries to cut it off and the CEO’s daughter, Meng Wanzhou, was detained in Canada for not honoring sanctions against Iran.

Avoiding conflict with China or another cold war was not on Trump’s agenda when he came into office, nor on that of his chief strategist, Steve Bannon. They welcomed an “economic war” with China as their top priority. But Trump’s first staff/cabinet was divided into globalist and nationalist camps. On the globalist side were Treasury Secretary Steve Mnuchin and chief economic advisor Gary Cohn, of Goldman Sachs, who argued for ironing out disagreements but maintaining mutually beneficial trade. On the nationalist side of the debate were Trump, Peter Navarro (lifelong Democrat and author of Death By China), and Bannon. Bannon, an economic nationalist, saw globalization as a transfer of wealth from working-class Americans to elites and foreigners and the Sino-American relationship as purely dualistic: they couldn’t co-exist and both thrive as trading partners. Bannon said, “There’s no middle ground. One side will win; one side will lose.” Bannon’s take on China was similar to that of his hero, Ronald Reagan, toward the USSR. As a naval officer, Bannon was upset with Jimmy Carter during the Iranian Hostage Crisis and admired Reagan’s strategy of winning the Cold War outright instead of co-existing with détente. Bannon had an interesting post-military résumé, with stints as a Wall Street investment banker, Hollywood producer, documentarian, and co-founder of alt-right Breitbart (2007- ) and Cambridge Analytica (2013-18). Trade isn’t war, though. Historians warned that by severing ties with China in hopes of security, we could inadvertently strengthen China, making it more self-sufficient in the same way that the War of 1812 strengthened the U.S. vis-à-vis Britain. Likewise, cutting the U.S. off from promising engineering students would only cause a “brain-drain” to other countries, even if it prevented a few spies from slipping through. Increasingly, not all technology transfer will be from America to China.

Gary Cohn tried to arrange a meeting between Trump and American end-users of steel and aluminum — those that would be driven out of business or lose money with steel tariffs — but Trump refused and raised tariffs, leading to Cohn’s resignation. The nationalists won the debate. Bannon told PBS Frontline that Trump’s view of Chinese trade as purely bad for America was his only fully-formed worldview when he came into office and that he’d learned it almost exclusively by watching journalist Lou Dobbs for years on CNN then FOX. In the 1980s, as a celebrity real estate mogul, Trump directed most of his ire toward Japan, but he’d transferred his focus to China by 2000. Bannon and Trump saw American manufacturing as having grown weak, while economists saw it as strong but increasingly automated, providing fewer jobs.

But pretending trade is simple or having a simple understanding of trade doesn’t, unfortunately, make trade simple. American farmers who exported meat, grain, wine, and dairy products to food importers like Vietnam and Japan didn’t fully think things through when they supported protectionism and opposed TPP in the 2016 election, just as they didn’t realize how much corn they exported to Mexico when denouncing NAFTA or how many soybeans they sent to China. When corn prices plummeted after Trump threatened to dismantle NAFTA, he agreed to pull back and renegotiate instead. In Spring 2018, Trump threatened tariffs on steel and aluminum from Mexico and Canada, and in Fall 2018 started renegotiating a slightly re-branded version of NAFTA called USMCA (United States-Mexico-Canada Agreement) that the respective countries signed in 2019-20. The deal gives U.S. producers greater access to the Canadian dairy market and requires that automobiles get 75% of their parts from within North America to qualify for tariff-free imports to the other countries. While more or less just a rebranded NAFTA, USMCA’s passage in Congress at least involved a rare bipartisan agreement between Trump and House Speaker Nancy Pelosi (D-CA).

With China, specifically, there was a widespread consensus, shared by Trump and Democratic politicians like Pelosi and Senator Chuck Schumer (D-NY), that China was violating the terms of its newfound WTO membership with unfair tariffs, forced technology transfers, currency manipulation (keeping its currency artificially low to boost exports), and intellectual property theft. Neither party grasped (or chose to grasp) that the 2015 Obama-Xi deal on cyber-theft was largely effective on non-military technology. Both parties wanted to resume trade, though, once new terms could be hammered out. Trump and Bannon weren’t really protectionists; they were just using tariffs as a temporary tool to renegotiate with China.

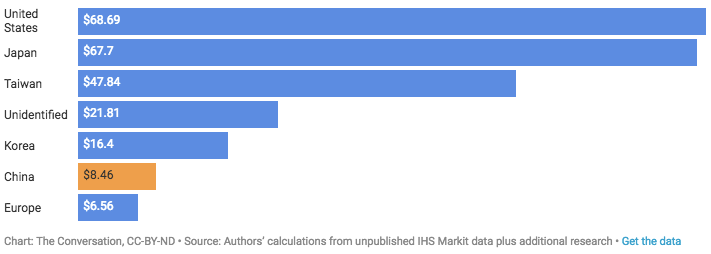

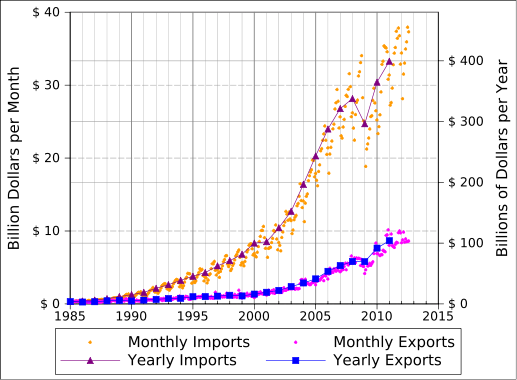

Looking at America’s negative balance of trade with China (importing more than we export; see chart on right), Dobbs and Trump saw deficits as a harbinger of declining economic might — what Trump was referencing in the Tweet® above by saying “when we are down a hundred billion…” In this zero-sum view, money is simply funneling from one country to the next. But, in contrast to Dobbs, Trump, Bannon, and Navarro, most economists aren’t as focused on trade deficits, pointing out that wealthier countries tend to import more than they export because they have more money to spend. Importing products, after all, is voluntary; if the U.S. wanted to level the trade deficit it could just buy fewer things. Also, trade deficit/surplus stats can be misleading because, if a finished product like phones goes from China to the U.S., it counts as being in China’s favor even though various countries “added value” to the product along the way, including the U.S.; it was just assembled in China. The chart below shows how many countries contributed to an iPhone, even though China got all the credit in the balance of trade because that’s where the final product shipped from.

Trump preferred to see less of the supply chain overseas, though, for products deemed essential to national security like steel and aluminum. The wisdom of simplifying or diversifying supply chains could be seen in the 2020 COVID-19 outbreak, when the U.S. was caught short on medical supplies. Trump wanted out of broad multilateral agreements and to renegotiate one-on-one bilateral agreements of the sort the U.S. had forged with South Korea (KORUS) under Bush 43 and Obama except that Trump’s “beautiful deals” would improve the terms. Trump was less ideological than transactional — hoping to trade, but with improved trade terms because he saw the old terms as disadvantageous to the U.S. As Americans followed these debates, they rarely knew what, exactly, those trade terms were in the first place and it wasn’t 100% clear that Trump did either. He just told voters that other countries “were raping us” and that our previous presidents were “stupid.”

But economists point out that when one country overplays its hand, other countries ice it out and sign separate agreements with each other (above), hence the advantage of multilateral pacts. Countries are more willing to lower their own tariffs if it gives them access to multiple countries’ products, not just one. A new TPP-11 (led by Japan but excluding the U.S. and, still, China) formed immediately after Trump’s withdrawal announcement. By mid-2017, New Zealand, Australia, Canada, and the EU started negotiating lower tariffs with Asian food importers, hoping to undersell American farmers. With America and post-Brexit (post-EU) Britain on the sidelines, the European Union renegotiated with Vietnam, Malaysia, and Mexico, and Japan offered the EU the same deal on agricultural imports that it took the U.S. two years to hammer out during the TPP negotiations. An alliance of Latin American countries including Mexico, Peru, Chile, and Colombia formed their own Pacific Alliance to negotiate with Asian countries while China, sensing blood in the water, formed a 15-country regional partnership in Asia to rival TPP-11.

The cumulative effect of the 2007-09 Financial Crisis, the protracted Sino-American trade war, and COVID-19 has led to what The Economist called slowbalization. You can surmise the advantages and disadvantages of that trend if it continues into the 2020s based on what you’ve read: essentially an inversion of the good and bad we saw in the 1990s and ’00s. The trend is already beginning in Japan, Britain, India, and the United States as governments encourage self-sufficiency. Expect the trend of poorer countries gaining ground to level off and prices to rise in wealthier nations, while a more fractured world will make international cooperation on issues like climate, diplomacy, and health more challenging.

In January 2020, the U.S. and China had just signed Phase One of a new trade deal that made some headway on technology sharing and intellectual property rights abuses and reducing agricultural and manufacturing tariffs when talks were interrupted by the pandemic. With China Phase One, South Korea’s KORUS, and NAFTA/USMCA, Trump incrementally improved on and re-branded existing deals. They were a little bit more beautiful. But Chinese relations soured in Spring 2020 when Trump stressed COVID-19’s origins in Wuhan and he tried to score election points by spinning Joe Biden as soft on China the way Republicans had against Harry Truman in 1949 (Chapter 15), undercutting negotiations. Just after the 2020 U.S. elections, perhaps hoping to fend off the potential of Biden renewing TPP talks, China inked its own agreement with neighboring Asian countries: the Regional Comprehensive Economic Partnership (right). Reversing the advantage the U.S. would’ve had with the TPP, China can now dictate the terms of America’s trade with the entire region. In the meantime, Biden will inherit Trump’s smoldering trade war.

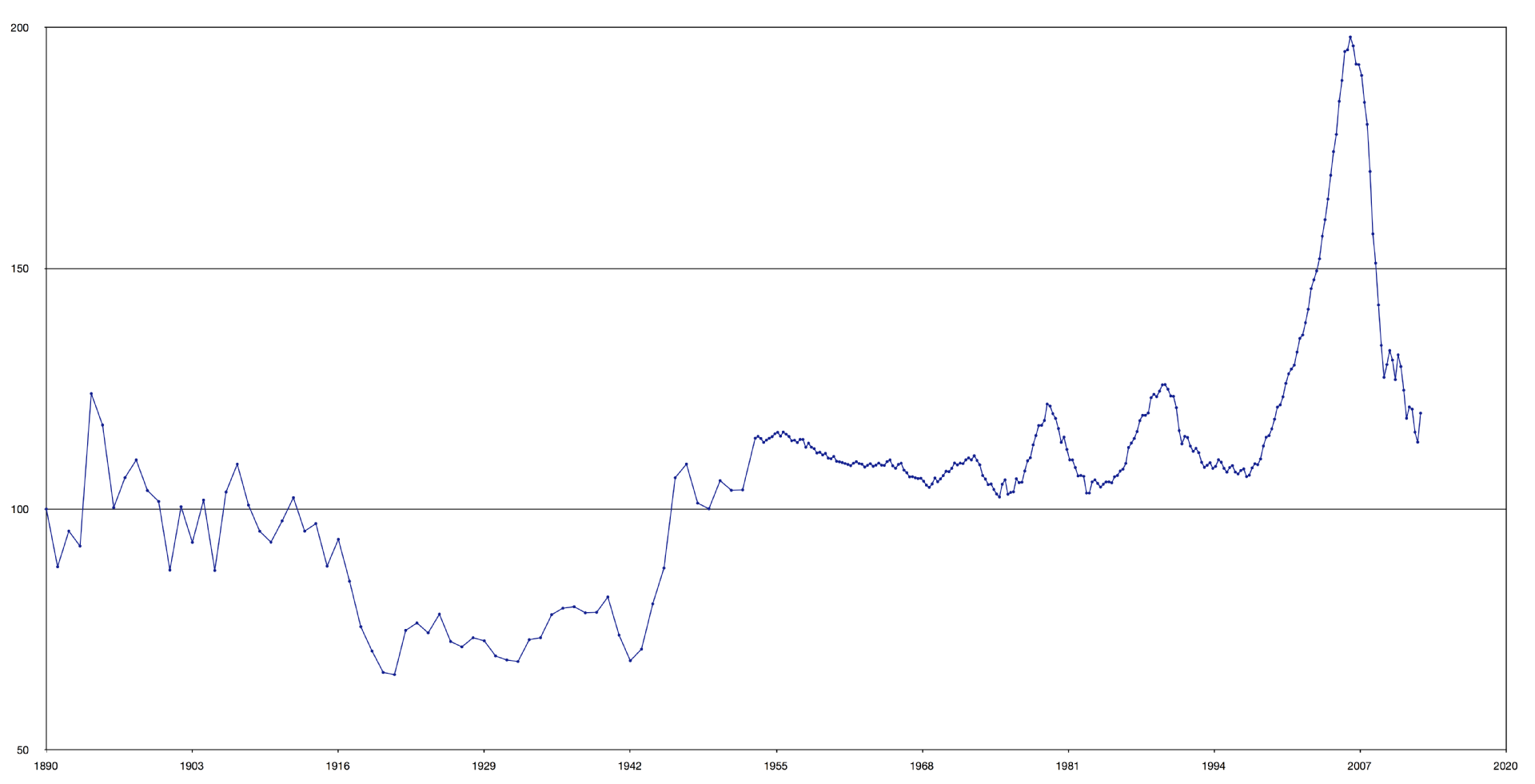

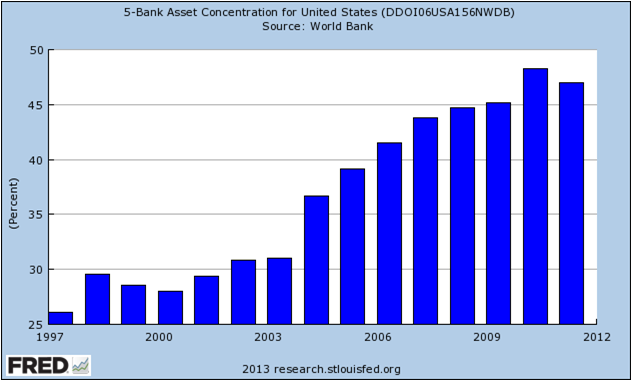

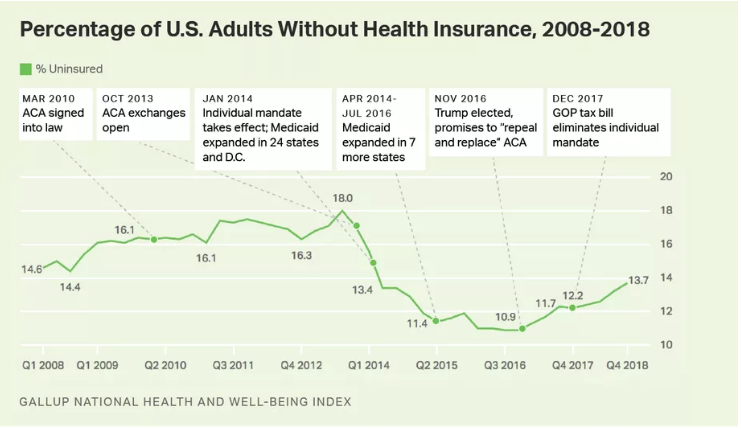
